The end of the Internet has been predicted many times over the years. The most famous of these predictions came in 1995 from Bob Metcalfe, one of the founding fathers of the Internet, who forecast ‘a catastrophic collapse’ of the Internet by the end of 1996. As the story goes, he literally had to eat his words at the 1997 World Wide Web conference. Since then, many more splashy headlines have announced the ‘end of the Internet’, citing a variety of reasons:

- The technology has reached its limit: it is no longer technically and/or economically possible for the network capacity to keep up with the increase in traffic.

- The model is no longer sustainable: the exponential increase in traffic could result in infrastructures that consume energy at a level that future energy production would be unable to handle.

- The lack of security becomes unacceptable: a losing battle against viruses, malware, phishing, denial-of-service attacks and other threats renders the Internet downright unusable. Other scenarios come to mind, including a global technical outage or a coordinated attack intended to destroy the Internet’s ability to function properly.

- The Internet is no longer global and open: sovereignty concerns raised by generalised surveillance could fragment the unified global Internet into a two-speed (or multi-speed) Internet, or into regional or national Internets with little or no ‘free circulation’ of data between them.

So, will the ‘exponential’ growth in traffic bring the ‘end of days’ for the Internet by 2023? That is the prediction laid out in a recent article by Andrew Ellis, a British academic specialising in optical transmission. The article was widely cited in the press, as is often the case with doomsday predictions. Professor Ellis describes a ‘capacity crunch’ stemming from the ceiling on optical transmission speeds over fibre, a limit that may prove insurmountable.

The backbone of the Internet: it all comes down to cables

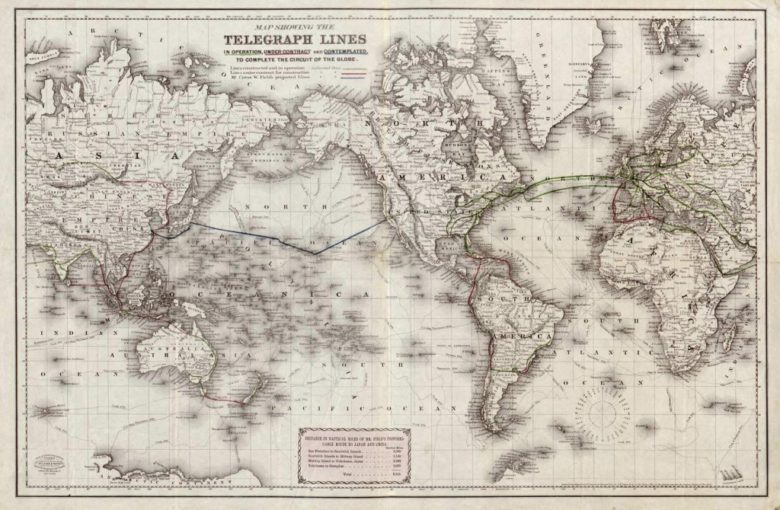

Almost all Internet networks that transport information over long distances are made up of optical fibres, so undersea and overland cables form the backbone of the Internet. Fibres began replacing undersea coaxial cables and radio-relay networks in the late 1970s. Those technologies had themselves replaced the telegraph lines that formed the first ‘global Internet’ and encircled the globe in 1870.

The telegraph network in 1870: a Victorian version of the Internet

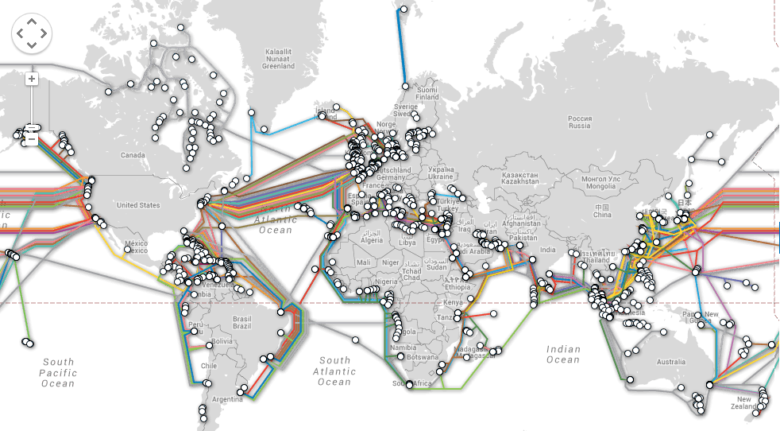

Map of undersea optical cables

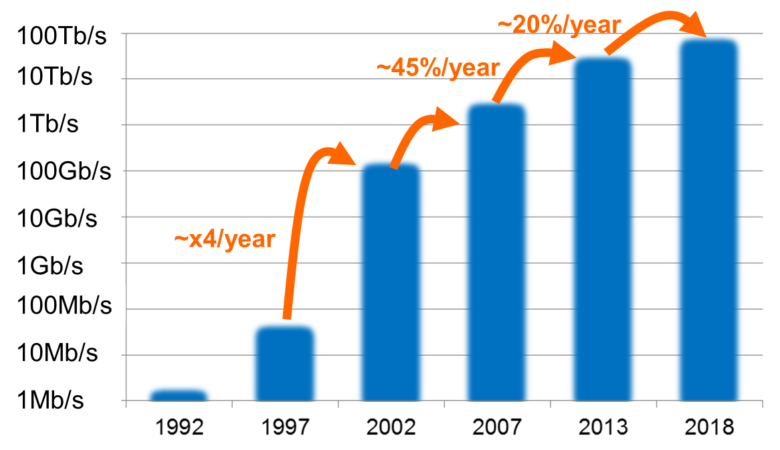

Since its beginnings in the late 1980s, Internet traffic has increased rapidly. In 1992, worldwide traffic was estimated at around 100 GB per day. By 1997 it had risen to 100 GB per hour, and by 2002 to 100 GB per second. In 2014, 100 GB were exchanged over the Internet every 1/400th of a second! After this 20-million-fold increase between 1992 and 2015, traffic is now growing at 35-50% per year. For the coming years, growth is forecast at around 20-25% per year.
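To give a feel for what these annual rates mean, here is a minimal sketch that converts a growth rate into a doubling time and a ten-year growth factor. It assumes constant compound growth, which is a simplification; the rates are the ones quoted above, and the function names are illustrative. At 25% per year, for example, traffic doubles roughly every three years.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for traffic to double at a constant compound annual growth rate.

    annual_growth is a fraction, e.g. 0.25 for 25% per year.
    """
    return math.log(2) / math.log(1 + annual_growth)


def growth_factor(annual_growth: float, years: float) -> float:
    """Multiplicative increase in traffic after `years` of compound growth."""
    return (1 + annual_growth) ** years


if __name__ == "__main__":
    # Current rates (35-50%/year) and forecast rates (20-25%/year) from the text.
    for rate in (0.50, 0.35, 0.25, 0.20):
        print(
            f"{rate:.0%}/year: doubles every {doubling_time_years(rate):.1f} years, "
            f"x{growth_factor(rate, 10):.1f} over 10 years"
        )
```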

Growth of global Internet traffic

Compiled from Cisco [3-5], Global IP Traffic Forecast and Methodology, 2006-2011 and Visual Networking Index: Forecast and Methodology, 2013–2018.

Two avenues have been explored to handle this growth:

The first is to increase the carrying capacity of each fibre, thanks to the ingenuity of researchers and engineers who have continually pushed back its limits. In 1990, each optical wavelength carried 2.5 Gbps. From 1990 onwards, Wavelength Division Multiplexing (WDM) made it possible to carry several wavelengths in each optical fibre. Combined with optical amplification, WDM brought a major increase in fibre speeds over very long distances. This technology made it possible to reach 10 Gbps per wavelength in 1996, and today operational speeds reach up to 100 Gbps per wavelength.
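The per-wavelength milestones above can be turned into an implied compound annual growth rate, which makes it easier to compare them with the traffic growth rates quoted earlier. This is a minimal sketch: the year used for ‘today’ (2015) is an assumption based on the article’s date, and the figure covers a single wavelength only. It leaves out the multiplication of wavelengths per fibre brought by WDM, so it understates the growth of per-fibre capacity.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two observations."""
    return (end_value / start_value) ** (1 / years) - 1


# Per-wavelength operational rates quoted in the text (Gbps).
# Assumption: "today" is taken to be 2015, the year of the article.
milestones = {1990: 2.5, 1996: 10.0, 2015: 100.0}

years = sorted(milestones)
for y0, y1 in zip(years, years[1:]):
    rate = cagr(milestones[y0], milestones[y1], y1 - y0)
    print(f"{y0}-{y1}: {milestones[y0]} -> {milestones[y1]} Gbps, about {rate:.0%}/year")

overall = cagr(milestones[1990], milestones[2015], 2015 - 1990)
print(f"1990-2015 overall: about {overall:.0%}/year per wavelength")
```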

The second solution involves simply increasing the number of cables, and therefore fibres; investor consortiums are financing the laying of new undersea cables. Telecom operators are rolling out overland loops that link major cities at the continental level.

Any exponential growth, outside of abstract mathematical spaces, will eventually reach a limit. Orange lays undersea cables with its own cable ships and operates a global network of 450,000 km of fibre, enough to encircle the globe more than 10 times. For Orange, it is therefore important to know how quickly we will reach the fundamental limits of optical transmission.

The limits of optics

To begin with, we should clarify that the ‘capacity crunch’ problem does not apply to ‘La Fibre’, which today provides television, Internet and telephone services to more than 4 million connectable customers in France. Fibre to the Home (FTTH) raises questions about roll-out costs, but the bit rates individual customers need (typically 100 Mbps to 1 Gbps) are far below what a fibre can carry, so each customer can enjoy the full bit rate they require.

The problem concerns the Internet’s core transport network, where the aggregated traffic of millions of customers is exchanged between Europe and the USA, or between two major French cities.

Readers interested in this topic will find an accessible overview of the limits of optical transport in [6].

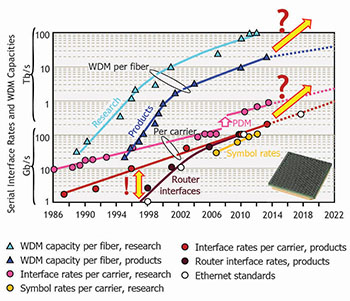

Improvements in optical transport performance (source: Winzer 2015 [6]). Operational limits per wavelength (red circles) and per fibre (dark blue triangles).

The WDM systems already deployed use 80 different wavelengths within each fibre. In the current network architecture, each wavelength transports 100 Gbps over the distances the network requires (a range of several hundred km). Each fibre thus has a capacity of 8 Tbps (80×100 Gbps). Starting from this baseline, our researchers are looking at two timeframes.
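The capacity of a WDM fibre is simply the number of wavelengths multiplied by the rate each one carries. The sketch below reproduces the 8 Tbps figure and, assuming the 80 wavelengths sit on the fixed 50 GHz grid mentioned later in this section, derives the occupied spectrum and the spectral efficiency (bits per second per hertz). The helper names are illustrative.

```python
GBPS = 1e9
GHZ = 1e9


def wdm_capacity_bps(channels: int, rate_per_channel_bps: float) -> float:
    """Aggregate capacity of one fibre carrying `channels` WDM wavelengths."""
    return channels * rate_per_channel_bps


def spectral_efficiency(rate_per_channel_bps: float, grid_spacing_hz: float) -> float:
    """Bits per second carried per hertz of optical spectrum occupied."""
    return rate_per_channel_bps / grid_spacing_hz


channels = 80        # wavelengths per fibre (from the text)
rate = 100 * GBPS    # rate per wavelength (from the text)
spacing = 50 * GHZ   # assumption: fixed 50 GHz grid spacing

capacity = wdm_capacity_bps(channels, rate)
print(f"Fibre capacity: {capacity / 1e12:.0f} Tbps")                              # 8 Tbps
print(f"Occupied spectrum: {channels * spacing / 1e12:.0f} THz")                 # 4 THz
print(f"Spectral efficiency: {spectral_efficiency(rate, spacing):.0f} bit/s/Hz")  # 2 bit/s/Hz
```

Raising capacity without new fibre therefore means either packing more bits into each hertz (higher spectral efficiency) or using more spectrum, which is what the techniques in the next paragraph aim at.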

In the short term, the goal is to keep improving bit rates without replacing the fibres currently in use or modifying the existing architecture of transport networks. This means using increasingly advanced digital technologies (higher-order QAM, Nyquist filtering, advanced digital signal processing, error-correcting codes, optical amplification, etc.). The next generation of WDM systems will probably be able to transport approximately 20 Tbps per fibre, though over shorter transmission distances. The upper limit has been estimated at 75 Tbps for the distances our network architecture requires. These approaches are beginning to reach their limits as we get ever closer to the Shannon limit, the theoretical maximum rate at which information can be transmitted error-free over a noisy channel. Reaching these capacities may also require more flexibility, for example in how the optical spectrum is occupied (a new flexible grid to replace today’s fixed wavelength grids spaced at 50 GHz). Other techniques, such as multi-band transmission, are also seen as ways to manage optical bandwidth more flexibly and effectively. Another approach involves using several parallel WDM systems (e.g. 80×100 Gbps) on several of the fibres within a given cable.
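The Shannon limit mentioned above bounds the capacity of a channel of bandwidth B and signal-to-noise ratio SNR at B·log2(1 + SNR) bits per second, per independent polarisation. The sketch below applies this linear formula to an assumed 4 THz band (80 channels on a 50 GHz grid) for a few illustrative SNR values, which are not measured figures. It ignores fibre non-linearities, which in practice limit the usable SNR, so it is an optimistic bound and is not meant to reproduce the 75 Tbps estimate, which depends on bandwidth, distance and effects this formula does not model.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float, polarisations: int = 2) -> float:
    """Linear (AWGN) Shannon capacity, summed over independent polarisations.

    C = P * B * log2(1 + SNR). Fibre non-linearities are ignored, so this is
    an optimistic upper bound rather than a prediction for a real link.
    """
    snr_linear = 10 ** (snr_db / 10)
    return polarisations * bandwidth_hz * math.log2(1 + snr_linear)


bandwidth = 80 * 50e9       # assumption: 4 THz, i.e. 80 channels on a 50 GHz grid
for snr_db in (10, 15, 20):  # illustrative SNR values only
    c = shannon_capacity_bps(bandwidth, snr_db)
    print(f"SNR {snr_db} dB: at most {c / 1e12:.0f} Tbps over {bandwidth / 1e12:.0f} THz")
```

Because capacity grows only with the logarithm of the SNR, each extra bit per hertz costs roughly a doubling of signal power relative to noise, which is why gains get harder as systems approach the limit.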

Once current fibres reach their limits, traffic growth will still have to be supported while keeping costs under control. To prepare for this transition, researchers are now working on new optical media and/or very different network architectures. There are many proposals, most of them based on ‘multi-core’ and/or ‘few-mode’ fibres. Multi-core fibres contain several cores, each forming an independent parallel optical channel. Few-mode fibres allow several parallel optical flows, each carried by a different spatial mode, to coexist within a single core. All these techniques have been demonstrated in research, but fibres with ‘several spatial modes’ and the related technologies (optical amplifiers, spatial MUX/DMUX, ROADM, etc.) are not yet mature. It would therefore be premature to count on these solutions if the need arose soon. In any case, technical advances would have to increase overall capacity by at least two orders of magnitude (x100) to justify rolling out a new generation of fibre (comparable to the performance gains obtained when optical fibres replaced coaxial cables).
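One way to see why a x100 gain is the threshold for a new fibre generation is to ask how many years of traffic growth a given capacity multiplier absorbs. This minimal sketch assumes, as a simplification, that demand on a given fibre route grows at the forecast global rate of 20-25% per year. Under that assumption, going from 8 to 75 Tbps on current fibres buys roughly 10 to 12 years, while a x100 gain buys roughly 20 to 25 years.

```python
import math


def years_of_headroom(capacity_gain: float, annual_growth: float) -> float:
    """Years of compound traffic growth absorbed by a given capacity multiplier."""
    return math.log(capacity_gain) / math.log(1 + annual_growth)


# Assumption: traffic on a fibre route grows at the forecast global rate.
scenarios = {
    "current fibres, 8 -> 75 Tbps": 75 / 8,
    "new fibre generation, x100": 100.0,
}
for label, gain in scenarios.items():
    for growth in (0.20, 0.25):
        years = years_of_headroom(gain, growth)
        print(f"{label}: about {years:.0f} years at {growth:.0%}/year")
```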

Making various technologies work together to handle the growth in Internet traffic

Optics is not the only technology whose advances will help transport growing Internet traffic. Orange’s researchers, like the industry as a whole, are tackling the problem from several angles:

It is possible to improve the performance of physical transport technologies (fibre optics or radio) or to reduce the quantity of information needed to transmit a given video or piece of content. New video codecs like HEVC, which Orange helped create, need half the network capacity to transmit video at the same quality.

Data placement can also be optimised: by pre-positioning the most in-demand content close to consumers, caching (Content Delivery Networks) statistically reduces the need to transport data over long distances.
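The effect of caching can be illustrated with a small simulation: requests whose popularity follows a Zipf-like law hit an edge cache, and every hit is a request that does not cross the backbone. This is a sketch under stated assumptions: the Zipf exponent, catalogue size, cache sizes and the LRU eviction policy are all illustrative choices, and real CDNs use more elaborate placement and eviction strategies.

```python
import random
from collections import OrderedDict


def zipf_requests(n_items: int, n_requests: int, exponent: float = 1.0, seed: int = 0) -> list[int]:
    """Draw content IDs whose popularity follows a Zipf-like law (ID 0 is most popular)."""
    rng = random.Random(seed)
    weights = [1 / (rank + 1) ** exponent for rank in range(n_items)]
    return rng.choices(range(n_items), weights=weights, k=n_requests)


def lru_hit_ratio(requests: list[int], cache_size: int) -> float:
    """Fraction of requests served by an edge LRU cache instead of the backbone."""
    cache: OrderedDict[int, None] = OrderedDict()
    hits = 0
    for item in requests:
        if item in cache:
            hits += 1
            cache.move_to_end(item)        # mark as most recently used
        else:
            cache[item] = None             # fetched over the backbone, then stored
            if len(cache) > cache_size:
                cache.popitem(last=False)  # evict the least recently used item
    return hits / len(requests)


trace = zipf_requests(n_items=10_000, n_requests=100_000)
for size in (10, 100, 1_000):
    print(f"cache of {size} items: {lru_hit_ratio(trace, size):.0%} of requests stay local")
```

Because popularity is heavily skewed, a cache holding a small fraction of the catalogue can already absorb a large share of requests; that is the statistical effect the paragraph above refers to.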

Another possibility involves optimising the overall architecture: fixed/mobile convergence via next-generation points of presence (described previously on this blog), signalling, and the energy requirements of the Internet as a whole.

Finally, new transport capacity can still be rolled out by continuing to invest in new infrastructure and to lay new cables.

So, will the Internet come to an end in 2023?

Researchers worldwide seem to agree that the traditional single-mode fibres rolled out in our optical transport networks over the last few decades are getting close to their capacity limit. We are rapidly approaching this unavoidable limit, which will be about 75 Tbps per fibre.

Predictions of a (single and inevitable) ‘capacity crunch’ are premature, however, and it is unlikely that the Internet will collapse on a particular day in 2023.

In fact, the Internet was created to function without collapsing, and many solutions are being studied to overcome these limits.

In order to keep ahead of the growth in traffic, our companies and the public sector must continue investing in research, and researchers must continue using every means at their disposal, especially when it comes to developing networks that are significantly more energy efficient. The Internet has attained the status of a shared good that is so essential for humanity that it would be dangerous not to invest in making it sustainable.

Researching and finding technically and economically viable solutions in response to the traffic growth is a bit like playing Pacman: if you stop moving forward, the ghosts will catch up to you. Speaking of which, do you know about the strategies of Pacman ghosts?

Ecosystem:

SASER: a “hero experiment”

On 26 May 2015, the European SASER project, to which Orange researchers contribute, achieved a world first: 1 Tbit/s per “super-channel” over the 762 km of a Lyon-Marseille-Lyon round trip. This field trial used the most advanced transmission technologies: 32-QAM modulation, advanced soft-decision error-correcting codes and hybrid Raman/EDFA amplifiers. A super-channel uses 4 wavelengths, each of which, in this experiment, carried 2.5 times more data than the commercial equipment currently deployed.

Hybrid Raman/EDFA optical amplifiers
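The SASER figures can be checked with simple arithmetic: a 1 Tbit/s super-channel split over 4 wavelengths gives 250 Gbps per wavelength, i.e. 2.5 times the 100 Gbps of deployed systems. The sketch also shows the raw number of bits one symbol carries for a few M-QAM formats; the lower-order formats are included only for comparison, and these raw figures ignore the overhead of error-correcting codes, so they are not net data rates.

```python
SUPER_CHANNEL_BPS = 1e12   # 1 Tbit/s per super-channel (from the text)
WAVELENGTHS = 4            # wavelengths per super-channel (from the text)
DEPLOYED_BPS = 100e9       # deployed commercial rate per wavelength (from the text)

per_wavelength = SUPER_CHANNEL_BPS / WAVELENGTHS
print(f"Per wavelength: {per_wavelength / 1e9:.0f} Gbps")              # 250 Gbps
print(f"Gain over deployed systems: x{per_wavelength / DEPLOYED_BPS}")  # x2.5


def bits_per_symbol(qam_order: int) -> int:
    """Raw bits carried by one symbol of an M-QAM constellation (per polarisation)."""
    if qam_order < 2 or qam_order & (qam_order - 1):
        raise ValueError("QAM order must be a power of two")
    return qam_order.bit_length() - 1   # log2(M) for a power of two


# 32-QAM is the format used in the trial; 4-QAM and 16-QAM are for comparison.
for m in (4, 16, 32):
    print(f"{m}-QAM: {bits_per_symbol(m)} bits per symbol")
```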