Summary

Orange has been conducting considerable research on 3D sound for about 20 years now. 3D sound allows the sound stage to be deployed outside the smartphone’s screen, all around the user, thereby potentially enhancing their immersion. However, most phones only have one speaker, making it impossible to spatialise a sound, even minimally. The focus is instead on the headset, which allows the deployment of a 3D sound technology of its own: binaural rendering that simulates an impression of sound spatialisation.

An experiment conducted by Orange research teams suggests that, to deliver an audio-visual binaural experience, a person’s actual environment and distance from their phone do not matter. So, an app developer does not have to worry about the actual distance between user and phone, but simply has to place the user in the virtual space, where the phone’s camera would be. Binaural audio thus seems well suited to a consumer rollout on smartphones and could be a way of enhancing the user experience on mobiles.

Full Article

Binaural audio, a smartphone sound revolution?

3D sound provides an opportunity to deploy the sound stage outside the screen, all around the user, thereby potentially enhancing their immersion. However, this raises questions about how such a scene is perceived.

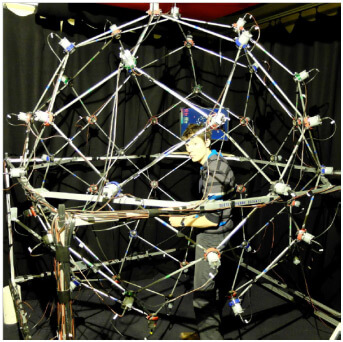

Orange has been conducting considerable research on 3D sound for about 20 years now. With the advent of smartphones in 2007, and the constant diversification and growth of the content consumed on them (films, music, video games, videoconferencing, etc.), audio-visual quality has been a key focus. Although screen resolutions have improved to match those of fixed devices (TVs, computer screens, etc.), the actual viewing window remains small, rarely measuring more than 16 cm diagonally.

3D sound is a general term for any sound-delivery system or technology that can position sound sources at different locations. Stereophony (nowadays simply called stereo) is the best-known such system: it uses two speakers fed by two sound channels, so a sound can be sent to either speaker or distributed between them to create the illusion that it is coming from a point in between. Other systems, such as 5.1, have also become popular in recent decades. Using five speakers (one in the centre front, two at the front sides and two at the rear sides) plus a dedicated low-frequency speaker (called a subwoofer), 5.1 can place sound sources horizontally all around the listener. By adding more speakers around, above and below the listener, you can multiply the possible source positions and deliver true surround sound, as with the SphereBedev system (see picture below), developed by the CNAM sound laboratory in Paris, which uses 50 speakers.

The CNAM Paris SphereBedev sound system with 50 speakers
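The phantom source that stereo creates between two speakers can be illustrated with a minimal amplitude-panning sketch. It assumes the common constant-power (sine/cosine) pan law, which is one standard choice among several; the names `constant_power_pan` and `pan_mono`, and the pan range from -1 (fully left) to +1 (fully right), are illustrative conventions rather than anything specified in this article.

```python
import math


def constant_power_pan(pan: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a pan position in [-1, 1].

    -1 sends the sound only to the left speaker, +1 only to the right,
    and 0 places a phantom source midway between the two speakers.
    The sine/cosine law keeps left_gain**2 + right_gain**2 == 1, so the
    total power stays constant as the source is moved.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1 and 1")
    # Map pan in [-1, 1] to an angle in [0, pi/2].
    theta = (pan + 1.0) * math.pi / 4.0
    return math.cos(theta), math.sin(theta)


def pan_mono(samples: list[float], pan: float) -> tuple[list[float], list[float]]:
    """Split a mono signal into left and right channels at the given pan position."""
    g_left, g_right = constant_power_pan(pan)
    return [g_left * s for s in samples], [g_right * s for s in samples]
```

At pan = 0 both gains equal √0.5 (about 0.707), so the sound is split evenly and appears to come from midway between the speakers; the constant-power law is used so that perceived loudness stays roughly steady as the source moves.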

The problem is somewhat different on smartphones. Most phones have only one speaker (a few rare models have two, but they are very close together), making it impossible to spatialise sound, even minimally. What is more, the many circumstances in which a phone is used (especially when the user is on the move) make connecting it to a set of external speakers impractical. This is why the preferred option is the headset, which works anywhere indoors or outdoors, whether the user is on public transport, moving or stationary. Not only do headsets restrict the listening area to the user alone (ensuring those around them are not disturbed), they also allow the deployment of a 3D technology of their own: binaural rendering.

Binaural audio, like stereo, needs two channels: one for the right ear and one for the left. It relies on a person’s morphological characteristics to create an impression of sound spatialisation [1]. Like stereo, binaural audio uses two localisation cues: the Interaural Time Difference (ITD) and the Interaural Level Difference (ILD). These two cues reflect the fact that a sound emitted from a given location does not reach both ears at the same time, or at the same level. ITD and ILD vary depending on the position of the sound source. However, binaural audio also takes advantage of another feature of human hearing. The outer ear consists of cavities and folds that resonate when a sound reaches it, helping the listener identify where the sound came from. These resonances modify the spectrum of the sound: once emitted, the sound arrives at the ear and is filtered in a way that depends on the direction it came from. Each direction is therefore associated with an ITD, an ILD and a filter. Binaural rendering uses this information to make a sound played through a headset appear to come from a chosen direction.
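As a rough illustration of how the ITD varies with the direction of the source, the sketch below uses Woodworth’s classic spherical-head approximation, ITD = (a / c)(θ + sin θ). This is a simplified textbook model chosen for illustration, not the method used by Orange: it treats the head as a rigid sphere, and the head radius (8.75 cm) and speed of sound (343 m/s) are typical assumed values rather than measurements. It only covers distant sources in the horizontal plane, from straight ahead (0°) to facing one ear (90°).

```python
import math

# Assumed typical values; real heads vary from person to person.
HEAD_RADIUS_M = 0.0875      # average adult head radius, in metres
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 degrees C


def woodworth_itd(azimuth_deg: float,
                  head_radius: float = HEAD_RADIUS_M,
                  c: float = SPEED_OF_SOUND_M_S) -> float:
    """Interaural time difference (seconds) for a distant source in the horizontal plane.

    Uses Woodworth's spherical-head formula ITD = (a / c) * (theta + sin(theta)),
    valid for azimuths between 0 (straight ahead) and 90 degrees (facing one ear).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("this simple form is only valid for 0-90 degrees")
    theta = math.radians(azimuth_deg)
    return head_radius / c * (theta + math.sin(theta))
```

With these assumed values, a source straight ahead gives an ITD of zero and a source facing one ear gives roughly 0.66 ms, which matches the intuition that the far ear is reached a fraction of a millisecond later.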

There are two ways of creating a binaural sound [2]. The first is simply to record a sound source with a binaural system and play it back over a headset. Small microphones are placed in a person’s ears, at the entrance of the ear canals, so that the recorded sound carries all the localisation cues: ITD, ILD and spectral filtering. You can also record a sound with an artificial head that houses a sophisticated recording system (see picture below). Note, though, that any recording made with a person or an artificial head is tailored to the ears used for the recording. A listener hearing a sound recorded through ears other than their own may be less accurate at localising the sources. How much accuracy is lost depends mainly on the listener’s morphology and on the direction the sound comes from.

Neumann artificial head – KU 100

The other solution is to create a binaural effect from scratch, starting from a monophonic sound and artificially applying the localisation cues that correspond to a given individual and a given spatial direction. This technique is called binaural synthesis. Its advantage is that it lets you build a fictional spatialised sound stage, particularly for video games or films, whereas binaural recording can only capture real sound stages. Its disadvantage is that the localisation cues have to be measured beforehand for each individual and for each spatial direction. This measurement process requires substantial preparation and equipment, and must be done in an anechoic room that is inaccessible to the general public (see picture below). However, once a person’s binaural profile has been measured, it can be reused for any sound production.

Orange Labs anechoic room in Lannion with a binaural recording system
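Binaural synthesis is commonly implemented by convolving the mono signal with a pair of head-related impulse responses (HRIRs), one per ear, measured for the desired direction; each pair bundles the ITD, ILD and spectral filtering for that direction. The sketch below shows only this convolution step, in plain Python. The function names are hypothetical, and the HRIRs are assumed to come from a measurement such as the one described above; a real application would typically load them from a measured dataset and use a fast (FFT-based) convolution.

```python
def convolve(signal: list[float], impulse_response: list[float]) -> list[float]:
    """Full linear convolution: output length is len(signal) + len(impulse_response) - 1."""
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


def binaural_synthesis(mono: list[float],
                       hrir_left: list[float],
                       hrir_right: list[float]) -> tuple[list[float], list[float]]:
    """Spatialise a mono signal by filtering it with the left- and right-ear HRIRs
    measured for one direction. Returns (left_channel, right_channel) for headset playback."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)
```

As a toy check, `hrir_left = [1.0]` and `hrir_right = [0.0, 0.0, 0.5]` encode only a two-sample delay and a halving of level at the right ear (an ITD and an ILD, with no spectral filtering), so the right channel comes out later and quieter, as it would for a source on the listener’s left.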

When building an audio-visual binaural sound stage, spatial consistency between audio and image is crucial. This is particularly so on mobiles, since the spatialised sound stage is perceived as an extension of what we see on the screen. The problem is thus one of perception, and can be put as follows: can a sound source be spatially separated from the visual object it corresponds to without breaking the semantic link between the two?

How human beings perceive audio-visual objects is not completely understood. Research has already revealed many effects specific to the association of a sound and an image. One of them, called the ventriloquist effect, directly affects our spatial perception: when two sources, one visual and one auditory, are in different places but close to each other, we perceive them as being in the same place [3]. That shared location is usually the position of the visual source, so the image exerts a spatial pull on the sound. The ventriloquist effect gets its name from ventriloquism, the art of creating the illusion that a voice is coming from a puppet’s mouth when it is actually coming from the puppeteer’s.

When binaural audio is used with a mobile screen, the extent of the ventriloquist effect becomes very important, as it gives a quantitative indication of how far a sound can be projected beyond the screen without losing its semantic association with the visual.

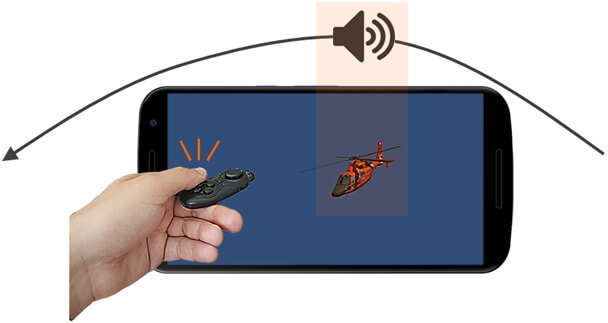

In technical terms, to measure the ventriloquist effect, we measure the audio-visual spatial integration window: for a given visual position, the set of sound positions at which the sound and image are perceived as coinciding. An experiment conducted at Orange measured this integration window under the specific conditions of binaural sound combined with a visual on a mobile phone [4]. Subjects looked at a still image on the phone screen while listening to a binaural sound that travelled along a horizontal circular path passing through the screen. They had to press a button when they perceived that the sound and image were spatially aligned (see graphic below). They gave this alignment for two types of sound trajectory: one from the periphery of their field of vision towards the centre, and one from the centre outwards to the periphery. The two alignment points obtained form the two boundaries of the integration window, which we assume encloses the visual, since we expect the subject to detect alignment slightly before the sound actually reaches the image [5].

Illustration of the experiment protocol: the subject presses the button when they perceive that the sound and image are aligned.
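The logic for turning the two kinds of responses into a window can be sketched as follows. This is an illustrative reconstruction, not the analysis code used in the study: it assumes azimuths in degrees on one side of the listener (0° straight ahead, increasing towards the periphery), simply averages the responses for each trajectory, and uses hypothetical names. Following the assumption stated above, the inward trajectory (periphery to centre) gives the outer boundary and the outward trajectory (centre to periphery) gives the inner boundary.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class IntegrationWindow:
    inner_deg: float  # boundary closest to the centre (0 degrees)
    outer_deg: float  # boundary closest to the periphery

    @property
    def width_deg(self) -> float:
        return self.outer_deg - self.inner_deg

    @property
    def centre_deg(self) -> float:
        return (self.inner_deg + self.outer_deg) / 2.0

    def contains(self, azimuth_deg: float) -> bool:
        """True if a position at this azimuth falls inside the window."""
        return self.inner_deg <= azimuth_deg <= self.outer_deg


def estimate_window(inward_responses_deg: list[float],
                    outward_responses_deg: list[float]) -> IntegrationWindow:
    """Estimate the audio-visual integration window from alignment presses.

    inward_responses_deg: azimuths at which the subject pressed while the sound
        moved from the periphery towards the centre (taken as the outer boundary).
    outward_responses_deg: azimuths recorded for the centre-to-periphery
        trajectory (taken as the inner boundary).
    """
    outer = mean(inward_responses_deg)
    inner = mean(outward_responses_deg)
    if inner > outer:
        raise ValueError("inner boundary lies beyond outer boundary; check trajectory labels")
    return IntegrationWindow(inner_deg=inner, outer_deg=outer)
```

Calling `contains` with the azimuth of the visual then tests the assumption that the window encloses it, and comparing `centre_deg` with that azimuth shows whether the window is centred on the visual.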

The protocol uses two types of audio-visual stimuli: one semantically consistent (an image of a helicopter with a rotor sound) and one semantically neutral (a bright halo with white noise). This makes it possible to determine whether semantics influences the integration window.

Another parameter was added to the experiment: the height of the sound path. In everyday smartphone use, we can reasonably assume that the user holds the phone in front of their face. However, it is impossible to know in advance how high the user will hold the phone, and this height may change during a single viewing session. It is therefore crucial to measure how a change in the elevation of the sound path affects the integration window. For this reason, two paths located above the user’s head were added to the two at phone level.

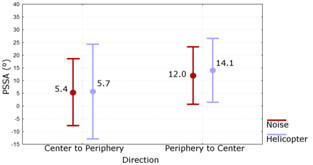

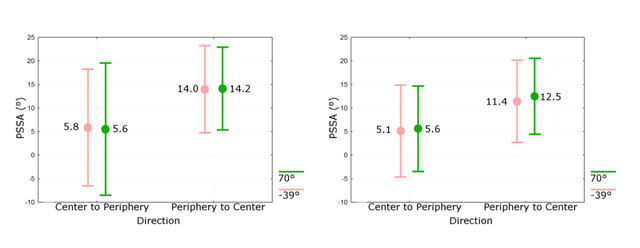

The results of this experiment show a number of things. In the three graphics below, the alignment point is plotted on the vertical axis as an azimuth angle in degrees, 0° being straight ahead of the user and 90° being the lateral position facing the user’s right ear. Firstly, the alignment point differs significantly depending on the type of trajectory (centre to periphery or periphery to centre): subjects respond as soon as the sound enters a zone in which sound and image are perceived as coinciding, which confirms the existence of an integration window. Secondly, this window is the same in all conditions: neither the semantic difference between the stimuli nor the elevation of the sound path affects it. On the one hand, the ventriloquist effect is therefore not affected by the type of stimulus; on the other, it seems strong enough in elevation to mask potential spatial discrepancies between sound and image, regardless of the height at which the user holds their smartphone.

Alignment point detected between sound and image, expressed in azimuth degrees, for each trajectory and each type of stimulus.

Alignment point detected between sound and image, expressed in azimuth degrees for each height (helicopter stimuli on the left, white noise and luminous halo on the right).

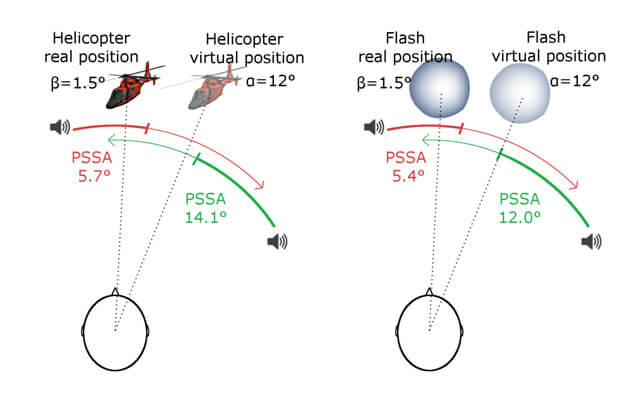

The last important result, contrary to our initial assumption, is that the integration window is not centred on the visual. As shown in the graphic below, the window is systematically shifted towards the periphery. However, it does include the position that the visual occupies in the virtual stage.

The integration windows for both stimuli are not centred on the actual position of the visual, but on its position in the virtual stage.

The virtual stage is the scene in which all the visual objects captured by a camera were positioned before being “flattened” and displayed on the screen. Think, for example, of a film scene with its backdrop, props and characters moving around: everything is recorded by a camera for later display on the screen. In the graphic below, the left part represents the virtual stage in which the visual is positioned. Here, the helicopter is offset from the camera’s axis by a horizontal angle alpha. The camera records the image through its own optics, which project the scene onto a flat image. This projection converts the angle alpha into a distance d between the helicopter and the centre of the screen. Finally, the subject on the right sees the displayed helicopter offset horizontally by an angle beta relative to the straight-ahead direction of his face.

Virtual stage transmission process, from its capture by camera to its reception by viewer
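The chain from alpha to d to beta can be made concrete with an ideal pinhole (rectilinear) camera and a flat screen viewed head-on. This is a simplification assumed for illustration: real lenses add distortion, and the camera field of view, screen width and viewing distance are hypothetical parameters, not values from the experiment. The image is assumed to fill the screen width, so a point at the edge of the camera’s horizontal field of view lands at the edge of the screen.

```python
import math


def screen_offset_m(alpha_deg: float, camera_hfov_deg: float, screen_width_m: float) -> float:
    """Horizontal distance d (metres) from the screen centre at which an object
    seen at angle alpha by an ideal pinhole camera is displayed.

    Rectilinear projection: image position is proportional to tan(alpha),
    scaled so that alpha = hfov / 2 maps to the screen edge.
    """
    half_fov = math.radians(camera_hfov_deg) / 2.0
    return (screen_width_m / 2.0) * math.tan(math.radians(alpha_deg)) / math.tan(half_fov)


def viewing_angle_deg(d_m: float, viewing_distance_m: float) -> float:
    """Angle beta at which the viewer sees a point displayed d metres from the
    centre of a screen placed straight ahead at the given distance."""
    return math.degrees(math.atan2(d_m, viewing_distance_m))


def alpha_to_beta(alpha_deg: float, camera_hfov_deg: float,
                  screen_width_m: float, viewing_distance_m: float) -> float:
    """Compose the two steps: camera angle alpha -> screen offset d -> viewing angle beta."""
    d = screen_offset_m(alpha_deg, camera_hfov_deg, screen_width_m)
    return viewing_angle_deg(d, viewing_distance_m)
```

For example, with a hypothetical 60° camera field of view, a 14 cm wide screen and a 35 cm viewing distance, an object at alpha = 20° is seen at a beta of only about 7°: the small screen compresses the virtual stage into a narrow angle in front of the viewer.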

To come back to the shift of the integration window, we interpret it as follows: the subject does not locate the helicopter at angle beta, even though that is the angle at which he physically sees it, but at angle alpha. In other words, the subject projects himself into the virtual stage, as if he were where the camera is. This result is very interesting, as it is a direct sign of the subject’s immersion. It also suggests that, when building an audio-visual stage with binaural audio, a person’s actual environment and distance from their phone do not matter.

To sum up, we can express all these findings from the app developer’s point of view. The experiment shows that an app developer does not have to worry about the actual distance between user and phone, but simply has to place the user in the virtual space, where the phone’s camera would be. Even if the developer wants to take the real space into account, the tolerance of the ventriloquist effect in elevation should absorb vertical movements of the screen.
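Seen from the developer’s side, this means the azimuth fed to the binaural renderer can be computed from the virtual scene alone, with the listener placed at the camera. The sketch below is a hypothetical helper, not an API from any particular engine: it assumes a top-down 2D scene (x to the right, y forward), a camera yaw in degrees measured clockwise from +y, and the azimuth convention used for the results above (0° straight ahead, +90° to the right). No real viewing distance appears anywhere in the computation.

```python
import math
from dataclasses import dataclass


@dataclass
class VirtualCamera:
    x: float        # camera position in the virtual scene (metres)
    y: float
    yaw_deg: float  # heading: 0 = facing +y, positive = turned to the right


def source_azimuth_deg(cam: VirtualCamera, sx: float, sy: float) -> float:
    """Azimuth of a sound source at (sx, sy) as heard from the virtual camera.

    Returns degrees in [-180, 180): 0 straight ahead, +90 to the right,
    -90 to the left. Only the virtual scene is used; the physical distance
    between the user and the phone plays no part.
    """
    dx, dy = sx - cam.x, sy - cam.y
    world_az = math.degrees(math.atan2(dx, dy))  # 0 along +y, +90 along +x
    relative = world_az - cam.yaw_deg
    # Wrap the angle into [-180, 180).
    return (relative + 180.0) % 360.0 - 180.0
```

The resulting azimuth would then be used to select the binaural filters for that direction, exactly as if the user were standing where the camera is.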

In conclusion, binaural technology for smartphones, despite the perception questions it initially raised, seems suitable for a mass rollout. It is a new technology, but one that relies on simple and inexpensive hardware: a headset or a pair of earphones, already available almost everywhere. It is an ideal candidate for improving the mobile experience beyond the visual, well ahead of the other external-sound solutions: adding speakers directly to the device (limited by size constraints) or using independent speaker systems (which restrict mobility, although they can work in specific static situations). That said, not all binaural issues have been resolved. While the approach is conceptually viable, what are the benefits of this technology once it is introduced into the many everyday contexts of smartphone use? Does it really enrich the user experience? This is precisely the question that the author of this article is studying in his thesis, the results of which will be published soon.

To learn more:

[1] An essential classic on the spatial perception of sound by human beings: Blauert, J. (1997). Spatial Hearing: The Psychophysics of Human Sound Localization. MIT Press.

[2] By the same author, a comprehensive overview of binaural technology: Blauert, J. (Ed.). (2013). The Technology of Binaural Listening. Springer Science & Business Media.

[3] Experimental evidence of the ventriloquist effect: Bertelson, P., & Radeau, M. (1981). Cross-modal bias and perceptual fusion with auditory-visual spatial discordance. Perception & Psychophysics, 29(6), 578-584.

[4] Our publication on the experiment: Moreira, J., Gros, L., Nicol, R., & Viaud-Delmon, I. (2018, October). Spatial Auditory-Visual Integration: The Case of Binaural Sound on a Smartphone. In Audio Engineering Society Convention 145. Audio Engineering Society.

[5] The publication in which this measurement protocol was first used: Lewald, J., Ehrenstein, W. H., & Guski, R. (2001). Spatio-temporal constraints for auditory-visual integration. Behavioural Brain Research, 121(1-2), 69-79.