1. Why SmartGrids will not be “Intelligent Networks”!

Using the seemingly synonymous phrase “Intelligent Network” in lieu of SmartGrids would ring a bell with anyone minimally versed in historical telecommunications culture. INs, as the acronym went, embodied well into the 1990s a wide-scale, concerted and ultimately forlorn attempt to perpetuate the centralized pre-internet telecommunications architecture. Driven by incumbent telcos and their equipment manufacturers, INs undertook to enrich and extend the repertoire of telecom services without upending the legacy architecture of the underlying networks, strictly confining the intelligence of these services within the telco-owned core of these networks. Any equipment connected to these networks had to remain a dumb “terminal” working in “slave” mode, with its configuration and features centrally controlled from the core of the Intelligent Network.

The architectural model of the Internet was, from the very start, radically at variance with the telco model. It was predicated on the so-called “end to end” principle, according to which applicative intelligence (on a par with what the telecommunications industry traditionally called services) should instead migrate from the core to the periphery of the network, i.e. to customer-premises equipment, devices and platforms. The network itself had to be as transparent and passive as possible, confining its role to connecting the devices which hosted the applications, whose configuration and control could be entirely transparent to the network. Upon the end of the IN era, a widely-echoed article could portend, half-provokingly, “the rise of the stupid network” as an escape from the Dark Ages of the Intelligent Network, by now destined for the waste heap of technological history…

On the current time scale of information technology, the era of these intelligent networks of yore may seem so remote as to deserve little more than a footnote. We have mentioned it here to draw a parallel between the recent evolution of ICT networks and the current evolution towards SmartGrids, centering on the key issue of decentralization and distribution of control. This historical parallel is enlightening, not least for preventing electrical operators from repeating the mistakes of pre-internet telcos by pointlessly attempting to counter a technological evolution that is likely as inescapable as biological evolution [KEL 10]. Through this endogenous and self-perpetuating process, the Internet did eventually win the evolutionary competition because it is more efficient, more robust and above all more scalable. A similar evolution is now at play for the electrical grid, and we attempt to elaborate on it in the rest of this chapter.

Smart Home Grid

The most natural environment for assessing new possibilities for distributed management of electricity (and energy in general) is where Internet-centric ICT is already the most widely deployed, namely in the home environment.

We propose to call “Smart Home Grid” the coming together of the Home Area Network (HAN) as we know it with the home electricity grid, comprising not only regular household appliances viewed as electrical loads (“white” and “brown” goods), but also energy generation and storage equipment available within a house, if any. One of our research papers describes how the HAN itself might be extended to integrate all these pieces of electrical equipment, encompassing even those which do not have a network connection. Exploiting the availability of general information on the house as a smart space (e.g. the presence or activities of the occupants of the house), the integrated and comprehensive management of all energy equipment in the house makes for a level of local optimization which goes beyond global optimization under grid-originating constraints, such as load shedding for peak consumption shaving. This optimization may first correspond to tapping unexploited energy efficiency resources existing within a house, understanding efficiency in a strict sense as the ratio between the output service and the input energy. These intrinsic efficiency gains may result from e.g. turning off or adjusting the heating, lighting or the stand-by state of domestic appliances by taking into account the presence or activity of the occupants of the house. Such optimization does not replace conventional efficiency measures (such as weatherizing the house) but complements them while requiring much less upfront investment, especially if the corresponding infrastructure is shared as we have suggested here.

In the longer term, expecting the generalization of distributed generation and a general evolution of the grid towards decentralization and asynchronous decoupling, local optimization will primarily address the use of loads in relation to the availability of power from local sources (typically renewable energy) or of energy stored locally. Grid sources would then be viewed as a secondary possibility, used when these local resources are not available, with both alternatives weighed according to the price or carbon footprint of remote energy.

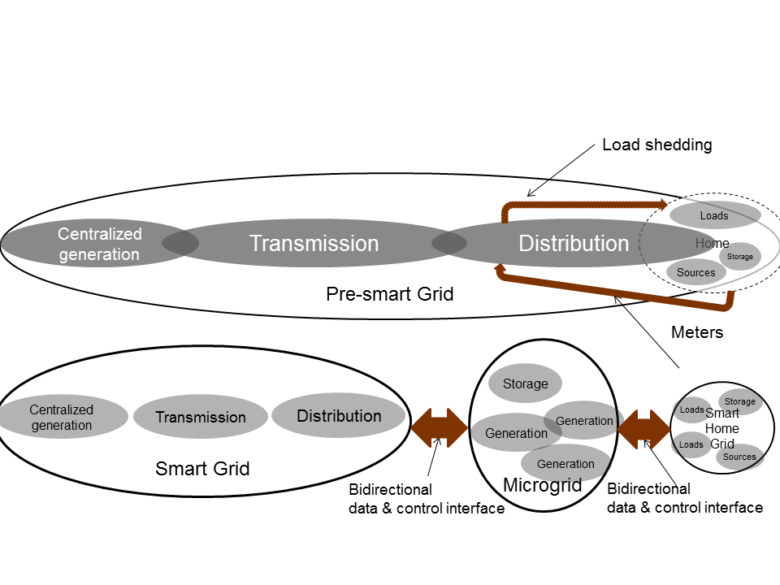

The diagram illustrates, in a very simplified view, the alternative between global optimization, typically limited to demand management, and local optimization exploiting a full-fledged Smart Home Grid. In the first case, the biggest loads in a home can be controlled directly by the distribution operator for demand shaping by load shedding[3]. This does in fact amount to extending the distribution grid (or at least its centralized “top-down” management) inside the home, down to the controlled equipment. In the second case, we have by contrast an autonomous level of control for the home and a clear separation between the distribution grid and the Smart Home Grid, with an interface which can be used to exchange the aggregate information required for interoperability between the Smart Home Grid and the global grid, while hiding from the grid information that is purely local to the house and leaving to the home level the choice of how to pass on the constraints arriving from the upper level. This last point is consistent with the principle of “separation of concerns”, universally adopted in the design of large-scale information systems, as it is essential to the scalability and robustness of these systems.

Separation of concerns & control autonomy for “Smart Home Grid”

Obviously local optimization and global optimization are not exclusive, and the management system for the Smart Home Grid can and should take into account the global optimization constraints originating from the distribution grid together with its own local constraints. In this way, the local home energy management system brings to bear all the local information it has to adapt to these constraints in a way that is best for the home user: thus, if a request for load shedding is transmitted from the grid down to the interface of the home energy management system, it can be applied to the device for which this has the least impact according to the present local context (e.g. activities taking place inside the home), and its application adjusted according to available storage resources, whereas a centralized system would directly shed the equipment that it controls with a systematic and context-blind policy.
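
The contrast drawn above can be made concrete with a minimal sketch. Everything in it is an assumption made for illustration: the device names, the power ratings, the “impact” scores standing in for local context, and the greedy selection rule are hypothetical and do not describe any actual home energy management system. The local policy first draws on available storage, then sheds the loads whose interruption matters least at the moment; the centralized policy sheds whatever device the operator happens to control.

```python
from dataclasses import dataclass

@dataclass
class Load:
    name: str
    power_w: float  # power currently drawn, in watts
    impact: float   # hypothetical discomfort score in the present context (lower = easier to shed)

def local_shedding(loads, request_w, storage_w=0.0):
    """Greedy local policy: cover the request from storage first,
    then shed the least-impact loads until the request is met."""
    remaining = max(0.0, request_w - storage_w)
    shed = []
    for load in sorted(loads, key=lambda item: item.impact):
        if remaining <= 0:
            break
        shed.append(load.name)
        remaining -= load.power_w
    return shed

def centralized_shedding(loads, controlled_name):
    """Context-blind policy: always shed the one device the operator controls."""
    return [controlled_name]

# Hypothetical evening situation inside the home.
loads = [
    Load("water_heater", 2000.0, impact=0.8),  # someone is about to shower
    Load("ev_charger", 3000.0, impact=0.1),    # car not needed until morning
    Load("oven", 2500.0, impact=0.9),          # dinner is cooking
]

print(local_shedding(loads, 2500.0))                    # context-aware choice
print(local_shedding(loads, 2500.0, storage_w=3000.0))  # storage absorbs the request
print(centralized_shedding(loads, "water_heater"))      # operator-controlled device
```

Only the aggregate request (a number of watts) crosses the interface with the grid in this sketch; the occupants' activities stay local to the house, in line with the separation of concerns described above.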

From the home to microgrids, towards the autonomous control of subnetworks

Starting from the Smart Home Grid defined as a semi-autonomous subnetwork at house level, we can define similar subnetworks on larger, possibly nested, scales, like buildings, districts or cities. Some degree of control autonomy for such microgrids may already exist for some non-residential buildings, and to a lesser extent for districts. It is mandatory for electrical grids such as those of islands, or in developing countries where the electrical grid is not fully connected. The term “microgrid”, implying at least partial control autonomy and minimum local balancing between loads, sources and storage, is used especially for districts, and it has already been widely studied, even if mostly deployed in pilot projects so far.

The decentralization of control afforded by these different solutions will probably spread only very gradually, in a way similar to how it took place for telecommunication networks. The decentralization process would thus operate itself in a … decentralized way, starting from small peripheral subnetworks for which safety issues are not currently perceived as too critical, and migrating from there to the core.

Control models could themselves evolve from partial centralization, with a single controller managing each of these intermediary levels, towards more radical solutions using multi-agent systems, where each entity connected to the network is represented by a software agent acting as a peer in the global optimization process by exchanging its own constraints with other agents, all arriving at a solution satisfying (generally sub-optimally) all of the constraints without any central controller. These solutions, which have been widely studied in research projects, are the ultimate in decentralized control. In an even longer-term and more radical vision, the exchanges between agents would not be limited to mutual optimization constraints, but would serve as support for auctions for quotas of electrical energy that would be bought or sold by software agents acting on behalf of loads or sources, respectively. The granularity of these auctions might be temporally as fine as the fluctuation of conditions on both sides would warrant, with a limit possibly placed by the reluctance of human agents to relinquish such total control of their energy budget to software agents, rather than to a utility…
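
As an illustration of what such an auction between agents might look like, here is a minimal sketch of a double auction in which each order concerns a single energy quota. The text names no particular auction mechanism, so the clearing rule used here (match the highest bids with the lowest asks, and set one price at the midpoint of the last matched pair) is an assumption, as are the agent names and prices.

```python
def clear_auction(bids, asks):
    """Match buyers and sellers of single energy quotas.

    bids: list of (load_agent, max_price) pairs
    asks: list of (source_agent, min_price) pairs
    Returns (trades, clearing_price); the price is None if nothing trades.
    """
    bids = sorted(bids, key=lambda bid: bid[1], reverse=True)  # most eager buyers first
    asks = sorted(asks, key=lambda ask: ask[1])                # cheapest sellers first
    trades = []
    price = None
    for (buyer, bid), (seller, ask) in zip(bids, asks):
        if bid < ask:
            break  # no further mutually acceptable pair
        trades.append((buyer, seller))
        price = (bid + ask) / 2  # midpoint of the marginal pair (assumed rule)
    return trades, price

# Hypothetical agents acting on behalf of loads and sources.
bids = [("heater", 12), ("fridge", 20), ("washer", 8)]
asks = [("pv_roof", 5), ("battery", 10), ("grid", 15)]

trades, price = clear_auction(bids, asks)
print(trades, price)
```

Here the washer's agent is simply left unserved for this round and could bid again in the next one, which is where the temporal granularity mentioned above comes into play.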

2. Interoperability and connectivity, when the grid is an inspiration for information technologies

By a strangely paradoxical twist of technological history, at the very time when information technologies claim to revolutionize the electrical grid, they do, under their most recent trend, utility computing, borrow their inspiration from the “pre-smart” electrical grid, that relic from the 20th century…

Without delving here into the differences between “grid computing”, “cloud computing” and “utility computing[4]”, the commonality between these trends is the availability of information processing capacity which is as transparent and universal as the availability of electric power via the good old electrical grid: just plug into the information grid as you plug an appliance into a wall socket, and it works… This idea implies, among other things, the availability of an informational connectivity interface that should be as simple and universal as that of an electrical outlet, making it possible to tap into remote computing and storage resources as electrical equipment taps into remotely generated electrical energy.

But if connectivity was indeed simple and universal for the traditional grid[5], it becomes much less obvious for SmartGrids, precisely because they inherit the multiple layers of connectivity inherent in data networks, and the ensuing difficulty of being compatible and interoperable at all these levels. We attempt to explain how the connectivity of SmartGrids involves much more than simply connecting two electrical wires, and how the notions of connectivity inherited from complex information system models must now be brought to bear.

Avatars of connectivity, when moving up from the physical layer to information models

It is interesting to start from the example of the Smart Home Grid described in the previous section to illustrate what the notion of connectivity can imply when transitioning to SmartGrids.

The connection to the Smart Home Grid of a device, for example a household appliance, will result in this device being “recognized”, that is to say individually identified and represented as such in an information system, in order at least to provide an interface which will allow a software application to monitor and control it via the data network which is an integral part of the Smart Home Grid. This “plug and play” mechanism has become quite commonplace in information technology, generalizing from the connection of computer peripherals to the connection of devices to a network. Different protocols normally ensure the automaticity of this recognition of new equipment by data networks, but numerous difficulties arise in practice due to the heterogeneity and diversity of the equipment and the networks, the non-universality of the protocols and the superimposed protocol layers which are involved in this connectivity, all the layers having to inter-operate at their level so that connectivity is ensured. High-level infrastructures such as DPWS or UPnP are supposed to take care of heterogeneous lower levels, but can in fact only recognize equipment that they already know.

Without going into the detail of these protocols and infrastructures, we can roughly differentiate between lower and higher levels of interoperability. At the lower levels the correspondence is syntactic in nature, that is to say it is based on the exact recognition of sequences conformant to the syntax[6] of a standard protocol. Higher levels of interoperability are, by contrast, of a different nature, requiring correspondence between matching concepts on a semantic level by incorporating the formal meaning attached to syntactic units mapped from the lower levels. To give an example that will make these very abstract notions more concrete, the connection of a printer, ensured by a “plug and play” mechanism, to a “Smart Home Grid” whose informational layer includes a computer network can extend to the exchange of information on a semantic level defining this equipment as a printer, with the characteristics and specific functions that it provides. Even if these characteristics are not provided by the printer with exactly the syntax that the network expects, correspondence on a semantic level can eventually be made with the information expected by the network, as long as a number of common concepts are shared, or are reconcilable by semantic approximation if they are not shared directly. Semantic interoperability is therefore a means of resolving “top down” a syntactic mismatch at a lower level[7]. An energy management application will itself be able to rely on a shared ontology[8] of the general knowledge associated with the concept of printer defined at this level of semantic generalization (independently of a specific commercial model, but with potential variations corresponding to a laser printer, inkjet, etc.) in order to know what it is possible or forbidden to do with a printer (for example, do not put it in standby or turn it off while it is executing a print job, except in an emergency).
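
The printer example can be sketched in a few lines of code. The synonym tables below are a deliberately crude, hypothetical stand-in for a shared ontology, and the field names of the device description are invented for the illustration; real infrastructures such as UPnP or DPWS describe devices very differently. The point is only that two descriptions which do not match syntactically can still be reconciled through shared concepts, after which a generic rule attached to the concept “printer” can be applied.

```python
# Hypothetical vocabulary: each shared concept lists the terms that map to it.
DEVICE_CONCEPTS = {
    "printer": {"printer", "laserprinter", "inkjet", "imprimante"},
}
STATE_CONCEPTS = {
    "busy": {"printing", "job_active", "busy"},
    "idle": {"idle", "ready", "standby"},
}

def to_concept(term, table):
    """Map a vendor-specific term to a shared concept, or None if unknown."""
    term = term.strip().lower()
    for concept, synonyms in table.items():
        if term in synonyms:
            return concept
    return None

def may_power_down(description, emergency=False):
    """Rule attached to the concept 'printer': never cut power during a
    print job, except in an emergency. Returns None for unknown equipment."""
    kind = to_concept(description.get("type", ""), DEVICE_CONCEPTS)
    state = to_concept(description.get("state", ""), STATE_CONCEPTS)
    if kind != "printer":
        return None  # this sketch holds no knowledge about other concepts
    return emergency or state == "idle"

# Two printers advertising themselves with different vocabularies.
print(may_power_down({"type": "LaserPrinter", "state": "job_active"}))
print(may_power_down({"type": "Inkjet", "state": "Ready"}))
```

An energy management application written against the concept rather than against a vendor's vocabulary does not need to change when a new printer model joins the network, provided its terms can be mapped.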

As abstract and remote from electricity as these notions may seem, the community of electrical engineers has already acknowledged their importance by standardizing a Common Information Model[9], which is a semantic definition[10] of the equipment that can be connected to the electrical distribution and transmission grids. It is meant to ensure connectivity up to the highest levels as defined here, which effectively becomes relevant for SmartGrids.

3. Forsaking the clock

The third aspect of the informational transformation of electrical grids is the least commonly perceived and certainly the most deeply disruptive in the long term. It is very insightful to draw once again upon the analogy with pre-internet telecommunication networks, which, like traditional electrical grids, were essentially synchronous, compared to the dominantly asynchronous model of post-internet networks.[11] This synchronism/asynchronism duality takes very different meanings and applies in very different ways depending on the level to which it refers. We explain it here, starting from how it applies to data networks in order to show how it could apply to SmartGrids.

Synchronisms

A key distinction has to be recalled here between absolute synchronism, which refers to the alignment of separate processes, or sub-sets of a system, on a common clock providing a shared time reference, and relative synchronism, which refers to the need for separate processes to have a “temporal rendezvous” in order to work together, typically for exchanging data. The first type of synchronism is rather characteristic of the lowest layers of data networks or electronic circuits, even if asynchronous (that is to say devoid of a shared clock) alternatives can also be used at these levels, whereas relative synchronism may often instead apply to upper layers. To illustrate both notions at the application level, legacy telecommunication applications such as telephone voice calls and television are synchronous, in the relative sense for the first and absolute for the second, whereas typical internet-era applications which have come to displace these two, respectively messaging and video on demand, are by contrast asynchronous in matching senses.

Leaving aside the upheaval of long-lived habits that these transformations entail for users, the generalization of asynchronism has had economic and technical implications which have been instrumental in accelerating the migration of legacy telecommunications infrastructures to the internet: asynchronism is generally more efficient and allows a better use of infrastructure because it requires provisioning it for the average rather than for the worst case. The combination of different levels of asynchronism (e.g. at both the IP layer and the application layer) has a cumulative effect on this efficiency advantage. To take a simple example at the application layer, a synchronous application such as a telephone voice call on a legacy circuit-switched network requires the end to end reservation of resources (the “circuits[12]”) for the whole duration of the call. The bandwidth of the network must be proportional to the number of subscribers to the network weighted by their likelihood of placing calls simultaneously (corresponding to the peak load). A new call will simply be rejected if all of the available circuit bandwidth is used. An asynchronous messaging application can by contrast average out these peaks statistically by spreading the transmission over time, and a message will be delayed, rather than rejected, if the network is saturated. The advantages of asynchronism in the lower layers exist even for a synchronous application such as telephony, if it uses an asynchronous IP network (which is the default case today since circuit switching has all but disappeared): for a regular voice call which comprises numerous blanks during which no information need be transmitted, an asynchronous network can statistically multiplex several conversations sharing a bandwidth which will be less than the sum total of the circuit bandwidths that would have been reserved in a synchronous network.
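
The gain from statistical multiplexing can be given a rough order of magnitude with a small calculation. This is a sketch under stated assumptions, not a dimensioning method: each conversation is modelled as independently “active” with a fixed probability (a simple binomial model), and the activity ratio and tolerated overflow probability used in the example are illustrative values that do not come from the text.

```python
from math import comb

def circuits_needed(n_calls):
    """Circuit switching reserves one full circuit per ongoing call."""
    return n_calls

def multiplexed_capacity(n_calls, p_active, overflow_prob):
    """Smallest capacity c (in circuit-equivalents) such that the probability
    of more than c conversations being active at once is at most overflow_prob,
    assuming independent talkers (binomial model)."""
    cumulative = 0.0
    for c in range(n_calls + 1):
        cumulative += comb(n_calls, c) * p_active**c * (1 - p_active)**(n_calls - c)
        if 1.0 - cumulative <= overflow_prob:
            return c
    return n_calls

# Illustrative values: 100 simultaneous calls, each actually carrying
# speech 40% of the time, overflow tolerated 1% of the time.
n = 100
print(circuits_needed(n), multiplexed_capacity(n, 0.4, 0.01))
```

The multiplexed capacity sits between the average load (40 circuit-equivalents with these values) and the worst case (100), which is precisely the difference between provisioning for the average and provisioning for the peak.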

From asynchronous data to asynchronous electricity

The synchronism (in the absolute sense) of microelectronic circuits and lower layers of data networks has a direct analogue for electrical grids, which is the frequency synchronization of AC circuits. In both cases the need to maintain this synchronization imposes constraints which are often difficult to meet. The benefits of asynchronous solutions are not, however, decisive enough at this level for the lower layers of data networks or microelectronic circuits to have adopted them. As for electrical circuits, the historical advantages of AC compared to DC are apparently no longer as universal and decisive as they were at the time of the “war of currents”, so that mixed solutions are widely envisioned, and not only at scales where the better transmission efficiency of DC lines is crucial.

An intriguingly radical solution has been proposed [ABE 11] for an electrical grid architecture to generalize asynchronism at this level. This solution relies on dividing up the network into temporally decoupled cells between which frequency synchronization is no longer required, since the electrical interfaces between these cells systematically use an AC-DC-AC conversion. Contrary to what is the case for information networks, it is difficult to have asynchronous operation at higher levels without this low-level decoupling; the main justification for this solution thus seems to lie in supporting asynchronism at higher levels and decentralization of control at the same scale.

From data packets to energy packets

At the level of energy exchange proper, we are concerned with relative rather than absolute asynchronism, and the electrical grid is still massively and heavily synchronous, since it requires at any time an exact balancing between sources and loads, between generation and consumption, an equilibrium set by the mechanisms of impedance fluctuation, the failure of which can cascade into a blackout.

As is the case for traditional telecommunications networks, the costs imposed by this constraint come from the required provisioning of both transmission and generation capabilities corresponding to the highest peak in aggregate demand.

The solutions which have long been used to mitigate the costs imposed by this constraint consist in partially “managing” demand (selective shifting or shedding of loads) and in having reserve generation capabilities used exclusively to meet peak demand requirements. Among these, centralized reserve storage (so-called “grid energy storage”), such as pumped-storage hydroelectric plants[13], makes it possible to use excess energy from off-peak hours (an excess itself linked to the availability of non-variable-capacity and low-marginal-cost generation facilities). These solutions remain within the bounds of a globally synchronous and centralized grid. Generalized distributed storage of electricity would be, if it were possible, the only means to temporally decouple generation and consumption, achieving true asynchronism: grid energy storage is a very partial and imperfect substitute, and not only because of the transmission loss that its centralization entails.

Taking inspiration again from the evolution of data networks and pushing to this level the desynchronization-decentralization model previously mentioned, one can envision, as already proposed in the research literature, a radical solution where cells decoupled at the physical level would also be desynchronized in terms of generation and consumption by the generalization of massive distributed storage capacity within each of the cells. Electricity would no longer be transmitted as a unidirectional synchronous flow from source to load, like continuous-time signals transmitted on traditional telecommunication networks. It would be sliced into quotas transmitted as energy packets from one cell to another, bi-directionally and asynchronously. In this (very long term…) vision each cell would be equipped with energy routing capabilities inspired by the routing of IP packets on the internet: each packet, being tagged with its destination address, could be routed through several cells from its origin to its destination. The granularity of the proposed cells is potentially very variable, and they could range from the size of a country to that of a house, with a possible nesting of several scales of cells within one another. It is clear that for very large cell sizes such as a country this vision is not a huge departure from the already existing loose coupling of grids between neighboring countries. The really radical innovation would reside in generalizing fine-grain cells, which would be associated naturally with the evolution described earlier towards decentralization, each of these cells being endowed with a measure of autonomous control. In the distributed multi-agent-based model previously mentioned, agents controlling each of these cells would automatically negotiate in real time the transfer of energy quotas in order to satisfy their instantaneous local balance between capacities of generation, storage and consumption.
This massive decentralization model would allow, at the cost of no less massive distributed storage capacities, a reduction in peak generation capacity by relaxing the worst case provisioning constraint. But its most decisive benefits are in providing better robustness against the risk of cascading failures and supporting the generalization of inherently distributed renewable generation capabilities.
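
To make the analogy with packet routing more tangible, here is a minimal sketch of an energy packet travelling between cells. It is purely illustrative and rests on several assumptions: the cell names and topology are hypothetical, the route is simply the one with the fewest hops (found by breadth-first search), conversion and transmission losses are ignored, and the transfer is accounted for as a plain debit and credit of each cell's local storage.

```python
from collections import deque

def route(links, origin, destination):
    """Breadth-first search for a fewest-hops path between two cells."""
    previous = {origin: None}
    queue = deque([origin])
    while queue:
        cell = queue.popleft()
        if cell == destination:
            path = []
            while cell is not None:
                path.append(cell)
                cell = previous[cell]
            return path[::-1]
        for neighbour in links.get(cell, ()):
            if neighbour not in previous:
                previous[neighbour] = cell
                queue.append(neighbour)
    return None  # destination unreachable

def deliver(packet, links, stored_kwh):
    """Move one energy packet from the storage of its origin cell to the
    storage of its destination cell. Returns the path taken, or None if the
    packet cannot be sent. Losses are ignored in this sketch."""
    path = route(links, packet["origin"], packet["destination"])
    if path is None or stored_kwh[packet["origin"]] < packet["kwh"]:
        return None
    stored_kwh[packet["origin"]] -= packet["kwh"]
    stored_kwh[packet["destination"]] += packet["kwh"]
    return path

# Hypothetical nested topology: houses within districts within a city.
links = {
    "house_a": ["district_1"],
    "house_b": ["district_1"],
    "district_1": ["house_a", "house_b", "city"],
    "city": ["district_1", "district_2"],
    "district_2": ["city", "house_c"],
    "house_c": ["district_2"],
}
stored_kwh = {"house_a": 5.0, "house_c": 1.0}
packet = {"origin": "house_a", "destination": "house_c", "kwh": 2.0}

path = deliver(packet, links, stored_kwh)
print(path, stored_kwh)
```

Because the packet is drawn from storage at one end and credited to storage at the other, neither cell needs to generate or consume at the moment of the transfer, which is the temporal decoupling that the distributed storage is meant to buy.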


[1] A systemic disruption corresponds to a multipronged revolution involving a set of interrelated technologies whose effects potentiate each other, in a sense close to what Schumpeter called an innovation cluster, well before disruptive innovation became, after Clayton Christensen, one of the most hackneyed phrases in management lore

[2] Oft-mentioned examples, like online music or e-books, involve well-defined markets and single technologies (piggybacking on a previous disruption like the Internet in both these cases) rather than an entire technological system that involves multiple technologies and market relationships

[3] Millions of domestic water heaters in France were thus, well before SmartGrids became a buzzword, remotely controlled by the operator, using analog powerline communication technology.

[4] See “The Big Switch” by Nick Carr (listed under “further reading”) for a wide historical perspective which draws a parallel between the epoch-making progress opened up by the general availability of the electrical grid from “utilities”, and the progress and risks to be expected from “utility computing”, a perspective which can be prolonged through the paradox mentioned earlier.

[5] Leaving aside the adjustments in voltage, frequency, physical size and shape of the plugs which are necessary when moving from one country to another (adaptations which remain very simple to understand, even for non-technically minded people), the interface was effectively universal, at least within a country or an area such as Europe.

[6] The syntax in question is a set of rules defining a correct sequence of signs (symbols of an alphabet) as it conforms to a protocol, which is in fact a simple case of formal language

[7] It assumes however, that the coding and the representation of semantic information are themselves shared, which is generally the case today with XML-based representations.

[8] An ontology includes, in information sciences, the formal definition of all basic semantic entities of a domain (here the technical domain of equipment connected to the electrical grid) and their inter-relations and relevant characteristic properties

[9] Common Information Model (CIM) defined in the standards IEC 61970-301 for transmission and IEC 61968-11 for distribution

[10] Formally an ontology defined in UML, or RDF/XML

[11] Referring to the dominant model in each networking paradigm, this statement is not contradictory with the existence of synchronous layers (in the absolute sense like SONET or relative like TCP) in internet-era networks and asynchronous layers such as ATM in the pre-internet networks

[12] Note (for non-telecommunications-specialists) that even for legacy circuit-switched telecommunications, these “circuits” had already for a long time been virtual and did not correspond to a physically-connected end to end circuit, even if it did involve the reservation of resources for the entire duration over which the circuit was kept “open”, regardless of the data which was actually transmitted (blanks in conversations were not exploited to save bandwidth). This did also in principle justify the duration-based pricing which was also characteristic of old-school telecommunications

[13] These are reversible hydraulic generators operating between two paired reservoirs, which make it possible to store energy, with an excellent efficiency (in the range of 70-80%), in the form of the potential energy of water pumped from the lower reservoir to the upper reservoir

[14] www.future-internet.eu

[15] The general public did not have an urgent need for the Web or peer to peer networks before 1994, when no one had any idea that all this was even possible!

[16] Placing thus a quantitative tally on the qualitative difference between a joule of low-temperature heat and a joule of electricity