Summary

“Fog Computing” is generating growing interest in the Cloud Computing sector. In 2011, Cisco provided one of its first definitions: “Fog Computing is a highly virtualised platform that provides compute, storage, and networking services between end devices and traditional Cloud Computing data centres.” The metaphor builds on the image of fog as “a cloud close to the ground”.

The Internet of Things (IoT) is presented as the main motivation for Fog Computing. The expected benefits are lower latency, a higher-quality user experience, and less traffic in core networks, as well as better security (sensitive data kept local, an attack surface spread across many sites) and resilience (no single point of failure).

Orange’s researchers are investigating these topics in partnership, notably with Inria (the French national institute for computer science and automation), around the Discovery initiative (an action of the Orange-Inria shared laboratory, IOLab). Discovery aims to design a distributed resource management system that takes better account of the geographic dispersion of “devices” (users’ mobile terminals, IoT sensors/actuators, etc.).

Full Article

Cisco Fog Computing = Cloud + IoT

Fog Computing is attracting growing interest in the Cloud Computing and Internet of Things (IoT) arenas. Many articles commenting on Cisco’s vision, including one from the Wall Street Journal that is often treated as a primary source, have sparked numerous online discussions and debates. Scientific events (e.g. FOG, ManFog) are also appearing in the research community.

Cisco’s original vision was unveiled in a keynote speech at an international symposium in 2011, and further developed in conference articles from Cisco research.

The metaphor comes from the fact that “fog is the cloud close to the ground”.

Many applications benefit from the “traditional cloud” and the economies of scale that come from aggregating massive amounts of equipment in mega data centres. This is not the case, however, for many other applications: those that require low and predictable latency, mobility support and location awareness, or that are widely distributed geographically, with predominantly wireless access and variable connectivity.

IoT applications exhibit these characteristics, which is why Cisco presents IoT as the main business driver for Fog Computing, with connected vehicles, smart grids, and wireless sensor and actuator networks as typical applications.

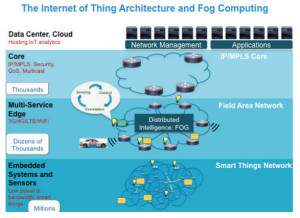

The reference architecture proposed by Cisco (see figure above) illustrates the predominant role of IoT in the development of Fog Computing. The Fog layer is responsible for collecting data generated by sensors and devices, processing that data locally, and then issuing control commands to actuators. The Fog layer can also filter and aggregate that data and send it further up the network, possibly to a remote data centre, for batch processing and analysis (Big Data).
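The collect, process, actuate and aggregate roles described above can be illustrated with a minimal Python sketch. This is not Cisco's implementation: the `FogNode` class, the `threshold` and `batch_size` values, and the `cool_down` command are all hypothetical names chosen for illustration, and the `uploads` list merely stands in for a remote data centre.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass
class FogNode:
    """Hypothetical fog node; every name and value here is illustrative."""
    threshold: float = 30.0   # assumed limit above which an actuator is triggered
    batch_size: int = 3       # assumed number of readings summarised per upload
    buffer: list = field(default_factory=list)
    uploads: list = field(default_factory=list)  # stands in for the remote data centre

    def on_reading(self, sensor_id: str, value: float) -> Optional[str]:
        """Handle one sensor reading locally; return an actuator command, if any."""
        self.buffer.append(value)
        # Aggregate: send only a summary upstream once enough readings accumulate.
        if len(self.buffer) >= self.batch_size:
            self.uploads.append({"count": len(self.buffer), "mean": mean(self.buffer)})
            self.buffer.clear()
        # Local control loop: react without a round trip to the cloud.
        if value > self.threshold:
            return f"{sensor_id}:cool_down"
        return None


node = FogNode()
commands = [node.on_reading("temp-1", v) for v in (21.0, 33.0, 24.0, 22.0)]
print(commands)      # [None, 'temp-1:cool_down', None, None]
print(node.uploads)  # [{'count': 3, 'mean': 26.0}]
```

In this sketch, four raw readings produce one actuator command issued locally and a single summary record sent upstream, which is the traffic reduction the architecture is aiming for.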

“Fog could take a burden off the network. As 50 billion objects become connected worldwide by 2020, it will not make sense to handle everything in the cloud. Distributed apps and edge-computing devices need distributed resources. Fog brings computation to the data. Low-power devices, close to the edge of the network, can deliver real-time response,” explains Rodolfo Milito of Cisco.

Other geo-distributed cloud initiatives

NTT is developing an Edge Computing concept “which can reduce the cloud application response time by factor of 100 at most, demonstrated in its first research outcome, the Edge accelerated Web platform prototype”. The idea, very similar to Cisco’s, is to place small servers in the vicinity of users and devices so as to avoid sending the entire load to remote data centres. NTT lists the applications that stand to benefit most from this vision: smart cities/buildings, M2M, medical, gaming, and speech/image recognition.

AT&T Labs Research is developing a vision of the distributed cloud, called Cloud 2.0, that resembles Fog Computing: “Given personal devices’ growing computation capabilities, researchers have proposed new hybrid architectures aimed at balancing local computations versus remote offloading towards more flexible solutions. Cloud 2.0 elaborates on this principle by extending traditional cloud capabilities to promote local computation when possible”. The main idea is to favour the local storage and computation capabilities provided by a device or a federation of devices (e.g. a combination of mobile devices, set-top boxes, PCs and laptops) through inter-device cooperation. This is much in line with the “Device-to-Device” (D2D) approach, in which users’ devices are not just network end points but parts of the network with communication (forwarding) capabilities. Remote storage and computation stay in the traditional cloud for applications that cannot rely on local resources alone, and for data and computations that are relevant to, and accessed by, distant users and devices.
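The trade-off between local computation and remote offloading can be made concrete with a simple cost model. The sketch below is not taken from the Cloud 2.0 article; it is a common back-of-the-envelope heuristic, and the function name, its parameters, and the sample figures are all assumptions made for illustration. It compares the estimated time to run a task on the device with the time to ship its input to a remote data centre and run it there.

```python
def should_run_locally(cycles, data_bytes, local_hz, remote_hz, uplink_bps, rtt_s):
    """Return True when running on the device is estimated to be at least as fast.

    All parameters are illustrative assumptions:
      cycles      CPU cycles the task needs
      data_bytes  input data that would have to be uploaded
      local_hz    device clock rate, remote_hz: data-centre clock rate
      uplink_bps  uplink bandwidth in bits per second
      rtt_s       network round-trip time in seconds
    """
    local_time = cycles / local_hz
    # Offloading pays for the round trip, the upload, and the remote execution.
    remote_time = rtt_s + (data_bytes * 8) / uplink_bps + cycles / remote_hz
    return local_time <= remote_time


# Data-heavy, compute-light task: uploading 50 MB dominates, so stay local.
print(should_run_locally(1e9, 50e6, 1e9, 1e10, 10e6, 0.05))

# Compute-heavy task with a tiny input: the faster remote CPU wins.
print(should_run_locally(1e11, 1e3, 1e9, 1e10, 10e6, 0.05))
```

A real scheduler would also weigh energy, privacy, and whether distant users need the result, as the paragraph above notes; this sketch only captures the latency side of the decision.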

Intel is working on Cloud Computing at the Edge, which targets the distribution of cloud data storage and processing inside Radio Access Network (RAN) elements. Intel currently has trials under way with several companies. Saguna Networks, for instance, is optimising content delivery by pushing content caching into the base station, small cell or aggregator.

IBM and Nokia Siemens Networks have announced a collaboration to build a similar initiative…

What Orange Labs is doing

The main benefits expected from Fog Computing are lower latency, better Quality of Experience for users, and less traffic in core networks. Other expected benefits include greater security (sensitive data kept local, an attack surface spread across many sites), resilience (no single point of failure), and easier scalability (it is easier to add a mini data centre at the edge than to build a brand new mega data centre). These arguments appeal to telecom operators, which face an explosion of traffic and are often blamed by users for poor Quality of Experience.

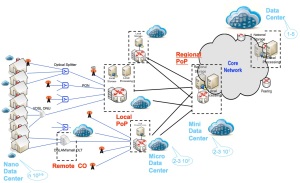

Another opportunity put forward by Orange Labs research is for telecom operators to build an in-network distributed cloud, i.e. to distribute the cloud across their network points of presence (PoPs). The network, seen as a continuum from large data centres to devices and sensors through network PoPs, would thus become a distributed cloud platform that could be monetised with cloud service providers. This would give telecom operators a truly differentiating position in Cloud and IoT.

Orange Labs research is investigating these subjects in partnership with Inria and other actors as part of the Discovery open research initiative. Discovery aims to deliver a Locality-based Utility Computing infrastructure: “Instead of the current trend consisting of building larger and larger data centres in few strategic locations, the Discovery consortium proposes to leverage any network Point of Presence available through the Internet” (see figure above, showing mini/micro data centres in future Orange networks).
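As a rough illustration of what locality-based placement could mean, the sketch below picks the PoP closest to a device. It is not Discovery's algorithm: the PoP names, their coordinates, and the use of great-circle distance as a stand-in for network proximity are all assumptions made for this example.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical PoPs as (latitude, longitude); real PoP locations are not given here.
POPS = {
    "paris": (48.8566, 2.3522),
    "lyon": (45.7640, 4.8357),
    "rennes": (48.1173, -1.6778),
}


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(h))


def nearest_pop(device, pops):
    """Return the name of the PoP geographically closest to the device."""
    return min(pops, key=lambda name: haversine_km(device, pops[name]))


# A device located in Nantes is served from the Rennes PoP in this toy setup.
print(nearest_pop((47.2184, -1.5536), POPS))  # rennes
```

Geographic distance is only a proxy; a production system would more plausibly rank PoPs by measured latency and available capacity.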

More info

- Forget ‘the cloud’: ‘the Fog’ is Tech’s Future. Christopher Mims. The Wall Street Journal, 2014.

- Cloud and Fog Computing: Trade-offs and applications. Flavio Bonomi, Cisco. International Symposium on Computer Architecture, 2011.

- Fog Computing and Its Role in the Internet of Things. Flavio Bonomi et al., Cisco. ACM SIGCOMM International Conference on Mobile Cloud Computing, 2012.

- Improving Web Sites Performance Using Edge Servers in Fog Computing Architecture. Jiang Zhu et al., Cisco. IEEE International Symposium on Service-Oriented System Engineering, 2013.

- Cisco Technology Radar Trends, chapter Fog Computing. Cisco, 2014.

- Announcing the “Edge computing” concept and the “Edge accelerated Web platform” prototype to improve response time of cloud. NTT Press Release, 2014.

- I’m Cloud 2.0, and I’m Not Just a Data Center. Emiliano Miluzzo, AT&T Labs Research. IEEE Internet Computing, 2014.

- Increasing Network ROI with Cloud Computing at the Edge. Intel Solution Brief, 2014.

- Beyond the Clouds! An Open Research Initiative for a Fully Decentralized IaaS Manager.