Energy: blockchain is speeding up microgrids

The features of blockchain could be a lever for modernising the energy system.

Blockchain enables energy and transaction flows to be recorded and stored in a distributed, secure and transparent way. It can be used to track energy consumption in real time or to give visibility into the asset portfolios of all participants. Through "smart contracts", it also facilitates energy certification and auditing, as well as the billing and payment of prosumers.
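To make the "secure, transparent recording" point concrete, here is a minimal sketch of the core idea: each block stores the hash of the previous one, so altering an earlier energy record breaks the chain. It assumes a single node with no consensus protocol, no smart contracts and no digital signatures; the field names (`from`, `to`, `kwh`) and the helpers `block_hash`, `append_block` and `verify` are hypothetical, chosen for illustration only.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block."""
    encoded = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_block(chain: list, transactions: list) -> dict:
    """Append a block that commits to the hash of the previous block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64  # genesis placeholder
    block = {"index": len(chain), "prev_hash": prev, "transactions": transactions}
    chain.append(block)
    return block


def verify(chain: list) -> bool:
    """Check that every block still points to the exact hash of its predecessor."""
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


# Hypothetical energy transfers between neighbours, in kWh.
ledger = []
append_block(ledger, [{"from": "house_A", "to": "house_B", "kwh": 2.5}])
append_block(ledger, [{"from": "house_C", "to": "house_A", "kwh": 1.0}])
print(verify(ledger))  # True

# Tampering with an earlier record no longer matches the hash stored after it.
ledger[0]["transactions"][0]["kwh"] = 25.0
print(verify(ledger))  # False
```

In a real blockchain, the latest block is protected because many participants hold copies and agree on them through a consensus mechanism; this sketch only shows the hash-chaining part.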

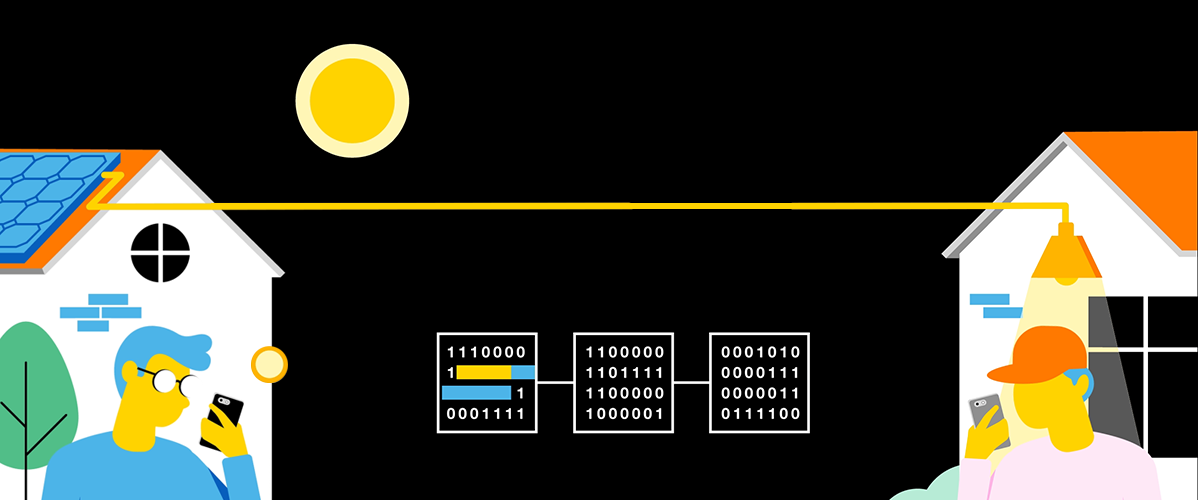

These features make blockchain particularly well suited to decentralised systems such as microgrids. These small-scale networks allow neighbours to produce, consume and share energy among themselves, and can run autonomously, disconnected from the main distribution network.

In 2016, in New York, energy technology company LO3 Energy and the startup ConsenSys inaugurated the Brooklyn Microgrid, a decentralised community electricity project in the Park Slope and Gowanus neighbourhoods.

Built on the Ethereum platform, this microgrid enables the tenants of a handful of buildings equipped with solar panels to track their production and exchange surplus electricity among themselves. Since then, dozens of similar experiments have emerged around the world.
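The article does not describe how the Brooklyn Microgrid matches buyers and sellers, so the sketch below assumes a deliberately simple, hypothetical rule to illustrate what "exchanging surplus among themselves" means for one metering interval: local surplus is shared pro rata among households in deficit, and whatever cannot be matched locally is exported to, or imported from, the main grid. The function `share_surplus` and the household names are illustrative only.

```python
def share_surplus(net_kwh):
    """Split one interval's local surplus pro rata among households in deficit.

    net_kwh maps household -> production minus consumption (kWh).
    Returns (local, grid):
      local[h] > 0: kWh sold to neighbours, < 0: kWh bought from neighbours
      grid[h]  > 0: kWh exported to the grid, < 0: kWh imported from it
    """
    total_surplus = sum(v for v in net_kwh.values() if v > 0)
    total_deficit = sum(-v for v in net_kwh.values() if v < 0)
    traded = min(total_surplus, total_deficit)  # energy that can stay local

    local, grid = {}, {}
    for house, v in net_kwh.items():
        if v > 0:
            sold = traded * v / total_surplus
            local[house], grid[house] = sold, v - sold
        elif v < 0:
            bought = traded * -v / total_deficit
            local[house], grid[house] = -bought, v + bought
        else:
            local[house], grid[house] = 0.0, 0.0
    return local, grid


# Hypothetical interval for four homes (kWh).
local, grid = share_surplus({"A": 3.0, "B": -1.0, "C": -1.0, "D": 1.0})
print(local)  # {'A': 1.5, 'B': -1.0, 'C': -1.0, 'D': 0.5}
print(grid)   # {'A': 1.5, 'B': 0.0, 'C': 0.0, 'D': 0.5}
```

On a blockchain-based microgrid, each entry in `local` would become a recorded transaction, and a smart contract could settle the corresponding payments.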

Cybersecurity: towards post-quantum encryption

Building a large-scale quantum computer, which could happen within the next twenty years, represents a threat to cybersecurity.

Today, almost all digital communications are protected by three types of cryptographic schemes: public-key encryption, digital signatures and key exchange.

These schemes are implemented by asymmetric cryptographic algorithms, such as RSA encryption or Diffie-Hellman key exchange, whose security rests on computational hardness assumptions: the presumed difficulty, for classical computers, of solving certain mathematical problems, such as factoring large integers (RSA) or computing discrete logarithms (Diffie-Hellman).
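The role of the hardness assumption can be seen in a toy Diffie-Hellman exchange. The sketch below uses a deliberately tiny prime (`P = 23`, generator `G = 5`, both illustrative) so that the discrete logarithm can be recovered by brute force; real deployments use groups of 2048 bits or more, or elliptic curves, where that search is infeasible for classical computers. `keypair` and `brute_force_dlog` are hypothetical helper names.

```python
import secrets

# Toy public parameters: a tiny prime modulus and a generator of its group.
# Insecure on purpose, so the discrete logarithm can be brute-forced below.
P = 23
G = 5


def keypair():
    private = secrets.randbelow(P - 2) + 1  # exponent in 1 .. P-2
    public = pow(G, private, P)             # G^private mod P
    return private, public


def brute_force_dlog(public):
    """Find x with G**x % P == public by exhaustive search (only feasible for toy P)."""
    for x in range(1, P - 1):
        if pow(G, x, P) == public:
            return x
    return None


alice_priv, alice_pub = keypair()
bob_priv, bob_pub = keypair()

# Each side combines its own private key with the other's public key.
alice_secret = pow(bob_pub, alice_priv, P)
bob_secret = pow(alice_pub, bob_priv, P)
print(alice_secret == bob_secret)  # True

# An eavesdropper who can solve the discrete logarithm recovers the private key.
print(brute_force_dlog(alice_pub) == alice_priv)  # True
```

The exchange is only as strong as the difficulty of `brute_force_dlog`: at realistic sizes, no known classical algorithm performs it in practical time.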

However, a sufficiently powerful quantum computer could solve these problems efficiently, for example using Shor's algorithm.
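The following toy RSA example shows why efficient factoring is fatal: anyone who can factor the public modulus can rebuild the private key. Since Shor's algorithm needs a quantum computer, the sketch substitutes plain trial division, which only works here because the textbook primes (61 and 53) are tiny. The helpers `make_keys`, `factor` and `recover_private_key` are hypothetical; `pow(e, -1, m)` for modular inverses requires Python 3.8 or later.

```python
from math import gcd


def make_keys(p, q, e=17):
    """Textbook RSA key generation (no padding; illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1
    d = pow(e, -1, phi)  # private exponent: inverse of e modulo phi
    return (n, e), d


def factor(n):
    """Trial division: a classical stand-in for Shor's algorithm, usable only for tiny n."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")


def recover_private_key(public):
    """Rebuild d from the public key alone, once n has been factored."""
    n, e = public
    p, q = factor(n)
    return pow(e, -1, (p - 1) * (q - 1))


public, d = make_keys(61, 53)
n, e = public
cipher = pow(65, e, n)  # encrypt the message 65

d_attacker = recover_private_key(public)
print(d_attacker == d)           # True
print(pow(cipher, d_attacker, n))  # 65
```

With a 2048-bit modulus, `factor` would never finish on a classical machine; the threat described above is that a large quantum computer running Shor's algorithm would.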

The advent of this technology therefore requires replacing current public-key methods with "post-quantum" alternatives, designed to resist attacks by quantum computers and based on different mathematical problems.
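One prominent family of post-quantum schemes relies on lattice problems such as Learning With Errors (LWE): recovering a secret vector `s` from noisy linear equations `b = A·s + e (mod q)`. The sketch below is a toy version of Regev's LWE bit encryption, not any standardised scheme, with parameters (`N`, `M`, `Q`) chosen only so the arithmetic is easy to follow; they offer no security. It uses Python's `random` module for reproducibility, which is not suitable for real cryptography.

```python
import random

# Toy parameters, hopelessly insecure: real schemes use far larger dimensions.
N = 8     # length of the secret vector
M = 16    # number of noisy samples in the public key
Q = 257   # modulus


def keygen(rng):
    s = [rng.randrange(Q) for _ in range(N)]                   # secret key
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]             # small noise
    b = [(sum(a * si for a, si in zip(row, s)) + ei) % Q for row, ei in zip(A, e)]
    return (A, b), s


def encrypt(public, bit, rng):
    A, b = public
    r = [rng.randrange(2) for _ in range(M)]                   # random subset of samples
    u = [sum(ri * A[i][j] for i, ri in enumerate(r)) % Q for j in range(N)]
    v = (sum(ri * bi for ri, bi in zip(r, b)) + bit * (Q // 2)) % Q
    return u, v


def decrypt(s, ciphertext):
    u, v = ciphertext
    # v - u.s equals (small noise) + bit * (Q // 2), modulo Q.
    d = (v - sum(ui * si for ui, si in zip(u, s))) % Q
    # A value near Q/2 means 1; a value near 0 (or Q) means 0.
    return 1 if Q // 4 < d < 3 * Q // 4 else 0


rng = random.Random(0)
public, secret = keygen(rng)
bits = [0, 1, 1, 0, 1]
print([decrypt(secret, encrypt(public, b, rng)) for b in bits])  # [0, 1, 1, 0, 1]
```

Decryption always succeeds here because the accumulated noise is at most `M = 16`, well below `Q / 4`. Without the secret, recovering `s` from `(A, b)` is the LWE problem, for which no efficient quantum algorithm is known.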

This work is being led by the NIST (National Institute of Standards and Technology, United States of America), which launched a global call for proposals, with submissions due by the end of 2017, to develop, standardise and deploy new post-quantum cryptosystems.

Healthcare: deep learning is improving medical diagnosis

Artificial intelligence (AI), and in particular deep learning (DL), is revolutionising medical imaging, which plays a crucial role in medical diagnosis.

Typically, a radiologist examines the captured images and writes a report summarising their findings, on the basis of which the referring physician makes a diagnosis and draws up a treatment plan.

However, human interpretation of medical images has its limits and can be extremely time-consuming. DL algorithms are now powerful enough to assist healthcare professionals in this task, and DL-based tools are being developed in a number of fields to detect and characterise pathologies, quantify the extent of disease and support clinical decisions.

In 2018, the Food and Drug Administration (FDA) authorised the marketing of the first medical device to use AI to detect diabetic retinopathy (a serious complication of diabetes that can cause blindness) without a clinician first having to interpret the image. Called IDx-DR, the software uses a convolutional neural network (CNN) to analyse images of the eye taken with a retinal camera. If the result is positive, the patient is referred to an ophthalmologist for further assessment.
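The article does not describe IDx-DR's architecture, so the sketch below only illustrates the generic building blocks that any CNN stacks in layers: a convolution that slides a small filter over the image, a ReLU that discards negative responses, and max pooling that downsamples the result. The 3×3 filter here is hand-picked to respond to vertical edges; in a trained CNN, thousands of such filter values are learned from labelled images. All function names are illustrative.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation, as in CNN layers), no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Keep positive responses, zero out negative ones."""
    return [[max(0, x) for x in row] for row in fmap]


def max_pool2x2(fmap):
    """Keep the strongest response in each non-overlapping 2x2 window."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


image = [[0, 0, 1, 1, 0] for _ in range(5)]  # a bright vertical stripe
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]                         # hand-picked vertical-edge filter

features = relu(conv2d(image, kernel))
print(features)               # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
print(max_pool2x2(features))  # [[3]]
```

The filter fires on the stripe's left (dark-to-bright) edge and is suppressed by the ReLU on the right edge, which is how early CNN layers pick out local patterns that deeper layers combine into features such as lesions.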

The FDA has also authorised the commercial use of MammoScreen, software for interpreting digital mammograms that is also based on CNNs. It was developed by the French startup Therapixel, which won first place in the DREAM Digital Mammography Challenge in 2017.

Industry: the new cobotics paradigm

Cobotics, or human-robot collaboration, is gradually spreading in industry, where it pursues the same objectives as "traditional" robotics (productivity gains, usability, safety) while offering several advantages.

Unlike a traditional industrial robot, a cobot is designed to work side by side with humans without a safety cage. It can also be programmed more quickly, easily and flexibly to carry out a wide range of tasks.

Cobotics also brings about a paradigm shift: whereas automation amounts to a transfer of skills from human to machine, cobotics involves sharing them. The machine's role is to assist rather than replace the human, for example by increasing their capacity to handle heavy parts.

Founded in 2005 by three academics, the Danish manufacturer Universal Robots dominates this still-emerging market with a 50% share. It claims to have sold 35,000 robotic arms since its creation.

The American deep tech startup Covariant aims to give robots a brain by developing an AI layer that can be added to any robot, in any environment, enabling it to adapt to new tasks by trial and error through deep reinforcement learning.
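Covariant's system is not described in detail here, so the sketch below uses a much simpler, swapped-in technique to show the trial-and-error principle behind reinforcement learning: tabular Q-learning on a hypothetical one-dimensional task in which a gripper must move to a part. Deep reinforcement learning replaces the small table `q` with a neural network so that it can handle camera images and continuous motions. The environment, reward and hyperparameters (`ALPHA`, `GAMMA`, `EPSILON`) are all illustrative assumptions.

```python
import random

N_STATES = 5          # gripper positions 0..4; the part to grasp sits at 4
ACTIONS = (-1, +1)    # move left or move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2


def step(state, action):
    """Hypothetical environment: reward 1.0 only when the gripper reaches the part."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(values, rng):
    """Pick the best-valued action, breaking ties at random."""
    best = max(values)
    return rng.choice([i for i, v in enumerate(values) if v == best])


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial: occasionally explore, otherwise exploit current estimates.
            if rng.random() < EPSILON:
                a = rng.randrange(len(ACTIONS))
            else:
                a = greedy(q[state], rng)
            nxt, reward, done = step(state, ACTIONS[a])
            # Error: nudge the estimate towards reward + discounted future value.
            target = reward if done else reward + GAMMA * max(q[nxt])
            q[state][a] += ALPHA * (target - q[state][a])
            state = nxt
    return q


q = train()
policy = [ACTIONS[q[s].index(max(q[s]))] for s in range(N_STATES - 1)]
print(policy)
```

No one tells the agent to move right: it discovers this by trying actions, observing rewards and updating its value estimates, which is the behaviour the learned `policy` should reflect after training.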

Computing: the neuromorphic revolution

Advances in AI and DL have inspired the development of new hardware architectures adapted to specific applications, such as image recognition or speech processing. Although some of these, like the GPU (Graphics Processing Unit), have proved to perform well on AI tasks, a problem remains: moving data back and forth between memory and processor not only creates latency (the "von Neumann bottleneck"), but also consumes large amounts of energy.

This is where neuromorphic chips come in, with a radically different design. They mimic the structure and operation of the human brain, which activates only the neurons and synapses it needs for a given computation, achieving maximum energy efficiency.

Like the human brain, they are less powerful than traditional processors for general-purpose computing. However, because they are specialised, they can carry out artificial neural network operations much faster and with much less energy, and can do so directly on the device in which they are embedded, without relying on the cloud. This could radically change the modern computing landscape.
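Neuromorphic chips typically model spiking neurons. The sketch below is a generic, textbook leaky integrate-and-fire (LIF) neuron in discrete time, not Intel's actual neuron model: the membrane potential leaks a little at each step, accumulates incoming current (which stands in for the weighted sum of spikes arriving through synapses), and emits a spike only when it crosses a threshold. The `threshold` and `decay` values are arbitrary assumptions.

```python
def lif_neuron(inputs, threshold=1.0, decay=0.9):
    """Discrete-time leaky integrate-and-fire neuron.

    inputs: input current at each time step (e.g. summed synaptic weights
    of the presynaptic neurons that spiked at that step).
    Returns the time steps at which the neuron fired.
    """
    v = 0.0          # membrane potential
    spikes = []
    for t, current in enumerate(inputs):
        v = v * decay + current   # leak, then integrate
        if v >= threshold:
            spikes.append(t)      # fire...
            v = 0.0               # ...and reset
    return spikes


print(lif_neuron([0.3] * 10))   # [3, 7]
print(lif_neuron([0.05] * 10))  # []: the leak outpaces the weak input
```

Because the neuron communicates only through occasional spikes, hardware built around this model can stay idle between events, which is one source of the energy savings described above.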

The Loihi processor developed by Intel Labs contains 130,000 neurons and 130 million synapses. According to Intel, Loihi can process data up to 1,000 times faster and 10,000 times more efficiently than conventional processors on certain specialised workloads.