• Because telecoms originally centered on voice communications, they have long been a focus of acoustics research: speech coding, speech recognition, speech synthesis, etc.

• Today, sound continues to drive many innovations: sound immersion for virtual reality; recognition of environmental sounds in natural spaces (detecting poaching or tree felling, measuring species populations to assess biodiversity), in urban spaces (security) or in the home (detecting when a person falls); and human-machine interactions where sound information complements or even replaces visual information.

Sound waves have many applications, some of them little known: they are used to communicate, but they can also cool or heat a building, monitor bird migrations or control a television. As society changes, acoustics contributes to many innovations that address everyday challenges, and the world of telecoms is no exception.


The place of acoustics in telecoms

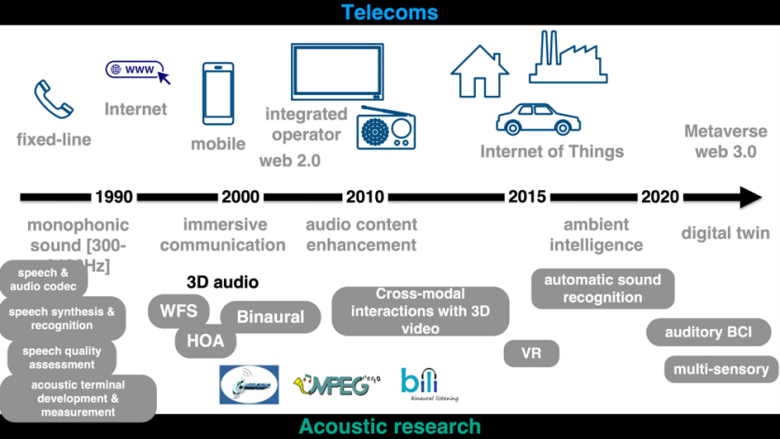

Telecommunications (or ICT, for Information and Communication Technologies) has focused on voice communications from the outset. Acoustics has long been a key research topic, including work on speech coding, speech synthesis and speech recognition. The diversification of communication technologies could have led to a decline in interest in acoustics. Quite the opposite has happened: as technologies evolve, researchers turn to new areas, resulting in a series of innovations (Figure 1): sound immersion, automatic sound recognition (which can be used, for example, to monitor natural or urban environments and homes), sound-controlled brain-computer interfaces, etc.

A look back at several decades of acoustic research in the service of the ICT of the future…

Figure 1: History of transformations in the telecoms field, with the impact on acoustic research.

From sound in all its dimensions…

The 1990s saw the emergence of the concept of telepresence (creating the illusion for the participants in a videoconference that they share the same space), and the first studies on immersive communication were launched. Sound spatialization was immediately identified as an essential component of immersion, but it requires rethinking the entire audio chain, from sound capture to reproduction, not to mention the 3D audio codecs. These new technologies were tested in a large-scale, real-world setting during the live broadcast of “Don Giovanni”, in partnership with the Opéra de Rennes. The audience loved it, and the event made waves: the performance was broadcast on the Place de la Mairie in Rennes and on tablets, allowing many people to enjoy it, including in hospitals and prisons.

Alongside other players, including the Fraunhofer Institute, Orange contributed to the development of the MPEG-H 3D Audio standard, which enables the broadcast of immersive audio content and offers great flexibility in terms of 3D audio formats and reproduction systems (5.1 multichannel setups, headphones, etc.). An extension for virtual reality is currently being developed. More recently, within another standardization body, 3GPP, the IVAS (Immersive Voice and Audio Services) codec brought immersion to voice communication services.

In the 2000s, the success of smartphones made headphone use widespread. Sound immersion then became binaural (Figure 2), and Orange contributed to the “BiLi” (Binaural Listening) project launched by Radio France and France Télévisions to develop sound immersion over headphones for the general public.

Figure 2: System for measuring binaural transfer functions in the anechoic chamber at Orange’s site in Lannion
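Binaural rendering on headphones normally filters a sound through measured binaural (head-related) transfer functions, such as those captured with the setup in Figure 2. As a much cruder illustration of two cues those functions encode, the sketch below delays and attenuates the sound reaching the far ear. The head radius, the use of Woodworth's spherical-head formula for the interaural time difference and the simple level-difference model are assumptions made for illustration, not Orange's method.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius, in meters
SPEED_OF_SOUND = 343.0   # speed of sound in air at about 20 °C, in m/s

def woodworth_itd(azimuth_rad):
    """Interaural time difference (s) from Woodworth's spherical-head formula,
    valid for azimuths between 0 (straight ahead) and pi/2 (fully to one side)."""
    theta = abs(azimuth_rad)
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))

def pan_binaural(mono, azimuth_deg, sample_rate=48000, max_ild_db=10.0):
    """Crude binaural panning: delay and attenuate the ear farther from the source.
    Positive azimuth means the source is on the right. Returns (left, right)."""
    az = math.radians(max(-90.0, min(90.0, azimuth_deg)))
    delay = round(woodworth_itd(az) * sample_rate)  # ITD rounded to whole samples
    # Hypothetical level-difference model: far-ear attenuation grows with sin(azimuth)
    far_gain = 10 ** (-max_ild_db * abs(math.sin(az)) / 20)
    near = list(mono) + [0.0] * delay               # pad so both ears have equal length
    far = [0.0] * delay + [far_gain * s for s in mono]
    return (far, near) if az > 0 else (near, far)
```

For a source at 90° the formula gives an interaural delay of about 0.66 ms, roughly 31 samples at 48 kHz; a real renderer would replace both the delay and the gain with convolution by measured transfer functions.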

… to the golden ear

The film Le Chant du Loup (Antonin Baudry, 2019) immerses the viewer in the daily life of a “golden ear”, a submariner who specializes in underwater sounds and can identify their sources (animals, earthquakes, fishing activities, submarines, etc.).

He literally monitors the dark ocean with his ears.

It now looks like we can introduce “mini golden ears” devices (with microphones and automatic sound recognition capabilities) into various environments. This approach is already widely used in the field of bioacoustics for the monitoring of natural areas, for example to combat deforestation and poaching or to assess the presence of species within a biotope.

With the Internet of Things (IoT) revolution and the rise of connected environments (smart environments, smart buildings, etc.), any object can be equipped with sensors and analysis capabilities and can communicate. When the sensors are microphones, sound analysis makes it possible to detect events (for example, a person falling) or to identify a situation in order to trigger actions. In healthcare, OSO-AI applies this principle to help caregivers monitor patients remotely. Sounds can also be used for the predictive maintenance of factory machines: everyone knows that a subtle change in a car's hum can signal a worn part or an impending breakdown.
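Detecting an event such as a fall often starts by spotting a sudden burst of acoustic energy, before a classifier decides what caused it. The sketch below illustrates only that first stage, assuming mono samples at 48 kHz (so `frame_len=480` is 10 ms) and a hypothetical threshold of eight times the median frame level. It does not describe OSO-AI or any other product mentioned above.

```python
import math
import statistics

def frame_rms(samples, frame_len):
    """Root-mean-square level of consecutive, non-overlapping frames."""
    return [
        math.sqrt(sum(s * s for s in samples[i:i + frame_len]) / frame_len)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]

def detect_impulsive_events(samples, frame_len=480, ratio=8.0):
    """Return the indices of frames whose RMS level exceeds `ratio` times the
    median frame level, used here as a rough estimate of the background noise."""
    levels = frame_rms(samples, frame_len)
    background = max(statistics.median(levels), 1e-12)  # avoid a zero threshold
    return [i for i, level in enumerate(levels) if level > ratio * background]
```

The median is used as the background estimate because, unlike the mean, it is barely affected by the short loud event itself. Flagged frames would then be passed to a sound classifier to decide whether the burst is a fall, a door slamming or something else.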

To control by thought…

Connected machines and environments can be controlled using voice assistants. This form of interaction is appealing because it is very intuitive. Control by thought goes even further: this is the promise of brain-computer interfaces (BCIs). Initial experiments used visual stimuli, but audio offers certain advantages: simple equipment (speakers or headphones), privacy (when headphones are used), the potential for faster responses, and the possibility of using 3D audio. The principle is to present different sounds to the user, each associated with a specific action (for example, turning on a lamp or changing the TV channel). Users focus their listening on the sound that corresponds to the action they want. The challenge is then to identify, from their brain activity, which sound they are focusing on.
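The source does not say how Orange's study decoded which sound the user was attending to. One common family of approaches relies on event-related potentials: each sound is played many times, the EEG segments (epochs) following each presentation are averaged, and the attended sound tends to evoke a larger positive deflection roughly 300 ms after onset (the P300). The minimal sketch below assumes that approach, a single EEG channel, a hypothetical 250 Hz sampling rate and a hypothetical 250 to 450 ms analysis window.

```python
def average_epoch(epochs):
    """Point-by-point average of equal-length EEG epochs (one per repetition).
    Averaging cancels activity that is not time-locked to the sound."""
    n = len(epochs)
    return [sum(values) / n for values in zip(*epochs)]

def attended_sound(epochs_by_sound, sample_rate=250, window_s=(0.25, 0.45)):
    """Pick the sound whose averaged response has the largest mean amplitude
    in the analysis window, assuming attention evokes a P300-like response.
    `epochs_by_sound` maps a sound (or action) name to its list of epochs."""
    start = int(window_s[0] * sample_rate)
    stop = int(window_s[1] * sample_rate)
    scores = {}
    for sound, epochs in epochs_by_sound.items():
        avg = average_epoch(epochs)
        scores[sound] = sum(avg[start:stop]) / (stop - start)
    return max(scores, key=scores.get), scores
```

Real systems typically combine several electrodes and a trained classifier rather than a fixed window, but the averaging step shows why each sound must be repeated: a single epoch is dominated by unrelated brain activity.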

In 2022, an initial study carried out at Orange demonstrated the feasibility of the auditory BCI concept. The next step is to optimize the design of the sounds used to maximize their detectability, while making them as pleasant as possible to listen to.

And that’s not all.

All these examples illustrate the richness and diversity of innovations enabled by acoustics, and the future is just as rich in opportunities. While virtual reality currently offers an essentially visual experience, enriching it with other sensory modalities could blur the boundary between the real and virtual worlds. Equipping virtual reality headsets with 3D sound is an essential first step, especially because sound naturally has great immersive power. In addition, from a cognitive point of view, auditory information can complement or even reinforce visual information, and benefits for memorization and learning have been observed.

Machine learning is also transforming audio signal processing, and the results are already very promising (coding, noise reduction, etc.).

In the longer term, faced with the challenges of the climate crisis, thermo-acoustics, which exploits the interactions between acoustic waves and thermal waves, shows great potential for cooling or heating buildings and even for generating electricity.

Glossary:

Acoustics is the scientific field that deals with sounds. It encompasses all phenomena related to their emission (speakers, musical instruments), their propagation (reverberation, insulating materials), their capture (microphones), their transmission and perception (hearing).

Telepresence is the ultimate goal of immersive communication: when you put two people or two groups of people into communication, you want to give them the illusion that they are sharing the same space, even though they are sometimes thousands of miles apart. The goal is to reproduce the natural physical conditions of a conversation. Telepresence is based on the most realistic possible reproduction of the image and voice of the speakers, including sound spatialization.

3D audio refers to the techniques used to create or reproduce sounds positioned in the space around the listener. Spatialized sounds can be played on a multi-speaker system or on headphones.

Automatic sound recognition is closely related to the automatic speech recognition used in voice assistants, the difference being that it deals with all sounds other than speech. It relies on algorithms that are now mostly neural networks trained by machine learning.

Brain-Computer Interfaces (BCIs) offer a new way of interacting with machines. They aim to control objects by translating the user's brain activity into commands. In reactive BCIs using visual stimuli, the user is presented with lights flashing at different frequencies, each associated with a specific command. Users must focus on the light that corresponds to the command of their choice.
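With flashing lights, the user's visual cortex tends to oscillate at the frequency of the attended light (a steady-state visually evoked potential), so one simple decoder measures EEG power at each candidate flicker frequency and picks the strongest. The sketch below does this on a single channel with the Goertzel algorithm; the command names and frequencies are hypothetical, and practical systems often use more robust multi-electrode methods such as canonical correlation analysis.

```python
import math

def goertzel_power(samples, sample_rate, freq):
    """Squared magnitude of the signal's spectrum at one frequency (Goertzel algorithm)."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2

def detect_command(eeg, sample_rate, commands):
    """Return the command whose flicker frequency carries the most EEG power.
    `commands` maps a command name to its flicker frequency in Hz."""
    return max(commands, key=lambda name: goertzel_power(eeg, sample_rate, commands[name]))
```

Goertzel is used here instead of a full Fourier transform because only a handful of frequencies, one per light, need to be evaluated.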

The thermo-acoustic effect is the conversion of heat into acoustic energy and vice versa. It occurs only under very specific conditions, such as those created in thermo-acoustic machines: the thermo-acoustic refrigerator, which uses a sound wave in a cavity (resonator) containing a porous structure to extract heat from a fluid, and the thermo-acoustic engine, which creates a sound wave from a heat source; that sound wave can in turn be converted into electric current using a microphone.

Sources:

Rozenn Nicol and Jean-Yves Monfort. Acoustic research for telecoms: bridging the heritage to the future. Acta Acustica, Topical Issue – CFA 2022, 7, 2023.

Read more:

French Society of Acoustics (SFA)

Schuyler R. Quackenbush, Jürgen Herre. MPEG standards for compressed representation of immersive audio. Proceedings of the IEEE 109, 9 (2021), 1578–1589.

IVAS – taking 3GPP voice and audio services to a new immersive level

Focus OSO-AI: The augmented ear of caregivers

https://www.wavely.fr/applications/maintenance-industrielle/

The challenge: Brain-Computer Interfaces that work for everyone