“Self-trackers that aim by design to change behaviours and incorporate norms raise questions about the consequences of their ‘prescriptive impact’.”

Self-tracking through technical artefacts (notebooks, scales etc.) is a very old practice that is experiencing unprecedented growth with the proliferation of digital devices – connected watches or bracelets, applications for measuring physical activity, sleep etc. This growth of the self-tracker market can be seen as part of a broader phenomenon in the “well-being” sector, in which digital technologies have gradually become more important.

This article [4] aims to provide insight into the design of self-tracking devices, with a particular basis in human-computer interaction (HCI) literature, since it is mainly in this area that these principles have been developed, notably from psychological theories of motivation and behaviour change.

The most widely used theories in this field are the goal-setting theory, the persuasive technologies framework, and the transtheoretical model of behaviour change. Although there is no empirical evidence that the systems on the market have been developed explicitly on the basis of these theories, many of these systems include features that correspond to design principles derived from them. These principles will thus be illustrated via devices already on the market.

The goal-setting theory

Developed from the late 1960s onwards by psychologists Locke and Latham [5], this theory focuses on the role of goals in activity and motivation, particularly in the context of work. It describes how individuals respond to different goals, particularly from the perspective of the impacts on their motivation and performance. According to this theory, goals drive performance, motivate individuals to pursue an activity, and direct their attention to relevant behaviours and outcomes. For a goal to be effective in terms of its motivational effects, it must be clear, precise and difficult, while being realistic. In addition, it must be accompanied by informative feedback on progress, support in reaching the goal and rewards once it is reached. Finally, it needs to have been established with the participation of all the employees involved.

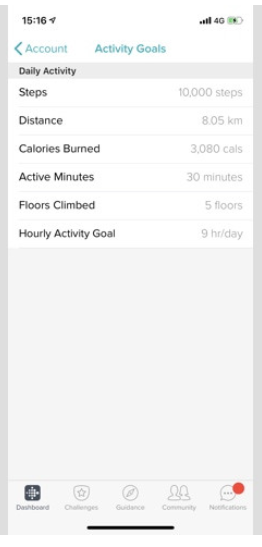

This theory has been widely used in HCI to design self-trackers, notably in the form of the ability to set goals and receive informative feedback on how to achieve them. These features are now ubiquitous in many of the devices on the market. For example, the Fitbit Charge 3 app provides the user with an interface in which goals can be set (Figure 1).

Figure 1 – Fitbit Charge 3 interface for setting physical activity goals (number of steps, distance travelled, stairs climbed, etc.).

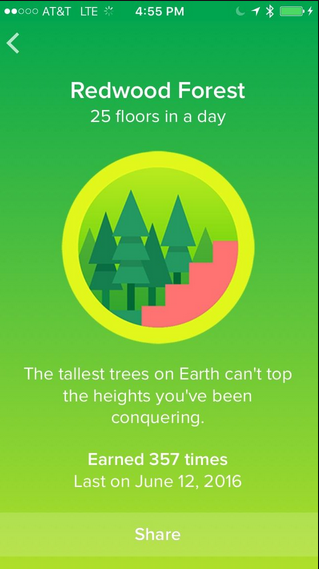

In these apps, goals can be set by default (for example, 10,000 steps or 8 hours of sleep) or by the user. Feedback on the achievement of goals is given to the user in different forms, for example, in numbers, graphs or through notifications (Figure 2). This feedback is based on an assessment of the discrepancies between goals and what has been achieved at a given time and may be accompanied by incentive messages to achieve goals or by rewards that are part of the gamification (Figure 2).

Figure 2 – Examples of feedback on the achievement of goals. Left: The Fitbit app uses colour coding and rings to indicate deviations from goals (green and complete ring = goals achieved; blue and partial ring = goals not yet achieved). Right: A “badge” (gamification) rewarding the user for having climbed 25 floors during the day.

These rewards embody the process of “positive reinforcement” developed within the framework of behaviourism.[1] It consists of increasing the likelihood that behaviour will be repeated, through the use of pleasant or rewarding “stimuli”.
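The feedback loop described in this section (compare a goal with what has been achieved, report the gap, and grant a reward once the goal is met) can be sketched in a few lines of Python. This is only an illustrative sketch, not the logic of any actual app: the names `GoalFeedback` and `goal_feedback`, the message wording and the badge rule are all assumptions, and only the green/blue colour coding is borrowed from the Figure 2 caption.

```python
from dataclasses import dataclass


@dataclass
class GoalFeedback:
    progress: float   # fraction of the goal achieved, between 0.0 and 1.0
    remaining: float  # amount still needed to reach the goal
    colour: str       # "green" when the goal is achieved, "blue" otherwise
    message: str      # incentive or congratulation text (hypothetical wording)
    badge: bool       # whether a reward is granted (assumed rule: goal met)


def goal_feedback(goal: float, achieved: float) -> GoalFeedback:
    """Assess the discrepancy between a goal and what has been achieved."""
    if goal <= 0:
        raise ValueError("goal must be positive")
    achieved = max(achieved, 0.0)
    remaining = max(goal - achieved, 0.0)
    progress = min(achieved / goal, 1.0)
    if remaining == 0:
        # Goal met: complete ring plus a reward (positive reinforcement).
        return GoalFeedback(progress, remaining, "green", "Goal achieved!", True)
    # Goal not yet met: partial ring plus an incentive message.
    message = f"{remaining:g} to go, keep moving."
    return GoalFeedback(progress, remaining, "blue", message, False)


if __name__ == "__main__":
    print(goal_feedback(goal=10_000, achieved=7_500))
    print(goal_feedback(goal=25, achieved=25))
```

The same function works for a default goal (for example, 10,000 steps) or one chosen by the user, since the goal is simply a parameter.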

The persuasive technologies framework

Persuasive technologies have been a highly active research topic for about fifteen years, focused on the design and use of computer devices as tools for behaviour change. In general, these technologies are defined as systems specifically designed to “change the attitudes and behaviors of their user” through persuasion and social influence.[6] A significant part of the work in this field thus aims to develop and study design principles that promote or facilitate these changes.

As such, four dimensions are considered fundamental in the design of persuasive devices. The first concerns support for the development of the target behaviour (or attitude) – for example, through reduction (minimising the effort required for the individual to engage in the behaviour), personalisation, self-monitoring or simulation (allowing the user to explore the links between cause and effect relating to these behaviours). The second dimension concerns human-machine dialogue; the goal is to ensure that the system provides the individual with informative feedback to help them progress towards their goals of behaviour change, for example through positive messages (congratulations, compliments, etc.), rewards, reminders or suggestions. The third dimension is the credibility of the system, which is considered essential to the success of the persuasion. It involves design principles such as expertise (the system must demonstrate expertise in the area in question), authority (it must refer to or cite competent, recognised authorities) and verifiability (the content or information must be verifiable). Finally, the fourth dimension concerns social influence. It consists of relying on the social environment to motivate individuals to transform their behaviour, for example, through social comparison, competition, cooperation, normative pressure or social recognition.
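One way to make these four dimensions concrete is to treat them as a checklist against which the features of a device can be compared. The sketch below is a hypothetical illustration: the dictionary keys are shorthand labels for the four dimensions, the principles are those listed above, and the function name `dimensions_covered` and the sample feature list are assumptions rather than part of the framework.

```python
# Shorthand labels (assumed) for the four dimensions and the example
# design principles the text lists under each of them.
PERSUASIVE_DIMENSIONS = {
    "target behaviour support": {
        "reduction", "personalisation", "self-monitoring", "simulation",
    },
    "human-machine dialogue": {
        "positive messages", "rewards", "reminders", "suggestions",
    },
    "system credibility": {
        "expertise", "authority", "verifiability",
    },
    "social influence": {
        "social comparison", "competition", "cooperation",
        "normative pressure", "social recognition",
    },
}


def dimensions_covered(features):
    """Map each dimension to the principles found in a device's features."""
    present = set(features)
    covered = {}
    for dimension, principles in PERSUASIVE_DIMENSIONS.items():
        matched = sorted(principles & present)
        if matched:
            covered[dimension] = matched
    return covered


if __name__ == "__main__":
    # Hypothetical feature list for an imaginary activity tracker.
    tracker = ["self-monitoring", "reminders", "rewards", "social comparison"]
    for dimension, principles in dimensions_covered(tracker).items():
        print(f"{dimension}: {', '.join(principles)}")
```

A dimension that does not appear in the result, such as system credibility in this example, is one that the listed features leave unaddressed.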

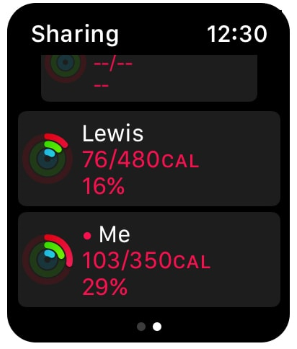

These principles incorporate the behaviour change techniques already mentioned above, including positive reinforcement based on rewards, or feedback on the achievement of goals. More generally, creators who subscribe to this persuasive approach draw on different psychological theories of persuasion, motivation or behaviour change. Many studies have drawn on this persuasive technologies framework, in particular to design systems that promote health and well-being. Even if the companies that market them do not portray them as “persuasive” systems, some devices reflect this aim of transforming behaviour (Figure 3).

Figure 3 – Example of physical activity incentives and social comparison methods used in the Apple Watch. Left: A notification prompting the user to keep going to achieve their goal (“stand up and move a little”) and at the same time telling them that they are approaching it (“you’re getting closer to your Stand goal”). Right: Screen telling the user about the physical performance (number of calories burnt) of a “friend” (Lewis), as they share their data with one another.

This persuasive approach has been widely criticised, particularly in terms of its social and ethical implications, for example, the fact that these systems can “impose” behavioural norms that are determined by designers. This is the case, for example, with taking 10,000 steps per day. This objective has been the subject of much criticism: it does not take into account the intensity of walking, it is too high a standard, and it may even cause health problems for some people.[7] Other criticisms concern the risks of manipulation or the use by companies of the personal data collected by these systems. For example, a version of the Apple Watch app for the game Pokémon Go offers to count the number of steps taken, distance travelled or calories burnt in Pokémon hunts. At first glance, this use of Pokémon hunting can be interpreted as a source of physical activity to encourage, but one wonders whether it is also a subtle form of prompting or manipulation to play this game. Here, the designers’ intentions raise questions.

These problems have thus led to the development of ethical rules that aim to regulate the design of persuasive systems. These rules include respecting users’ privacy, making the goals of the designers public along with the methods of persuasion used, not designing systems for manipulation, and taking into account the responsibility a designer has for all possible consequences of using a persuasive system.[8]

The transtheoretical model of behaviour change

Developed in the 1970s, this model describes the stages through which an individual passes in trying to change behaviours.[9] This model, which is known as transtheoretical because it incorporates principles and models from other psychotherapeutic theories of behaviour change, distinguishes seven stages, which do not follow a strictly linear order but must be passed through one by one for a change to occur and endure: pre-contemplation, contemplation, preparation, action, maintenance, relapse, achievement.

To illustrate these stages, let us take the case of tobacco consumption. At the first stage, the individual has little or no awareness of a problem related to this habit and there is resistance to the idea of recognising the need to change (they do not consider smoking a problem and do not want to stop). As they move into contemplation, they begin to realise the problem and the benefit there may be in changing these habits. They are seriously thinking about change (quitting smoking) but are not yet ready to commit to this change. In the preparation phase, the individual forms an action plan (for example, consulting a doctor to stop smoking). Action is the phase in which the individual begins to introduce changes into their behaviours (for example, reducing tobacco consumption). Maintenance describes the stage at which the individual works to avoid returning to the stage preceding the change. Relapse is the phase that describes a return to previous stages. Achievement corresponds to a phase in which the individual adopts the intended behaviour permanently (cessation of tobacco consumption).
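The stages above lend themselves to a small state-machine sketch. The stage names come from the model as described here, but the transition rules below are simplifying assumptions made for illustration: relapse is assumed to be possible only from action or maintenance, and a relapse is assumed to lead back to any stage before maintenance. The model itself does not prescribe these exact rules.

```python
from enum import Enum


class Stage(Enum):
    PRE_CONTEMPLATION = 1
    CONTEMPLATION = 2
    PREPARATION = 3
    ACTION = 4
    MAINTENANCE = 5
    RELAPSE = 6
    ACHIEVEMENT = 7


# Forward progression, one stage at a time.
FORWARD = {
    Stage.PRE_CONTEMPLATION: Stage.CONTEMPLATION,
    Stage.CONTEMPLATION: Stage.PREPARATION,
    Stage.PREPARATION: Stage.ACTION,
    Stage.ACTION: Stage.MAINTENANCE,
    Stage.MAINTENANCE: Stage.ACHIEVEMENT,
}

# Assumption: a relapse can only happen once change has started.
CAN_RELAPSE = {Stage.ACTION, Stage.MAINTENANCE}

# Assumption: after a relapse, the individual resumes at an earlier stage.
RESUME_AFTER_RELAPSE = {
    Stage.PRE_CONTEMPLATION,
    Stage.CONTEMPLATION,
    Stage.PREPARATION,
    Stage.ACTION,
}


def allowed_next(stage: Stage) -> set:
    """Return the stages reachable in one step from the given stage."""
    options = set()
    if stage in FORWARD:
        options.add(FORWARD[stage])
    if stage in CAN_RELAPSE:
        options.add(Stage.RELAPSE)
    if stage is Stage.RELAPSE:
        options.update(RESUME_AFTER_RELAPSE)
    return options


def is_valid_path(path: list) -> bool:
    """Check that every consecutive pair of stages is an allowed transition."""
    return all(b in allowed_next(a) for a, b in zip(path, path[1:]))


if __name__ == "__main__":
    smoker = [
        Stage.PRE_CONTEMPLATION, Stage.CONTEMPLATION, Stage.PREPARATION,
        Stage.ACTION, Stage.RELAPSE, Stage.CONTEMPLATION, Stage.PREPARATION,
        Stage.ACTION, Stage.MAINTENANCE, Stage.ACHIEVEMENT,
    ]
    print(is_valid_path(smoker))
    print(is_valid_path([Stage.PRE_CONTEMPLATION, Stage.ACTION]))
```

In this sketch achievement has no outgoing transition, which mirrors the description of it as the phase in which the intended behaviour is adopted permanently.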

According to this model, the production of change involves ten “processes” that allow us to move from one stage to another, for example, awareness of the causes and consequences of behaviour; reassessment of the effects of continuing or stopping behaviour on the social environment; the establishment of “stimuli” to promote change; or self-reinforcement. This model has been used by some creators to design or think about self-tracking systems in ways that assist in behavioural change, for example, motivating people to adopt more environmentally friendly behaviours. However, use of this model of behaviour change in design remains marginal, and it seems unlikely that it has been used in design contexts outside research.

Conclusion

From the examination of the literature and devices on the market, it appears that the main theoretical sources used (explicitly or implicitly) in the design of self-trackers are psychological theories of motivation and behaviour change. While trackers were initially focused primarily on quantification, devices are emerging that increasingly incorporate behaviour change features. Thus, these devices increasingly support behaviour regulation (control and evaluation) operations on the basis of objectives determined by the designers or by the user. These devices thus involve a hybrid form of distributed self-regulation, since some regulation is “delegated” to these systems by design. Moreover, some companies like Fitbit even present these devices as electronic “coaches”. The company’s website reports “stories” describing how their products have helped people change their behaviour and, more broadly, their lives. These systems are no longer designed as mere quantification tools, but as true “agents” that support behaviour change. The use in some applications of artificial intelligence systems as “chatbots” capable of creating a dialogue takes a step in this direction (see, for example, Lark, which offers an AI “personal health coach”[2]).

However, this strategy of developing systems that include behaviour change techniques is debated, particularly where the persuasive approach is concerned. It calls into question the role of designers, as well as the role of technologies in controlling user activities. One point of the debate is the fear that systems developed according to this approach surreptitiously prescribe or even impose social norms, that is, behaviours determined by designers or by private or even political entities. In general, it is essential that ethical and social issues like these be taken into account in the design of self-tracking services that include behaviour change techniques. This could include greater transparency from companies about the techniques and “norms” involved in self-trackers (for example, where do the norms come from? Who produced them? What techniques are used?). One must also consider the social implications of these systems’ “prescriptive impact” as they incorporate these techniques and norms. Finally, beyond the questions raised by the use of behaviour change techniques, there are also those concerning the use by companies of personal data that are collected by these systems (processing, storage, dissemination, etc.). Google’s recent acquisition of Fitbit has raised fears about how Google could use these data.[3] These fears point once again to the importance of the transparency of digital companies in what they do with the personal data they collect.

For further information

- [1] A branch of psychology that seeks to account for behaviours in terms of the relationships between “stimuli” and “responses”.

- [2] https://www.lark.com

- [3] In a recent press release, Google promises that it will protect users’ privacy and that users will have control over their data (https://blog.google/products/devices-services/fitbit-acquisition).

- [4] This article is based on work done as part of the QUANTISELF project – ANR project 16-CE 26-0009.

- [5] E. A. Locke and G. P. Latham, “Building a Practically Useful Theory of Goal Setting and Task Motivation: A 35-Year Odyssey,” American Psychologist 57, no. 9 (2002): 705–17.

- [6] B. J. Fogg, Persuasive Technology: Using Computers to Change What We Think and Do (Morgan Kaufmann, 2002).

- [7] https://www.theguardian.com/lifeandstyle/2018/sep/03/watch-your-step-why-the-10000-daily-goal-is-built-on-bad-science

- [8] D. Berdichevsky and E. Neuenschwander, “Toward an Ethics of Persuasive Technology”, Communications of the ACM 42, no. 5 (1999): 51–58.

- [9] J. O. Prochaska and W. F. Velicer, “The Transtheoretical Model of Health Behavior Change”, American Journal of Health Promotion 12, no. 1 (1997): 38–48.

- [10] M. Zouinar, “Théories et principes de conception des systèmes d’automesure numériques. De la quantification à la régulation distribuée de soi” (Theories and design principles of digital self-tracking systems: from quantification to distributed self-regulation), Réseaux 4, no. 216 (2019): 83–117.