The growth of email use at work since the 1990s has resulted in a continual increase in the number of messages exchanged. Many studies on this increase have shown the adverse consequences on workers and their performance (“cognitive overload”, interruptions, fragmentation of work, or stress [1, 2]). To reduce these consequences, many technological and organizational avenues have been explored. Among the technological solutions suggested examples include systems for classification into different groups, automatic filtering (anti-spam filters), and prioritization of messages according to context (user activity, message subject, sender, etc. [3]).

In this vein, a system was developed as part of a research project. The main objective of this research prototype is to assist the user in his/her processing of incoming messages, taking advantage of the progress made in machine learning. This system was the subject of a use study as part of an experiment in a real working situation in a bank. The objective was to explore various questions: what help does the system really provide in processing incoming messages at work? Does it reduce the workload for users? Does it reduce interruptions? How do users appropriate the tool? Does it transform the way they use email? How do they react to a learning system, in terms of trust, for instance?

Description of the system

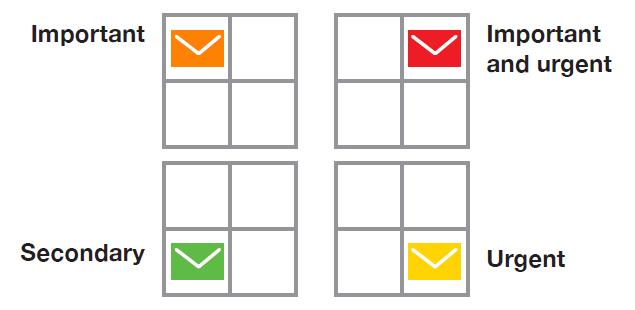

The system is a plug-in for the Outlook email client. Integrated in the toolbar of the inbox, its main function is to prioritize received emails based on their level of importance and urgency. It calculates a score based on three main types of data: the sender, the subject and the body of the email. The plug-in categorises messages in four categories with colour coding (Figure 1): “important” in orange, “important and urgent” in red, “urgent” in yellow and “secondary” in green. This categorisation was inspired by the “Eisenhower Matrix” [4], which classifies the tasks involved in an activity.

Figure 1. Categories used in the system Ml

To categorize the messages in a way that is appropriate for the user, Smart Mail creates a personalised learning model based on existing messages and email usage practices. One particularity of this system is that its learning can be supervised by the user. Thus, when the system is not “sure” how to categorize a message, it asks the user to confirm. The system also manages Outlook received message notifications. These are deactivated for messages classified as secondary. An important point is that all message processing is done locally, on the user’s PC. The data therefore remains private. The plug-in also offers other functions: creating a company vocabulary library with the aim of facilitating access to the information contained in the emails by enriching it; marking messages as “dealt with”; displaying all the emails not dealt with in the form of a matrix; sorting emails by time period; and sorting them by urgency and importance. Figure 2 presents all the functions that appear in the Outlook bar when the plug-in is installed.

To analyze use of this plug-in and its consequences on the handling of incoming messages, we conducted a qualitative study in two phases with a small sample of users (all managers: directors, project managers, technical experts, internal consultants, etc.) belonging to the IT department of a French bank, which wanted to test the prototype having discovered it at the Orange research exhibition.

The objective of the first phase, conducted just after the installation of the plug-in, was to better understand overall use of email, based on the profile and activity of the person concerned, and to gauge the first reactions to using the system.

The second phase (two months after startup) focused on the uses of the system. In-depth interviews were conducted with participants during each of these two phases.

Figure 2. All features displayed on the Outlook task bar

Appropriation of email categorisation

The first observation arising from analysis of this system use was the difficulty users experienced in identifying with the four categories used by the system to classify messages.

The two categories they had most often used were “important” and “secondary”. The “important” category encompassed all emails that required a reply, whereas the “secondary” category referred to emails that contained useful information but did not require a reply (e.g. information emails received after signing up for a mailing list or emails in which the recipient is only CC’d).

The distinctions between the “urgent”, “important” and “urgent and important” categories did not seem easy for users to understand or apply. They generated additional cognitive work, particularly in the long term:

“The difficulty is obtaining an even judgement over time. […] Importance is something you see straightaway. Urgency is something you always have trouble seeing. Is it really urgent? Do you just want to deal with it to get it out of the way?… You have to be consistent and methodical in the rule you apply. It’s a learning process, but you still have to be consistent. If you change your mind every three days, you will disrupt the machine’s learning…» (Director 1, interview 1).

The cognitive cost here is characterized by the need for the user to maintain consistency in his/ her assessments so as not to disturb the machine’s learning (having the same “rule” for dealing with urgency and “not dealing with an email quickly just to get rid of it”).

Two user profiles, depending on the volume of emails received

The analysis of usage practices showed two categories of users, chiefly based on the number of emails received. The first category comprises the senior managers present in our sample, who received a large number of emails (50 to 200 per day). For these users, the system managed to learn relatively quickly, classifying as “secondary” a large number of emails from mailing lists, which often contained advertising or information about events. These managers progressively developed trust in the plug-in’s categorizations:

“I’ll have to have another look to tell you everything, but generally the system was good at identifying what was of secondary importance for me… Overall, the categorization was good” (Director, interviews 1 and 2).

“The longer we go on, the more trust I have in it. You can see that it makes adjustments. I don’t have blind faith in it, but it is relevant” (Management committee member, interview 1).

The other managers, who received far fewer emails (less than 50 a day) and were more directly concerned by the emails received, tended to manage their emails as and when they came in. These managers were more skeptical of the system’s categorizations:

“In the end I didn’t really trust the tool […] I was wasting more time because I was always having to recategorize, as it kept marking emails I considered important in green […] It had the opposite effect to that intended: instead of saving me time, it made me waste time” (Project manager, interview 2).

This wariness was further fuelled by the opacity of the algorithm’s categorisation rules:

“I think the main thing is understanding how emails are classified, because if you don’t understand that, there is no point in the tool doing it” (Consultant 1, interview 1).

Some users wanted to be able to customize the system by adding a few rules and perhaps choosing a specific categorization.

Varying consequences on activity

The analysis of usage practices also reveals different types of effects, showing both the advantages and the potential disadvantages of the system, based on the way in which it is used.

- Reduction of interruptions in some cases, but a feeling of loss of control in others

One of the advantages of using the Smart Mail plug-in is the deactivation of received email notifications for emails deemed to be secondary. This function was intended to reduce the micro-interruptions secondary messages can generate.

On this point, the effects of using the plug-in on the work of the two user profiles identified are:

- For senior managers, a feeling that interruptions are being limited, that time is being saved and that they are discovering new, more effective ways of processing their emails and managing their time (e.g. handling of secondary emails by time slot);

“I’m better able to optimize my time, I’m more efficient, I deal with what is important […] I’ve been working on a time lag for several weeks” (Director, interview 1).

- For the other managers, a feeling of loss of control over received messages due to the deactivation of notifications, which is deemed to have an adverse effect on their work. In the interview extract below, a manager indicates the consequences of having missed a message that was classed as secondary:

“He called me and said ‘you didn’t reply. It’s too late now’”(Consultant 2, interview 1).

Consequently, these managers continue to deal with all messages as and when they come in and are gradually stopping using the plug-in.

- Extra work for the user

Another type of effect on working activities is the additional work generated for the user by the need to confirm the categories over time periods of various lengths according to the different types of emails received:

“I felt the benefit of not having pop-ups anymore… definitely, but I feel like at least part of that gain is being lost re-tagging things where necessary” (Management committee member, interview 2).

“I feel like when you start a new discussion thread, you will always have to interact to say ‘no, that is important’ or ‘that is secondary’, but I tend to barely do that anymore…” (Director, interview 1).

This consequence raises the question of the efficiency and duration of the system’s learning and the user’s role. Indeed, while it is absolutely pertinent to involve the user in the learning process, that involvement can quickly become counterproductive if he/she is constantly having to check the system’s categorisations.

Conclusion

This study provides interesting insights on the potential usefulness of machine learning in the management of emails.

First of all, usage and benefits of the system vary according to different factors such as the number of emails received (related to the type of user activity) or trust in the system. Secondly, the difficulties in understanding the rules used in the categorization is an obstacle to appropriation for some participants. A final observation concerns the occurrence of a tension between the possibility offered to the user to supervise the learning phase of the system (by validating the categories) and the effort required for this supervision. This effort may be experienced as too costly in the long run. Finally, among the categories used by the system, two of them proved to be really relevant for the users: e-mails requiring and not requiring an answer.

While these insights need to be confirmed by a second study based on a larger sample, an immediate area for improvement is to facilitate the appropriation of the system by offering users an “à la carte” personalization of categories adapted to their own needs and e-mail practices.

But beyond such immediate improvements, if the system seems to be promising for helping users coping with e-mail “overload”, its adoption in the longer term by a greater variety of users will necessarily involve taking into account, from the early stages of design, the consequences of its use on their activities.

[1] Whittaker S., Sidner C. (1996). E-mail overload: exploring personal information management of e-mail. Proceedings of the SIGCHI conference on Human factors in computing systems: common ground, pp. 276–283.

[2] Assadi, H. et Denis, J. (2005). Les usages de l’e-mail en entreprise : efficacité dans le travail ou surcharge informationnelle ? In E. Kessous, Le travail avec les technologies de l’information (p. 135-155). Paris: Hermes.

[3] Salembier, P. et Zouinar, M. (2017) Interruptions et TIC. De l’analyse des usages à la conception. In Alexandra Bidet, Caroline Datchary et Gérald Gaglio (Eds) : Quand travailler c’est s’organiser. La multi-activité à l’ère numérique. Presses des Mines

[4] Matrice Eisenhower : https://everlaab.com/matrice-eisenhower/