Rankings, awards, counters, menus, lists, recommendations and ratings are proliferating on web service interfaces. They direct web users’ attention, guide their browsing, sort and rank information, suggest friends, recommend content, and personalize the advertising to which they are exposed. While public discussion of big data is currently focused on the volume of data involved, the biggest challenge for this movement is our capacity to interpret these data, using computers that are able to give them meaning. It is therefore important to know to what extent the visible products of algorithmic filtering (counters, menus, lists, recommendations, buttons, etc.) are adapted to users’ behaviours and conceptions.

Two views of personalization

The growing prevalence of these calculation tools has provoked plenty of debate, with the threat of «algorithmic governmentality» (Antoinette Rouvroy, [1]) always looming large in discussions. The spectre of «Big Brother» is never far away, and comprises two risks: the risk of seeing web users’ behavior prescribed and determined by the algorithms of online platforms (ranking, suggestion, recommendation, etc.) and the risk of users losing possession of their personal data and traces. Those who subscribe to this view see web users being imprisoned to an ever greater extent in a world built by web algorithms: search engines, ad targeting, rating systems, imposed personalization and information filtering. Information filtering, which is particularly prevalent on social networks, is perceived to hinder users’ autonomy and reduce their critical faculties (see Eli Pariser, The filter bubble, 2011 [2]).

Two opposing views can be distinguished in the public debate. On one side are the players who produce web technologies who advocate personalization with a view to strengthening the quality and relevance of web services. On the other are those sounding alarm bells about the potential threats to privacy and the limited possibilities offered to individuals when interfaces purport to have a calculated knowledge of their desires and intentions. More and more accusations are being levelled against web giants. They combine economic criticism and fears about the management of personal data. Web users’ attitudes often fall between these two positions: for instance, the growing concerns about Facebook’s policy on personal data do not prevent the social network from clocking up over 31 million active users every month in France (source: Facebook, 2016).

Several aspects of the question of algorithmic guidance of web users are worthy of attention. The first is that, in reality, no one really knows what web users do, or do not do, with web metrics (number of views, likes, retweets, etc.) or how they influence their behaviour. The effects of algorithms are, more often than not, simply deduced from their designers’ intentions. Some critics foresee an Orwellian society, making sweeping generalizations based on what designers say they want their tools to do.

There are two problems with such generalizations. Firstly, they assume that algorithms actually do the kind of things their designers want them to do – an assertion disproved by the history of science and technology. Secondly, they conceive of users as naive and passive players whose behaviour is entirely programmable from platforms – a theory discredited by the sociology of practice. In reality, the prescriptive force of web metrics is much more complex, uncertain and diluted.

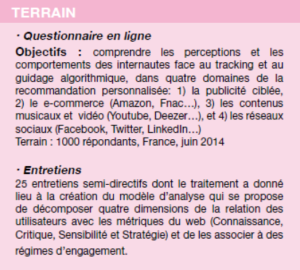

The aim of our survey was to document these digital usage practices: how do web users perceive algorithmic guidance and how does it fit in to their usage practices on various kinds of web platform?

Practical knowledge

The first significant result is that, overall, users have little knowledge of the platforms studied (Google, Amazon, Facebook, YouTube, Twitter, etc.) and level very few criticisms about the constrictive nature of these calculation toolsOnly 16% know that Facebook’s news feed is filtered based on the friends with whom the user interacts the most, and only 5% know that Google search results give first priority to websites linked to from other popular websites (PageRank).

It emerged from the interviews that they do not see platforms as guiding them or exerting undue influence, and that their main worry concerns the use of private data. The verdict on personalization algorithms is ambivalent, and varies from one sector to another: recommendation is deemed «intrusive», particularly in advertising (78%) and e-commerce (61%), but also in Facebook news feed filtering (59%). That said, web users are not necessarily averse to the principle of algorithmic personalization: 4 in 10 web users consider personalization of advertising to be «a good idea», while 6 in 10 say it is a good idea for e-commerce and music or video.

Users’ knowledge of algorithms therefore stems primarily from their usage practices. Web users have often experienced «failed» algorithmic personalization. These failures are of two kinds. In the advertising world, recommendation keeps making the same mistake, «constantly showing the same thing». This type of failure is identified by 80% of web users in relation to advertising, and 61% with regard to e-commerce. As far as content recommendation tools are concerned, lack of relevance is the main pitfall: around 4 in 10 respondents have experienced «content that doesn’t interest [them]” in their Facebook news feed, or in music/video recommendations (YouTube, Deezer, etc.).

Faced with tools that are difficult to understand, and often considered intrusive, web users do not remain passive, acting in different ways depending on the options sites offer them. These options are complete withdrawal (37% use ad blockers, 72% clear their browsing history); configuration (52% try to turn off recommendations, 38% delete some of them); or helping to make the content more relevant (29% provide information about their tastes and preferences, 34% evaluate products or content in order to improve the quality of the recommendations made). In short, the two ways in which algorithms miss their target – repetition and irrelevance – provoke two kinds of reactions from web users: avoidance and improvement. This is the second significant result of our survey: internet users do not submit entirely to the prescriptions of web algorithms. In practice, internet users demonstrate thoughtful attitudes towards calculated content and web metrics.

We have identified 5 types of links between internet users and algorithmic recommendation and guidance functions; five ways in which an algorithm and a web user can have a hold over one another:

- “NO HOLD”: the user ignores the algorithm, is indifferent to it, or operates outside of its framework. «When I post, it’s just for me. Facebook is like my memo book.»

- “HELD”: the user accepts the algorithm and allows him/herself to be guided by it without knowing anything about it, he/she takes the algorithm as it is. «I nearly always take the first, second or third result.»

- “HOLDING”: the user appropriates the algorithm, pays attention to it, has a practical knowledge of it, and embraces it. «I find Spotify’s algorithm better than Deezer’s.»

- “TAKEOVER”: the user takes control of the algorithm, is strategic, configures and explores it. «People log in to Facebook around 9am, 12 noon, 4pm and 6pm, so I post during those times.»

- “FREED”: the user breaks free of the algorithm, gets around it, avoids it. «I often use private browsing when I don’t want to leave any traces.».

The picture that emerges is one of pragmatic, opportunistic web users who make local use of knowledge and holds according to their needs and interests. And while the sense of algorithmic alienation generally spoken about in the press was mentioned by some users interviewed, it was always in the third person: «people who have power can easily use this kind of tool to manipulate, but I am careful», «people don’t really know, and it’s quite dangerous». In other worlds, algorithmic guidance is dangerous for others, but not for themselves.

Algorithm knowledge

The study highlights the decisive importance of «algorithm knowledge» to enable web users to be fully aware of the implications of the choices they take. While subjects such as personal data are often spoken about, giving rise to prevention and information campaigns, the topic of algorithmic guidance is still absent from the initiatives. Yet this subject appears to be a central topic in the development of a «digital culture», lying as it does at the heart of interactions between web platforms and users.

Our fieldwork revealed great disparities in interviewees’ knowledge of algorithmic guidance, which can often leave them feeling incompetent. Schools could therefore be an important battleground in the fight against such inequalities. The MOOC «Teaching and training with digital technology» launched in May 2014 by the French Ministry of Education for teachers and trainers, and the optional computer coding classes for French primary schools from the 2016-17 academic year are good examples of the digital dynamic that runs through education today. It seems to us that a programme to raise awareness of web algorithms, based not only on the technical aspects but also on the concept of guidance, could certainly have a place in such initiatives. Such a programme would not only help address web users’ relative naivety regarding digital systems, but also assist in user empowerment and the development of a digital culture. The right systems would then have to be built to support this approach and find a place for it in usage pathways.

At the same time, efforts would have to be made on the algorithmic tools themselves in order to build users’ algorithm knowledge and incorporate such knowledge in usage practices. Indeed, it would now seem necessary to design calculation tools that leave enough room and scope for users to configure them, so that they can more easily develop modes of engagement such as takeover, and avoid the alternative, «love it or leave it». It is also important, when designing services, to clarify for users which content is calculated and how these calculations work.

At an even earlier stage, from the very start of the design process, developers need to adopt the approach advocated (in another field) by Ann Cavoukian, whose concept of privacy by design suggested making protection of privacy an integral function of systems and not a problem considered after the fact, by which time it is difficult to integrate in usage practices. In the specific case of algorithmic guidance, that would mean defining concepts such as algorithm knowledge by design and freedom from guidance by design.