Summary

When using data in a digital service, one usually has to trade off data usage (ease of operation, performance) against data security (user privacy, data confidentiality). This article shows how advanced cryptographic techniques can reconcile these two conflicting objectives. Many use cases are relevant in this context; two of them have been fully implemented:

- a multi-bank automatic spending classification that sorts banking transactions into categories while keeping the transactions hidden from the service provider; and

- an electrocardiogram (ECG) signal classification that can help a practitioner detect arrhythmias, using a third-party service that learns nothing about the patients’ ECGs.

Full Article

“Your sensitive data is useful while your privacy is protected.”

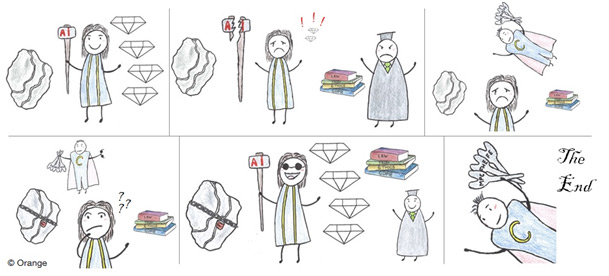

Data is a pure diamond, and Artificial Intelligence (AI) is the hammer that shapes it accurately[1],[2]. AI algorithms are now widely deployed and power services such as search engines, recommendation, threat detection, targeted advertising, service improvement and medical assistance.

However, manipulating personal and/or sensitive data also comes with constraints.

First, regulation can be a serious impediment to the spread of these algorithms. Telecom regulation forbids a telecom operator from manipulating the communication data that travels through its infrastructure. Healthcare regulation restricts how medical data can be processed, and by whom. More recently, the General Data Protection Regulation (GDPR) has limited how individuals’ data can be used in the services mentioned above.

How a party may manipulate sensitive and personal data is also closely tied to ethics and responsibility, and should be handled accordingly. The confidence end users have in the way their own data is handled should also be taken into account.

Last but not least, the data sets needed by AI algorithms may have to be stored on servers exposed to cyberattacks. A data leak would be very damaging for the service provider, both legally and for its brand image.

Technical solutions are therefore needed that are capable of

- allowing AI algorithms to run as usual on large data sets, while

- complying with regulation and protecting those data.

In fact, there are two different approaches. The first one is anonymization, which irreversibly alters data so that it is no longer possible to re-identify anyone, directly or indirectly, in the dataset. This technique has the advantage of lifting the constraints of the GDPR. But it also has two main disadvantages. First, it is sometimes very difficult to achieve, especially when the data is very complex (such as browsing logs or Call Detail Records) or when it can be cross-referenced with other sources. Second, since the link between the data and each individual is removed, it is no longer possible to provide a personalized service to each individual. Anonymization is therefore not a universal solution to our problem.

Let us now focus on the second technical solution: encryption.

Cryptography is indeed a solution for privacy-preserving AI. The basic idea is simple: the data is encrypted with a scheme that allows it to be processed blindly, by a party that has no access to the decryption key. It seems like magic, but such cryptographic techniques exist: homomorphic encryption[3],[4], multi-party computation[5] or functional encryption, to name a few.
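To make the idea of computing “in blind” concrete, here is a minimal Python sketch of the oldest homomorphic property: textbook RSA is multiplicatively homomorphic, so a party holding only two ciphertexts can produce an encryption of the product of the plaintexts without ever decrypting. This is an illustration only, not one of the schemes cited above: the toy primes are far too small, unpadded RSA is insecure, and practical homomorphic encryption or multi-party computation works very differently. The names `encrypt`, `decrypt` and `blind_multiply` are hypothetical helpers written for this example.

```python
# Toy textbook-RSA parameters: far too small to be secure, illustration only.
P, Q = 61, 53
N = P * Q                            # public modulus (3233)
E = 17                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (2753); Python 3.8+


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N with the public key (N, E)."""
    return pow(m, E, N)


def decrypt(c: int) -> int:
    """Decrypt with the private exponent D (held only by the data owner)."""
    return pow(c, D, N)


def blind_multiply(c1: int, c2: int) -> int:
    """Run by a party that only sees ciphertexts and never learns the inputs."""
    return (c1 * c2) % N


c = blind_multiply(encrypt(6), encrypt(7))
print(decrypt(c))  # 42, as long as the true product stays below N
```

The party running `blind_multiply` only handles `encrypt(6)` and `encrypt(7)`; only the holder of `D` can read the result.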

But of course, this is not so simple.

First, if one simply feeds encrypted data to an existing AI algorithm, the algorithm will not work. The first step is therefore to rewrite the algorithm to make it “crypto-friendly”. This is a crucial step: if it is poorly done, the result will be poor and/or not useful enough. The processing of the encrypted data should be fast enough, and the final result should be as accurate as if the data were not encrypted.
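One typical “crypto-friendly” rewriting step is easy to show in isolation: most encryption schemes used for encrypted computation work on integers (modulo some large number), so real-valued inputs and model weights must first be encoded as fixed-point integers. The sketch below runs in plain Python without any encryption; it assumes a hypothetical precision of 8 fractional bits (`SCALE_BITS`) and shows how a dot product computed purely on integers approximates the floating-point result.

```python
SCALE_BITS = 8            # hypothetical precision: 8 fractional bits
SCALE = 1 << SCALE_BITS   # 256


def encode(x: float) -> int:
    """Map a real number to a fixed-point integer (what would be encrypted)."""
    return round(x * SCALE)


def decode(v: int, scale: int = SCALE) -> float:
    """Map a fixed-point integer back to a real number."""
    return v / scale


def dot_fixed(xs, ws):
    """Dot product using only integer additions and multiplications.

    Each product of two encoded values carries a factor SCALE**2,
    so the accumulated sum is decoded with that scale.
    """
    acc = sum(encode(x) * encode(w) for x, w in zip(xs, ws))
    return decode(acc, SCALE * SCALE)


xs = [0.5, -1.25, 2.0]    # e.g. input features
ws = [0.3, 0.7, -0.1]     # e.g. model weights
exact = sum(x * w for x, w in zip(xs, ws))
approx = dot_fixed(xs, ws)
print(exact, approx, abs(exact - approx))
```

Choosing this precision is one of the accuracy/performance trade-offs described above: raising `SCALE_BITS` reduces the rounding error but enlarges the integers, which in a real scheme means larger parameters and slower operations.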

Meeting these two requirements means addressing three aspects at the same time:

- the cryptographic specifications should be designed to be as efficient as possible, by cryptography experts;

- the cryptographic operations should be implemented with very good performance, using expert optimization techniques;

- the “crypto-friendly” rewriting of the algorithm to be executed on encrypted data should not decrease the accuracy of the result too much.

More importantly, these three aspects should be handled jointly to obtain the best possible result. To date, even though any algorithm can in theory be treated this way, there is no guarantee that the technique will work in practice for every algorithm. For some algorithms, it is not feasible today, since the overall result would be very poor and irrelevant for a real-world deployment. For others, the technology is already mature and a real deployment can be considered now. This is exactly the case for the two following examples, which have been studied and implemented. More precisely, in both cases, the training phase cannot be performed on encrypted data, as it requires far more computation than current cryptographic techniques can handle. However, the inference phase is definitely ready for the real world.

First, let us consider a multi-bank automatic spending classification. Any bank can build and use a model (e.g., a Bayesian network, as done here) to classify each of its clients’ transactions, i.e., to tell whether a transaction corresponds to spending related to, e.g., healthcare, multimedia, or food. Multi-bank classification services also exist (see, e.g., Bankin’ or Linxo), but these services have access to all of the end users’ transactions in plaintext, which clearly causes privacy leakage. Our idea is then for the end user to (i) locally aggregate the transactions coming from his/her different banks, (ii) locally encrypt this set of transactions, and (iii) send it to the classification service, which (iv) performs the classification on encrypted transactions only, using a previously trained Bayesian model. At the same time, the end user learns nothing about the model trained by the service provider: each actor thus protects its own sensitive inputs.
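The four steps above can be sketched with a simpler technique than the one used in the project: additively homomorphic (Paillier) encryption instead of multi-party computation. In this minimal Python sketch, the end user encrypts a binary feature vector describing one transaction, and the service computes a linear score per category on ciphertexts only, with integer weights it never discloses. The features, categories, weights and helper names are hypothetical, the key size is a toy, and, unlike a real protocol, the client here learns every category score, which leaks some information about the model; a real deployment would reveal only the winning category.

```python
import math
import random

# --- Toy Paillier key (primes far too small for real use) ---
P, Q = 1009, 1013
N = P * Q
N2 = N * N
LAM = math.lcm(P - 1, Q - 1)   # Python 3.9+
MU = pow(LAM, -1, N)           # valid because the generator is g = N + 1


def encrypt(m: int) -> int:
    """Paillier encryption of m (negative values are taken modulo N)."""
    while True:
        r = random.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m % N, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    m = ((pow(c, LAM, N2) - 1) // N) * MU % N
    return m if m <= N // 2 else m - N   # map back to signed integers


def add(c1: int, c2: int) -> int:
    """Enc(a), Enc(b) -> Enc(a + b)."""
    return c1 * c2 % N2


def scale(c: int, k: int) -> int:
    """Enc(a), plaintext k -> Enc(k * a)."""
    return pow(c, k % N, N2)


# (i)-(iii) Client: hypothetical binary features of one aggregated transaction,
# e.g. "the label contains a pharmacy keyword", encrypted before sending.
features = [1, 0, 1, 0]
enc_features = [encrypt(x) for x in features]

# (iv) Server: hypothetical integer weights, never sent to the client.
WEIGHTS = {"healthcare": [5, -1, 3, 0], "food": [-2, 4, 0, 1]}
BIAS = {"healthcare": -1, "food": 2}


def encrypted_scores(enc_x):
    out = {}
    for cat, w in WEIGHTS.items():
        acc = encrypt(BIAS[cat])
        for c, wi in zip(enc_x, w):
            acc = add(acc, scale(c, wi))
        out[cat] = acc
    return out


# Client: decrypts the scores and keeps the best category.
scores = {cat: decrypt(c) for cat, c in encrypted_scores(enc_features).items()}
print(scores, max(scores, key=scores.get))
```

Paillier is used here only because its additive property makes a linear score short to write; the PerSoCloud solution relies on multi-party computation.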

The basic idea behind the Bayesian network is to compute the probability that a transaction falls into each possible category, and then retrieve the most probable one. The solution developed in the ANR PerSoCloud project[6] is based on the cryptographic technique known as multi-party computation, together with a new patented solution for comparing two encrypted values. The Bayesian network was developed by Cozy Cloud[7], a member of the PerSoCloud consortium. Classifying a transaction takes about 2 seconds, which shows that such a service is already close to real deployment.
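For reference, the computation that has to be carried out on encrypted data can be written in plaintext. The sketch below uses a naive Bayes classifier, the simplest kind of Bayesian network; this is an assumption, since the network developed by Cozy Cloud is not described here. Working with log-probabilities turns the product of probabilities into a sum, which suits encrypted computation; the final step, picking the most probable category, requires comparisons, which is why comparing two encrypted values matters. The priors, likelihoods, keywords and the `UNSEEN` smoothing value are made up for illustration.

```python
import math

# Hypothetical model: category priors and per-category keyword likelihoods.
PRIORS = {"healthcare": 0.2, "food": 0.5, "multimedia": 0.3}
LIKELIHOODS = {
    "healthcare": {"pharmacy": 0.30, "doctor": 0.20, "market": 0.01},
    "food": {"pharmacy": 0.01, "doctor": 0.01, "market": 0.40},
    "multimedia": {"pharmacy": 0.01, "doctor": 0.01, "market": 0.02},
}
UNSEEN = 1e-3  # smoothing value for keywords absent from the table


def log_posterior_scores(words):
    """log P(category) + sum of log P(word | category): only additions."""
    scores = {}
    for cat, prior in PRIORS.items():
        s = math.log(prior)
        for w in words:
            s += math.log(LIKELIHOODS[cat].get(w, UNSEEN))
        scores[cat] = s
    return scores


def classify(label: str) -> str:
    """Return the most probable category: this argmax needs comparisons."""
    scores = log_posterior_scores(label.lower().split())
    return max(scores, key=scores.get)


print(classify("CB PHARMACY PARIS"))
print(classify("MARKET"))
```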

The second use case concerns electrocardiogram (ECG) signal classification, a key procedure for heart disease diagnosis. For several years now, the signal processing research community has studied and proposed AI algorithms that are both efficient and accurate for this purpose. These algorithms aim to help practitioners detect potential arrhythmias through efficient classification services offered by external providers. However, disclosing ECG data to third parties raises serious privacy concerns. Therefore, a recent area of research, at the intersection of the signal processing and cryptography fields, focuses on processing encrypted ECG data. While efficient implementations of ECG classification exist in the plaintext domain, it is not trivial to implement them in a privacy-preserving way. In the context of the H2020 PAPAYA project[8], a solution to this problem has been proposed. First, a new neural network dedicated to ECG classification was designed to be fully compatible with homomorphic encryption. The service then works as follows: (i) the practitioner encrypts the ECG signal and sends it to the service provider, (ii) the provider infers, in the encrypted domain, whether this ECG shows a kind of arrhythmia and (iii) sends the encrypted result back to the practitioner, who (iv) decrypts and displays the result before making a decision. The prototype shows promising accuracy and efficiency, requiring about 1200 ms to process 2048 heartbeats (~29 min of recordings) with 96.24% accuracy!
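Homomorphic encryption schemes natively evaluate additions and multiplications, so a network meant to run on encrypted inputs is usually restricted to polynomial operations; a common choice, popularized by CryptoNets, replaces activations such as ReLU with a square. The exact PAPAYA architecture is not described here, so the tiny network below is a hypothetical, plaintext-only sketch with made-up weights and input. It mirrors the protocol: the server part uses only additions and multiplications, and the argmax, which would be costly under encryption, is left to the practitioner after decryption.

```python
# Hypothetical tiny network: 4 input samples -> 3 hidden units -> 2 classes.
W1 = [[0.2, -0.1, 0.4, 0.0],
      [-0.3, 0.5, 0.1, 0.2],
      [0.1, 0.1, -0.2, 0.3]]
B1 = [0.0, 0.1, -0.1]
W2 = [[0.5, -0.4, 0.3],
      [-0.2, 0.6, 0.1]]
B2 = [0.0, 0.0]


def dense(W, b, x):
    """Fully connected layer: a weighted sum plus bias for each row."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi
            for row, bi in zip(W, b)]


def square(v):
    """Polynomial activation (a single multiplication), unlike ReLU."""
    return [vi * vi for vi in v]


def he_friendly_forward(x):
    """Server side (step ii): only additions and multiplications."""
    return dense(W2, B2, square(dense(W1, B1, x)))


def practitioner_decision(scores, labels=("normal", "arrhythmia")):
    """Practitioner side (step iv), after decryption: argmax in plaintext."""
    return labels[max(range(len(scores)), key=scores.__getitem__)]


beat = [0.1, 0.8, -0.3, 0.05]  # hypothetical normalized samples of one heartbeat
scores = he_friendly_forward(beat)
print(scores, practitioner_decision(scores))
```

The square keeps the multiplicative depth low (one extra multiplication per layer), which matters because each multiplication consumes part of a homomorphic scheme's noise budget.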

Conclusion

Artificial intelligence is a powerful technique that relies heavily on data to provide many services. In parallel, modern cryptography offers extremely powerful tools to protect such data and meet data protection requirements, particularly in the context of artificial intelligence. Combining these two technologies holds great promise for the future. It already addresses multiple use cases, but still needs time before it can be deployed universally.

Figure: Private extraction of Data Diamonds

[1] Six uses of AI that serve a more humane society

[2] Artificial Intelligence, between hopes and fears for Humankind

[3] Homomorphic encryption: the key to security

[4] Towards Video Compression in the Encrypted Domain: A Case-Study on the H264 and HEVC Macroblock Processing Pipeline

[5] https://eprint.iacr.org/2019/427

[6] Funded by the Agence Nationale de la Recherche (ANR)

[7] cozy.io

[8] Funded by the European Commission: www.papaya-project.eu