Delivering a service in virtualised networks

Network virtualisation has a huge impact on how both telecom infrastructure and services are managed. Here is an example illustrating some of the benefits.

A carrier wants to offer an augmented reality service to a warehouse manager in a factory. The end users of this service are, for example, factory technicians wearing connected glasses. The service consists of overlaying useful information about the products stored in the warehouse onto the view of the worker examining these products.


As part of this augmented reality service, the video streams coming from the connected glasses are transmitted to a processing server, which adds the useful information to the image. To do so, the processing server needs to access information stored in a database. The data is encrypted, so the service also needs a decryption function. The service in this example is therefore composed of three functions, each executed in a different container; this sequence of functions is called the “service chain”.

For this service to work correctly, without any lag between what the technician sees and the information displayed, the latency between the user and the application must stay below a set threshold (for example, 15 ms). If the processing time for the three functions is 5 ms, this leaves a maximum of 10 ms for communications between the three containers and the end user. The operator of this service will therefore enter into an SLA (Service Level Agreement) with the infrastructure provider, guaranteeing that this latency will not be exceeded for reasons directly related to the infrastructure (overload, failure etc.).

Deploying and managing such a service requires deciding where to place the elements of the service chain on the infrastructure, so that the entire chain complies with the relevant SLAs. The closer these elements are deployed to the user, the lower the communication latency. However, compute resources at the edge of the network are more limited and therefore generally more expensive. A compromise must therefore be found between investment cost and latency: ideally, the service is deployed at the lowest possible cost, with a latency low enough to comply with the SLA.
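To make the latency budget and the cost trade-off concrete, here is a minimal brute-force sketch. Apart from the 15 ms SLA and the 5 ms processing time, everything in it is a hypothetical assumption: the three sites (`edge`, `regional`, `central`), their per-container costs and latencies, the function names, and the latency model (user to the first function, along the chain, then from the last function back to the user). It enumerates every assignment of the three functions to sites and keeps the cheapest one that meets the SLA; it illustrates the problem, not the optimisation algorithms Orange developed.

```python
from __future__ import annotations

from itertools import product

# Hypothetical sites: cost per container and one-way latency to the user (ms).
SITES = {
    "edge": {"cost": 10.0, "user_latency_ms": 1.0},
    "regional": {"cost": 4.0, "user_latency_ms": 4.0},
    "central": {"cost": 1.0, "user_latency_ms": 9.0},
}

# Hypothetical one-way latencies between sites (ms), assumed symmetric.
INTER_SITE_MS = {
    frozenset({"edge", "regional"}): 3.0,
    frozenset({"edge", "central"}): 8.0,
    frozenset({"regional", "central"}): 5.0,
}

CHAIN = ["video_processing", "database", "decryption"]  # hypothetical names
SLA_MS = 15.0        # end-to-end bound agreed in the SLA
PROCESSING_MS = 5.0  # total processing time of the three functions


def link_ms(a: str, b: str) -> float:
    """Latency between two sites; containers on the same site are assumed free."""
    return 0.0 if a == b else INTER_SITE_MS[frozenset({a, b})]


def chain_latency_ms(sites: tuple[str, ...]) -> float:
    """Processing time plus user -> f1 -> f2 -> f3 -> user communication time."""
    comm = SITES[sites[0]]["user_latency_ms"] + SITES[sites[-1]]["user_latency_ms"]
    comm += sum(link_ms(a, b) for a, b in zip(sites, sites[1:]))
    return PROCESSING_MS + comm


def cheapest_placement() -> tuple[float, tuple[str, ...]] | None:
    """Enumerate every site assignment and keep the cheapest one meeting the SLA."""
    best = None
    for sites in product(SITES, repeat=len(CHAIN)):
        if chain_latency_ms(sites) > SLA_MS:
            continue  # this placement would breach the SLA
        cost = sum(SITES[s]["cost"] for s in sites)
        if best is None or cost < best[0]:
            best = (cost, sites)
    return best


if __name__ == "__main__":
    cost, sites = cheapest_placement()
    print(sites, cost, chain_latency_ms(sites))
    # ('regional', 'regional', 'regional') 12.0 13.0
```

With these made-up figures, the central site is too far from the user (9 ms each way) and the edge is too expensive, so the cheapest compliant placement puts all three functions on the regional site, for an end-to-end latency of 13 ms. Exhaustive enumeration grows as (number of sites) to the power (number of functions), which is why realistic instances call for optimisation models rather than brute force.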

In addition to the latency criterion, container placement must take into account the fact that infrastructure within a data centre is shared: the services deployed must have sufficient resources (compute, memory, storage) to run smoothly regardless of the server load.
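One way to guarantee resources regardless of server load is to reserve them at placement time and admit a container only if its reservation fits in the capacity not yet reserved. The sketch below assumes that reservation model (similar in spirit to resource requests in container orchestrators) and uses a simple first-fit heuristic; the `Resources` and `Server` types, the units and the server names are hypothetical, not part of the system described here.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Resources:
    cpu: float      # cores
    memory: float   # GiB
    storage: float  # GiB

    def __add__(self, other: Resources) -> Resources:
        return Resources(self.cpu + other.cpu,
                         self.memory + other.memory,
                         self.storage + other.storage)

    def fits_within(self, limit: Resources) -> bool:
        return (self.cpu <= limit.cpu and self.memory <= limit.memory
                and self.storage <= limit.storage)


@dataclass
class Server:
    name: str
    capacity: Resources
    reserved: Resources = field(default_factory=lambda: Resources(0, 0, 0))

    def can_host(self, request: Resources) -> bool:
        """True if the request fits in the capacity not yet reserved."""
        return (self.reserved + request).fits_within(self.capacity)

    def reserve(self, request: Resources) -> None:
        if not self.can_host(request):
            raise ValueError(f"{self.name} cannot host {request}")
        self.reserved = self.reserved + request


def first_fit(servers: list[Server], request: Resources) -> str | None:
    """Reserve the request on the first server with enough spare capacity."""
    for server in servers:
        if server.can_host(request):
            server.reserve(request)
            return server.name
    return None  # no server can guarantee these resources
```

Because admission is based on reservations rather than on the instantaneous load, a container that has been placed keeps its guaranteed share even when its neighbours become busy.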

Finally, these decisions must also take security into account, through criteria for isolating services.

Service isolation

Although the physical infrastructure is shared among all services, some services handling sensitive data need to be isolated. A service running on the same physical server as another may be able to access its data, in particular through “side-channel” techniques, which can be used to steal confidential data. In some cases, these attacks can go as far as “data poisoning” (the attacker modifies data that does not belong to them) or even attacks on the availability of the server's physical resources.

In the centralised cloud, it is theoretically possible to dedicate physical infrastructure to a service, because resources are plentiful. In the edge cloud, however, it is unrealistic to have dedicated physical infrastructure for every critical service, as this would require far too heavy an investment.

To remedy this, pseudo-isolation is implemented: sensitive services are physically isolated from services that do not offer sufficient security guarantees. To this end, each function of a service chain defines an isolation level (from 1 to 5) corresponding to its requirements, which can be specified in the contracted SLA. Level 5 is reserved for state-level services or those linked to legal obligations, level 4 for critical industrial applications (within the meaning of the NIS 2 Directive), and so on, down to level 1, intended for consumer services with no specific security needs.

In addition, each function of a service chain is assigned a trust level. This can be determined through a security assessment process, such as an audit, based for instance on the following criteria:

- Container image vulnerability scanning or audit,

- Service development process (programming language, coding practice, libraries used etc.),

- Service chain testing process.

The trust level is determined by the infrastructure provider. If the service owner does not want to submit its service to the security audit, the service is given the lowest possible trust level. As with the isolation level, the trust level is set on a scale from 1 to 5.
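The two per-function attributes and the default-trust rule can be captured in a small data model. This is a hypothetical sketch: `ChainFunction` and `make_function` are illustrative names, and `None` stands for a function whose owner declined the audit.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_LEVEL, MAX_LEVEL = 1, 5


@dataclass(frozen=True)
class ChainFunction:
    name: str
    isolation: int  # required protection, set in the SLA (1 = consumer, 5 = state level)
    trust: int      # assessed by the infrastructure provider (1 = lowest)


def make_function(name: str, isolation: int, audited_trust: int | None) -> ChainFunction:
    """Build a function; audited_trust is None if the owner declined the audit."""
    for level in (isolation, audited_trust):
        if level is not None and not MIN_LEVEL <= level <= MAX_LEVEL:
            raise ValueError(f"level {level} outside {MIN_LEVEL}..{MAX_LEVEL}")
    # No audit means the lowest possible trust level.
    trust = MIN_LEVEL if audited_trust is None else audited_trust
    return ChainFunction(name, isolation, trust)
```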

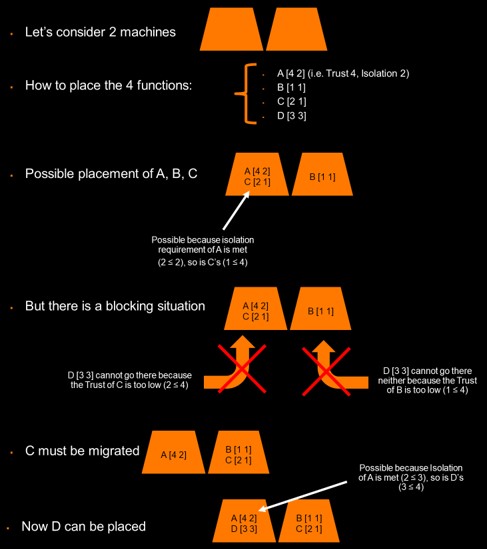

Service chain isolation can then be enforced by allowing two functions to be physically co-located only if a criterion based on their isolation requirements and trust levels is met. To give a simplified example, such a criterion could be that the trust level of one is higher than or equal to the isolation level of the other. This ensures that sensitive functions are only co-located with trusted ones.
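A minimal sketch of the simplified criterion, assuming it is checked in both directions for every pair of functions sharing a physical server (the text leaves the direction open). The `ChainFunction` type and the example functions are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class ChainFunction:
    name: str
    isolation: int  # 1..5, protection the function requires
    trust: int      # 1..5, how far the function itself is trusted


def compatible(a: ChainFunction, b: ChainFunction) -> bool:
    # Simplified criterion, applied in both directions (an assumption):
    # each function must be trusted at least as much as the other requires.
    return a.trust >= b.isolation and b.trust >= a.isolation


def valid_server(functions: list[ChainFunction]) -> bool:
    """True if every pair of functions on the same physical server is compatible."""
    return all(compatible(a, b) for a, b in combinations(functions, 2))
```

With this rule, an unaudited function (trust 1) can only share a server with functions whose isolation level is 1, while a level-4 function needs neighbours with a trust level of at least 4.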

This pseudo-isolation approach has several advantages over physical isolation. First, it helps to limit costs by not imposing a dedicated, static use of infrastructure resources for critical services. Second, this kind of security isolation is flexible: it can be deployed on demand to meet a specific, time-limited need. For instance, isolation can be set up while a maintenance operation is in progress, when the risk of security breaches is higher, and released once the operation is finished. Adapting infrastructure nodes to users' dynamic security needs in this way, based on physical co-location criteria, optimises infrastructure costs, since only the resources needed at a given moment are reserved for highly critical services. However, taking this type of constraint into account makes placing chain functions even more difficult, as shown in the example below.

Example of pseudo-isolation
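Time-limited isolation, such as the maintenance scenario described above, can be modelled by letting a function's isolation level be raised temporarily. In this hypothetical sketch, an `IsolationWindow` raises the level between two instants; an orchestrator would re-run its co-location check whenever `effective_isolation` changes. The names are illustrative only.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class IsolationWindow:
    start: datetime
    end: datetime   # exclusive: isolation is released at this instant
    level: int      # temporary isolation level requested for the window


def effective_isolation(base_level: int, windows: list[IsolationWindow],
                        at: datetime) -> int:
    """Isolation level in force at `at`: the base level, raised by any active window."""
    active = [w.level for w in windows if w.start <= at < w.end]
    return max([base_level, *active])
```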

Determining how to place, manage and orchestrate service chains so that all the constraints and needs of each service are taken into account, while minimising the resources used in the infrastructure, is therefore quite a challenge. This challenge is modelled and solved using techniques and methods from the field of mathematical optimisation, from which efficient algorithms are derived. Integrated at the heart of networks, these algorithms will automate service lifecycles while offering strong guarantees on the quality and security of these services and rationalising the use of resources.
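As an illustration of what such a model can look like, here is one textbook way to write service-chain placement as an integer program. It is a simplified sketch under stated assumptions, not the formulation from Orange's papers: $x_{f,n} = 1$ if function $f \in F$ is placed on node $n \in N$; $c_n$ is the cost of hosting a function on $n$; $r_f$ and $R_n$ are a single resource demand and capacity; $d_{n,m}$ is the latency between nodes, with $d_{n,n} = 0$; $E$ is the set of consecutive pairs in the chain, where the user $u$ is treated as an extra element fixed at its access node ($x_{u,n_u} = 1$) that appears only in $E$; and $L_{\max}$ is the communication budget (10 ms in the earlier example). Functions $f$ and $g$ are incompatible when $t_f < i_g$ or $t_g < i_f$, with $t$ the trust level and $i$ the isolation level.

```latex
\begin{aligned}
\min_{x}\quad & \sum_{f \in F} \sum_{n \in N} c_n \, x_{f,n} \\
\text{s.t.}\quad & \sum_{n \in N} x_{f,n} = 1 && \forall f \in F \\
& \sum_{f \in F} r_f \, x_{f,n} \le R_n && \forall n \in N \\
& \sum_{(f,g) \in E} \sum_{n \in N} \sum_{m \in N} d_{n,m} \, x_{f,n} \, x_{g,m} \le L_{\max} \\
& x_{f,n} + x_{g,n} \le 1 && \forall n \in N,\ \forall (f,g) \text{ incompatible} \\
& x_{f,n} \in \{0, 1\} && \forall f \in F,\ \forall n \in N
\end{aligned}
```

The latency constraint is quadratic in $x$; because $d_{n,m} \ge 0$, it can be linearised by replacing each product $x_{f,n} x_{g,m}$ with a variable $z_{f,g,n,m}$ subject to $z_{f,g,n,m} \ge x_{f,n} + x_{g,m} - 1$ and $z_{f,g,n,m} \ge 0$.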

Cross-cloud orchestration

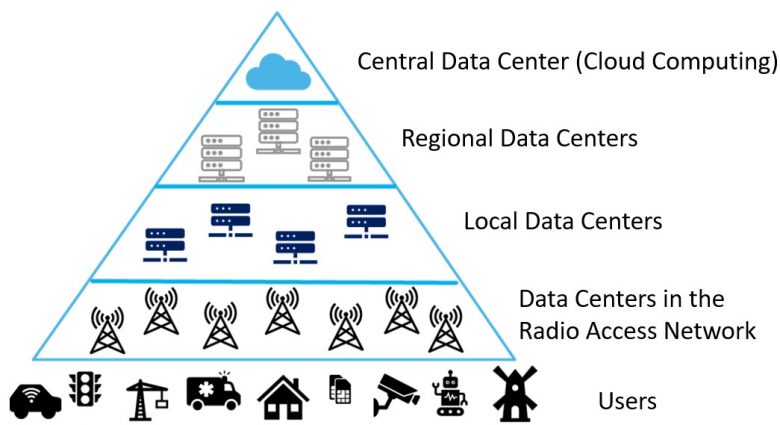

This article presents a research problem related to network virtualisation, namely how to place and orchestrate deployed services in the cloud-edge computing continuum, with very diverse constraints such as quality of service or security isolation. The cloud-edge computing continuum is broken down in the following figure:

Overview of the cloud-edge computing continuum

The orchestrator can run containers at the central level (which corresponds to cloud computing), or in data centres closer to the users (edge computing).

When the orchestrator decides where to place the functions of a service, it is not obliged to use data centres from a single provider. This opens up new possibilities for orchestrating services in a cross-cloud environment (i.e. one composed of data centres from different providers, including private clouds). The benefits are as follows:

- Orchestration can take the specific requirements of each service (SLAs) into account.

- Orange could act as a B2B (business-to-business) broker, selecting the most cost-effective deployment environment that is best suited to the services to be deployed.

- A Moving Target Defence (MTD) service becomes possible. Containers of a service that requires special security are moved regularly, in order to prevent potential attackers from gathering information about the execution environment. This makes any attack on these containers particularly difficult.
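A minimal sketch of the relocation step in a Moving Target Defence, assuming that `eligible` already contains only the nodes that satisfy the service's SLA and isolation constraints (possibly spread across several providers). The function and node names are hypothetical; a real MTD service would also have to migrate state and redirect traffic, which this sketch leaves out.

```python
from __future__ import annotations

import random


def next_location(current: str, eligible: list[str], rng: random.Random) -> str:
    """Pick a different eligible node at random; stay put if there is no other."""
    candidates = [node for node in eligible if node != current]
    if not candidates:
        return current
    return rng.choice(candidates)


def rotation_schedule(start: str, eligible: list[str], steps: int,
                      rng: random.Random | None = None) -> list[str]:
    """Successive locations of one container over `steps` moves."""
    if rng is None:
        # OS-backed randomness, so the next location is hard to predict.
        rng = random.SystemRandom()
    locations = [start]
    for _ in range(steps):
        locations.append(next_location(locations[-1], eligible, rng))
    return locations
```

Passing a seeded `random.Random` makes a schedule reproducible for testing; in operation, the default `random.SystemRandom` avoids a predictable sequence of locations.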

Conclusion

Orange has designed algorithms based on mathematical optimisation to determine the placement of containers while complying with all the requirements of this service (chaining, latency, security etc.). Orange then implemented these algorithms in a prototype multi-cluster orchestrator.

This approach, based on “demonstrable” tools, is of interest in the context of our work on the future certification of our infrastructures (EUCS/EU5G), in particular to address certain security needs that are difficult to establish using current state-of-the-art tools.

This work resulted in four scientific papers, and a prototype demo was presented at Orange Open Tech Days, Orange Gardens, in November 2023. The demo featured a dashboard for the edge computing platform showing, in real time, the load (CPU and memory) of the clusters, the communication latency (intra-cluster and inter-cluster) and the cost of using the clusters. The dashboard settings made it possible to monitor key performance indicators and, in particular, to observe the effect of a cluster's latency degradation on the deployed service chains, and then the restoration of the required SLA level through the migration of functions. The demonstration was presented to about 300 visitors over 3 days and generated a great deal of interest and inspiring discussions.
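The migration step shown in the demo can be sketched as follows, under simplifying assumptions: latencies are a snapshot of the measured values, `"user"` is a pseudo-node for the end user, only single-function migrations are tried, and the cheapest compliant one wins. `make_link_ms`, `restore_sla` and the cluster names are hypothetical; this is not the prototype's code.

```python
from __future__ import annotations

from typing import Callable

LinkFn = Callable[[str, str], float]


def make_link_ms(latencies: dict[tuple[str, str], float]) -> LinkFn:
    """Build a symmetric latency lookup (ms); 'user' is treated as a pseudo-node."""
    table = {frozenset(pair): ms for pair, ms in latencies.items()}

    def link_ms(a: str, b: str) -> float:
        return 0.0 if a == b else table[frozenset((a, b))]

    return link_ms


def chain_latency_ms(chain: list[str], placement: dict[str, str],
                     link_ms: LinkFn, processing_ms: float) -> float:
    """Processing time plus user -> each function in order -> user."""
    hops = ["user", *(placement[f] for f in chain), "user"]
    return processing_ms + sum(link_ms(a, b) for a, b in zip(hops, hops[1:]))


def restore_sla(chain: list[str], placement: dict[str, str], clusters: list[str],
                link_ms: LinkFn, cost: dict[str, float], sla_ms: float,
                processing_ms: float) -> dict[str, str] | None:
    """Return a placement meeting the SLA after at most one migration, or None."""
    if chain_latency_ms(chain, placement, link_ms, processing_ms) <= sla_ms:
        return placement  # SLA still met: nothing to migrate
    options = []
    for f in chain:
        for c in clusters:
            if c == placement[f]:
                continue
            candidate = {**placement, f: c}  # copy; the input is not mutated
            if chain_latency_ms(chain, candidate, link_ms, processing_ms) <= sla_ms:
                options.append((cost[c] - cost[placement[f]], candidate))
    if not options:
        return None
    # Cheapest single migration; ties go to the first function in the chain.
    return min(options, key=lambda option: option[0])[1]
```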

Further down the line, these placement algorithms will take energy consumption into account, so as to reduce the carbon footprint of services as much as possible. In addition, the demo will incorporate new cloud providers (Amazon Web Services, Google Cloud) to demonstrate the feasibility and relevance of a cross-cloud approach.

Glossary:

Container

A lightweight alternative to a virtual machine: a container virtualises only the software layers above the operating system, whose kernel it shares with the host.

Cloud-edge computing continuum

The continuous integration and orchestration of IT services between the centralised cloud and edge computing. This approach aims to optimise performance and security by processing data as close to its source as possible, while using cloud resources for more intensive or global tasks.

Cross-cloud

A platform composed of data centres from different providers including private clouds.

NIS 2 Directive

The European Parliament and the Council of the European Union have adopted the NIS 2 (Network and Information Security) Directive. This directive aims to increase the level of cybersecurity of major players in ten strategic business sectors.

Combinatorial optimisation

A branch of optimisation in applied mathematics whose goal is to find the best solution to problems involving a very large number of possible combinations of elements.

Orchestration

In a cloud context, orchestration refers to the automation of the management of SLAs (Service Level Agreements) — contracts between providers and customers that define the minimum level of service quality required by the customer, to which the provider commits. In our case, an SLA is established between a service provider and an edge cloud operator (e.g. Orange), who provides the cloud infrastructure on which these services are based.

Virtualisation

Creation of a virtual version of a computing resource such as a server, operating system, storage device or network resources. It makes it possible to divide a physical resource into multiple isolated virtual environments. This enables a more efficient use of resources.