Introduction

Over the past ten years or so, research on artificial intelligence (AI) has made considerable progress[2]. Fuelled by the web’s huge databases and ever greater computing power, machine learning methods have achieved spectacular results in image recognition, machine translation and natural language processing. In many sectors, the use of algorithms is transforming social activities and the organisation of work. AI-based systems are also becoming part of everyday life as voice assistants and social robots reach the consumer market. These technologies are increasingly used in sensitive fields such as administration, justice, health, security and social interactions. This is prompting hopes and fears in equal measure, and has contributed to the emergence of the field of “AI Ethics”. This field first emerged as a critique of algorithms seen as “black boxes” (Crawford 2021; Pasquale 2016), with the aim of increasing their “explainability” and the “transparency” of their goals. More recently, attention has also turned to the data used to train algorithms, to fairness and equity through bias correction, and to non-maleficence, responsibility and privacy (Jobin et al. 2019).

A wide range of discourses circulating in the public space help to make sense of AI: media stories, commentary, analyses, recommendations, warnings, expert reports and ethics guidelines. This public attention has even fed into public regulation, as shown by the recent draft regulation on artificial intelligence put forward by the European Union (the AI Act).

In this article, we focus on the processes through which AI has been publicised and on how discourses circulate among the scientific, media and political arenas. How has AI emerged as an object of public attention in France? How is this object shaping new public arenas, stakeholder networks and research funding methods? What are the main arguments made for and against the use of AI systems? Combining quantitative and qualitative methods, we studied various sites where controversies are produced: the press, public reports, scientific laboratories and private organisations (see the methods box). Quantitative network and text analysis tools allowed us to systematically process a large number of stakeholders and articles varying in tone and theme, in order to identify the structure of debates in the public sphere. Interviews with stakeholders in the controversies shed further light on the results of the quantitative analysis and help us understand how journalists and scientists have perceived, and contributed to, the publicising of AI.

The media arena: a discourse of disruption

Our study of the media arena is based on a very large corpus of articles (35,237) taken from the main national and regional daily newspapers, as well as a few weekly magazines, between 2000 and 2019. This corpus provides an unprecedented insight into nearly twenty years of media treatment of AI in France.

The first finding is that the number of articles published per year grew exponentially over this period. There were 700 articles in 2000, and the figure had risen more than tenfold by 2018, to 7,288 articles. This surge was due in part to the publication of the Villani report “Donner un sens à l’intelligence artificielle” (“Giving meaning to artificial intelligence”)[3]. The growing presence of debates on artificial intelligence in the general-interest press is one of the most immediate signs that they have spread beyond specialist circles. The media play a central role in publicising the questions and troubles affecting the various stakeholders in AI: producers, researchers and users. They also help to establish a framework for the reception of these technologies and to put new questions on the public policy agenda.

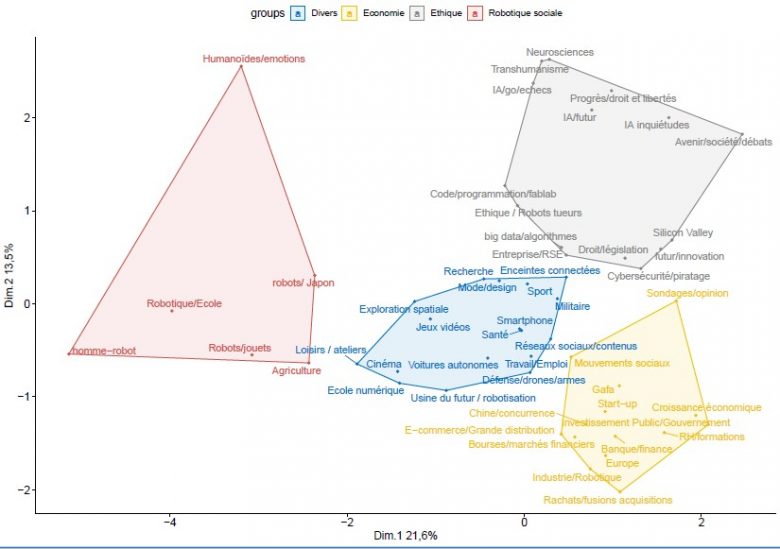

A more detailed thematic analysis of the corpus shows that the ways of talking about AI have changed over time. A topic model built to identify the main issues covered in the press reveals four broad topics of debate (see Figure 1).

Figure 1 – The four topics of debate

The first topic is significantly overrepresented in the regional press. It focuses primarily on human-machine interaction and the deployment of robots in schools or hospitals. It is closely linked to a second, relatively central topic, which looks at the sector-specific implications of AI, particularly in industry. Taking more of a macro view, the third topic concerns the economic stakes of AI, particularly growth and commercial independence from giants such as China and the United States. The fourth and final topic deals with the ethical issues surrounding AI, from privacy protection to algorithmic discrimination. Its concerns focus on current uses of these technologies but, above all, on developments to come. This ethical dimension grew in importance from the mid-2010s onwards, becoming a dominant way of framing questions about artificial intelligence.

Ultimately, what dominates in the press is a discourse of disruption. It makes liberal use of popular science fiction references to emphasise the sweeping transformations that AI innovations are expected to bring. The interviews with journalists show how closely this discourse is bound up with the strategies and constraints specific to the media arena. There, journalists must constantly find ways to interest their readers, and above all their editors, in often complex technical issues. In the process, newspapers sometimes parrot the AI marketing discourse developed by the Big Five tech giants. This discourse is designed to fascinate and to spark a craze for these technologies, which are increasingly built into everyday objects, from smartphones to smart speakers.

The scientific arena: a discourse of continuity

In contrast to this media discourse of spectacular disruption, the scientists interviewed primarily offer a discourse of continuity. They question the very notion of AI, which they consider too vague and polysemic. Their accounts describe the progressive, cumulative development of a family of technologies that would later be grouped together under the label “AI”. It is a long, complex history, made up of gradual and diffuse advances in computer science and neighbouring fields rather than spectacular discoveries. This history interweaves two approaches rather than setting them against each other. Symbolic AI is based on simulating human reasoning as the manipulation of symbols. Connectionist AI is based on artificial neural networks loosely modelled on the functioning of biological neurons. For the AI researchers interviewed, the opposition between these two approaches was constructed by certain commentators, particularly from industry. These commentators presented it as the driver of scientific progress and hailed the recent victory of the connectionist approach. In reality, the rise of connectionist AI is above all driven by a convergence of favourable technological developments: greater computing power and the availability of training data from the web.

Scientists in the field strongly criticise the media discourse of technological disruption behind the “AI wave”. For them, it is an artifice. The media and political buzz surrounding AI reflects not the reality of scientific discoveries but a media framing based on the “next big thing” principle:

“In the journalistic arena, it’s all about the latest craze. Five years ago, 3D printers were the topic everyone was talking about. […] Back then, journalists thought that was the thing that was going to change the world, then it was rendered somewhat obsolete by the Bitcoin and blockchain craze, which in turn was forgotten about when the obsession with AI arrived. I don’t know what the next one will be. I don’t think it reflects a scientific or intellectual reality. It’s very much bound up with the way politics and the media operate.” (Interview with a computer scientist)

A second major finding of the qualitative analysis is that the media and scientific arenas are writing competing timelines, both for AI itself and for questions concerning its ethical framework.

For the scientific community, the event that launched the current wave of connectionist AI came in 2012. That year, researchers developed an image processing system based on neural networks that outperformed conventional systems. It was presented by Geoffrey Hinton and his team from the University of Toronto at the European Conference on Computer Vision, and it beat all other image recognition algorithms:

“So, people started to talk about it, but it was scientific, and it was above all the vision community who were talking about it at the time.” (Interview with a robotics expert)

And yet it was only in 2016 that AI entered the public sphere. There was no corresponding scientific breakthrough at that point. There was, however, extensive media coverage of certain technical feats. One example was the 2016 victory of the AlphaGo system, developed by Google DeepMind, over world Go champion Lee Sedol, which was streamed live on YouTube:

“AlphaGo, an AI system, winning against the world Go champion caused a media sensation, because it was a highly symbolic moment. […] Journalists rushed to their phones to call artificial intelligence experts to ask them what was going on. […] I think that was a pivotal moment. Before, it was a subject that interested only certain groups, or no one at all, but that event attracted the attention of the mainstream media.” (Interview with a robotics expert)

“I think the media obsession with AI began with AlphaGo’s victory over Lee Sedol, which probably received excessive media coverage compared to Deep Blue’s victory over Kasparov.” (Interview with a computer scientist)

In the experts’ view, the media coverage that brought AI into the public sphere was driven largely by digital companies seeking to monetise their data and promote their services:

“All the companies that were digital tech companies have become – well, actually they have remained what they were – but they have been labelled ‘artificial intelligence companies’.” (Interview with a robotics expert)

The chronological gap between the media and scientific arenas also affects the treatment of ethical matters. AI Ethics only became a subject of coverage in the French press in 2015 (see above). Yet it has been of great interest to robotics researchers since the 2000s, notably because of advances in autonomous weapons and human-machine interaction:

“Because the field has reached maturity, there are things that are no longer research subjects, but possible applications, even though their development is not necessarily that well advanced. So, people are wondering about their impact on society and on human beings. Before, we were working, we had robots in the labs, and we were thinking of maybe sending a robot to Mars.” (Interview with a robotics expert)

This happened well before the “AI wave” driven by promotional discourse and the subsequent proliferation of ethics guidelines. The First International Symposium on Roboethics was organised by the Scuola di Robotica and held in Sanremo, Italy, in 2004. In France, robotics researchers have been working on the ethics of robotics research since 2012, within Allistene’s Committee for the Ethics of Research in Information Sciences and Technologies (CERNA) (see CERNA 2014).

The emergence of ethical reflection on AI in the scientific arena therefore long predates the media coverage of progress in machine learning and neural networks, which came some ten years later. The “ethics” of AI thus appears as a vector through which controversies circulate between the scientific, media, economic and political arenas.

The political arena: a discourse of ethics

Compared with the media and the experts, the political world was relatively late to engage with the controversies surrounding artificial intelligence. From 2017 onwards, however, several public institutions published reports assessing both the potential and the risks of these new technologies. These included the French Parliament, the French Data Protection Authority (CNIL), the Prime Minister and the European Union. The most emblematic is the Villani report “Giving meaning to artificial intelligence”, which drew on no fewer than 407 expert contributions. Published in March 2018, this report paved the way for a vast investment plan for the development of artificial intelligence in France. It also prefigured a government commitment to take account of the ethical stakes of AI. This commitment is reflected in the launch of the Tech for Good network, which brings entrepreneurs and politicians together to develop responsible technology for worthy causes.

Discussion of AI controversies in the political arena therefore centres on ethical issues, as a way of approaching the various troubles emerging in the public sphere. Public reports draw and build on the ethical thinking developed in the scientific arena since the early 2000s, turning it into a public policy instrument. This circulation owes much to the involvement of leading researchers from public and private laboratories in drafting these reports. Examples include Cédric Villani, whom we have already mentioned, as well as Nozha Boujemaa (INRIA) and Raja Chatila (ISIR-CNRS). Rather than imposing binding regulations on AI, the reports take an ethical approach, advocating softer, more flexible regulation that does not hinder technological innovation. The aim is to appeal to the sense of responsibility of economic and scientific players. They are expected to guarantee the reliability of AI systems and ensure respect for civil rights and liberties, through shared guidelines or new internal assessment tools. This ethical discourse also spreads principles such as the “transparency” and “loyalty” of algorithms[4] as new imperatives for the development of artificial intelligence. However, these principles have proved difficult to evaluate in practice. One study examined the interactions triggered within an organisation by the Assessment List for Trustworthy AI proposed by the European Union. It shows how hard staff, whether lawyers or engineers, find it to translate these new and often abstract requirements into their practices.

More generally, the politicisation of ethical issues makes them the subject of battles between scientists, policymakers and AI producers. Manufacturers have played a major part in inflating ethical discourse in the public sphere. Now, however, they fear new regulations that could restrict the industrial development of artificial intelligence. The conflict therefore hinges as much on who is most legitimate to set ethical standards as on how these standards should be applied (soft law[5], technical solutions or new regulations). AI has emerged as an object of public attention, and “AI Ethics” has been established as a category of public action. Both developments are accompanied by a reconfiguration of scientific careers, with new traffic between the public and private sectors. They are also accompanied by a shift of public funding towards projects that juggle economic, political and scientific interests.

Conclusion

The combination of quantitative and qualitative methods used in this study offers a new angle on a much-debated subject. It traces the subject's development over time and its many facets, which feed the uncertainty and confusion that recent developments in AI create among the general public. The rise of AI as a topic of media discussion owes much to real advances in machine learning. The inflation of discourse, however, is above all the result of stakeholders mobilising in the public sphere. It is the AI producers themselves who, amid ever fiercer competition, have encouraged the spread of an ambiguous discourse of technological disruption about the potential of the new systems. This discourse is at once enthusiastic and anxious. Translated into media language, this communication by big tech companies contributes to the circulation of controversies on AI from scientific arenas to the public media sphere.

The growing controversies surrounding artificial intelligence have ultimately driven the emergence of “AI Ethics” as a new “public issue”. The development of AI is presented as bringing many risks. These are to be addressed by formulating ethical principles and implementing them within work teams and organisations.

The emergence of AI Ethics as a public issue is the fruit of multiple mobilisations. These began within the scientific arena itself and then, little by little, spread to its periphery, with the appearance of hybrid research centres calling on both public and private researchers to produce ethical recommendations. Nevertheless, the emergence of this public issue is far from complete, and the action programmes remain uncertain. Ethical discourse brings various stakeholders into conflict not only over the reality of the dangers but also over how to respond to them.

This study offers several lessons for Orange. Understanding the way we talk about AI means understanding the expectations and fears it provokes. Above all, it means understanding how these worries and enthusiasms are constructed through the intervention of multiple players over time. Analysing the discourses present in the public sphere reveals how the meaning of AI is socially constructed. It shows stark oppositions between stakeholders and between visions of technology, which suggests that we should abandon a monolithic approach to artificial intelligence and its ethical framework.

[1] One testimony to this dynamic is the open letter signed by more than a thousand experts, and the media hype that accompanied it. The letter warned of the “major risks for humanity” that AI could pose and called for a six-month pause in the development of systems more powerful than GPT-4 (“Pause Giant AI Experiments: An Open Letter”, https://futureoflife.org/open-letter/pause-giant-ai-experiments, accessed 07/07/2023).

[2] For a detailed version of this research, see: Bellon, Anne, and Velkovska, Julia, “Artificial intelligence in the public space: from the scientific field to the public problem. Investigation into a controversial publicization process”, Réseaux, 2023/4 (No. 240), https://www.cairn.info/revue-reseaux-2023-4-page-31.htm

[3] All the signs suggest that this growth has since slowed slightly and that the AI “media bubble” is shrinking. Some 7,635 articles were still published in 2019, but only 4,618 in 2020 (the Covid year) and 5,137 in 2021.

[4] According to the French Data Protection Authority (CNIL), “a loyal algorithm should not have the effect of causing, reproducing or reinforcing any discrimination of any kind, even without its designers’ knowledge”.

[5] Soft law is made up of tools and systems comparable to rules that aim to change or guide behaviour without creating binding obligations.

Methods:

- Quantitative aspect: analysis of the French written press over 20 years (2000-2020) (n = 35,237), using quantitative textual and network analysis.

- Qualitative aspect: 17 interviews with stakeholders in controversies surrounding AI: 11 scientists (robotics, computer science, cognitive sciences, philosophy), 4 journalists, 1 executive in a tech company, 1 senior civil servant (2019-20)

- Documentation: 13 public reports from scientific organisations (CERNA, IEEE) or political/administrative organisations (OECD, CNIL, EU) in France and worldwide (2006-2019).

Sources:

- AI Act – https://artificialintelligenceact.eu/fr

- Bellon, A. (2020). “L’intelligence artificielle en débat. De la bulle discursive au problème public”. Orange Labs research report.

- CERNA (2014). Éthique de la recherche en robotique. http://cerna-ethics-allistene.org/digitalAssets/38/38704_Avis_robotique_livret.pdf

- Crawford, K. (2021). Atlas of AI. Power, politics and the planetary costs of Artificial Intelligence. New Haven and London: Yale University Press.

- Jobin, A., Ienca, M., and Vayena, E. (2019). “The global landscape of AI ethics guidelines”. Nature Machine Intelligence, vol. 1, no. 9, 389–399.

- Pasquale, F. (2016). The Black Box Society: The Secret Algorithms That Control Money and Information. Cambridge, MA: Harvard University Press.

- Schiff, D., Biddle, J., Borenstein, J., and Laas, K. (2020). “What’s Next for AI Ethics, Policy, and Governance? A Global Overview”. Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society (AIES ’20), 153–158.

Read more:

- Beaudouin, V. and Velkovska, J. (eds.) (2023). “‘Éthique de l’IA’ : enquêtes de terrain”. Réseaux no. 240.

- Bellon, A. and Velkovska, J. (2023). “L’intelligence artificielle dans l’espace public : du domaine scientifique au problème public. Enquête sur un processus de publicisation controversé”. Réseaux no. 240.

- Beaudouin, V. and Velkovska, J. (2023). “Enquêter sur l’‘éthique de l’IA’”. Réseaux no. 240.
