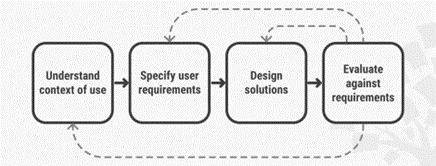

Understanding learning-based AI, especially deep learning, is highly challenging, in part because the models it produces are so-called “black boxes”. These difficulties affect how AI systems (AIS) are used. For example, a physician may want to understand why an AIS has diagnosed a certain disease or recommended a treatment. A customer service agent may want to understand why an AIS recommends making a particular offer to a customer. In situations like these, the aim is to generate explanations and provide them to the people concerned. This is the purpose of explainability, a field that seeks ways to account for, or explain, the results produced by AIS [Explainability of artificial intelligence systems: what are the requirements and limits?]. A wide range of methods now exists for generating such explanations [How to make AI explainable?]. But how should the explanations that end users need be produced? The user-centred design approach (Figure 1), developed in ergonomics and human-machine interaction and long used to design interactive systems in many fields, has proved particularly relevant here, and it is increasingly used in research on AI explainability across different fields [1].


Figure 1. The User-Centred Design Approach [2]

The first step, analysing the context of use, is essential for identifying the prospective recipients of explanations (who are they?) and what they need from these explanations (for which activities will they need explanations? To do what? When? For which AIS would it be most useful to generate explanations?). For any AIS intended for use in a work environment, understanding these activities is fundamental to determining appropriate explanations. Different data collection methods can be used for this purpose (interviews, observations, literature reviews). This phase is also crucial for tailoring explanations to a specific target user. For example, for an AIS that recommends a product to a customer, a technical explanation intended for a data scientist would not suit a customer service agent. The agent would gain little from an explanation listing every variable that might have influenced the AIS's recommendation; relaying two or three key factors may be enough.
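As an illustration of tailoring the level of detail to the recipient, the sketch below assumes that the AIS already provides a contribution score per variable (the variable names, values and audience labels are all hypothetical). The data scientist receives the full ranked list, while the customer service agent receives only the top few factors.

```python
# Hypothetical per-variable contributions for one product recommendation
# (positive values push towards recommending the offer, negative against).
CONTRIBUTIONS = {
    "months_as_customer": 0.42,
    "recent_support_calls": -0.31,
    "current_plan_price": 0.18,
    "age_bracket": 0.05,
    "region": -0.02,
}


def explanation_for(audience, contributions, k=3):
    """Return (variable, contribution) pairs ranked by absolute influence.

    A data scientist sees every variable; a customer service agent sees
    only the k most influential ones. The audience labels are illustrative.
    """
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    if audience == "data_scientist":
        return ranked
    if audience == "customer_service_agent":
        return ranked[:k]
    raise ValueError(f"unknown audience: {audience}")


print(explanation_for("customer_service_agent", CONTRIBUTIONS))
# [('months_as_customer', 0.42), ('recent_support_calls', -0.31), ('current_plan_price', 0.18)]
```

The appropriate value of `k`, and the choice of which audiences exist, are exactly the things the context-of-use analysis is meant to establish, so they should not be fixed in advance.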

After the context has been analysed, the second step is to specify exactly which explanations are necessary (or potentially useful) for the different recipients. These explanations can take various forms: contrastive (why one prediction was made rather than another), counterfactual (showing how changing a variable would alter the prediction), example-based, or based on the weighting of the contributions of different variables. The type of explanation must then be chosen according to the context of use and the users' requirements. This step also involves specifying how the explanations will be provided (How should they be presented? How detailed should they be? How will users access them? Through what kind of user interface?). This can be done by constructing usage scenarios that illustrate potential uses of the explanations.
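Two of these forms can be made concrete on a deliberately simple, hypothetical linear scoring model (the weights, threshold and variables below are assumptions for illustration, not a real AIS). Contribution weighting measures how far each variable moves the score relative to a baseline customer; a counterfactual answers "what value of this variable would have changed the outcome?".

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * value).
# The offer is recommended when the score reaches THRESHOLD.
WEIGHTS = {"months_as_customer": 0.05, "recent_support_calls": -0.4, "monthly_spend": 0.02}
BIAS = -1.0
THRESHOLD = 0.0


def score(customer):
    return BIAS + sum(WEIGHTS[name] * customer[name] for name in WEIGHTS)


def contributions(customer, baseline):
    """Weighting of contributions: how far each variable moves the score
    away from that of a reference (baseline) customer."""
    return {name: WEIGHTS[name] * (customer[name] - baseline[name]) for name in WEIGHTS}


def counterfactual(customer, name):
    """Counterfactual: the value of `name` at which the score would exactly
    reach THRESHOLD, all other variables held fixed (exact for a linear model)."""
    if WEIGHTS[name] == 0:
        raise ValueError(f"{name} has no influence on the score")
    gap = THRESHOLD - score(customer)
    return customer[name] + gap / WEIGHTS[name]


customer = {"months_as_customer": 10, "recent_support_calls": 3, "monthly_spend": 40}
baseline = {"months_as_customer": 0, "recent_support_calls": 0, "monthly_spend": 0}
print(score(customer) >= THRESHOLD)                    # False: not recommended
print(contributions(customer, baseline))
print(counterfactual(customer, "months_as_customer"))  # about 28
```

Read as a counterfactual, the last line says: had this customer been with the company for about 28 months rather than 10, the offer would have been recommended. For real black box models neither quantity is exact like this, which is one reason the choice of explanation type depends on the model as well as on the user.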

The third step involves designing the explanations themselves and the medium through which they will be accessed: the user interface (how explanations are formatted, how users interact with them, etc.). The large body of knowledge on designing human-machine interaction (HMI) and user interfaces can be drawn on during this phase. At this stage, a mock-up or prototype of how the explanation will be presented is produced. The goal is therefore not only to develop the explanations, but also to design the user interface that communicates them and through which users interact with them.
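Even a throwaway text mock-up can help test presentation choices early, such as how much to show by default and how to reveal more on request. The sketch below is a hypothetical low-fidelity prototype of an explanation panel for a customer service agent; the wording, the collapsed/expanded behaviour and the factor labels are assumptions to be validated with users, not a recommended design.

```python
def render_panel(recommendation, factors, show_details=False):
    """Render a plain-text mock-up of an explanation panel.

    `factors` is a list of (label, direction) pairs, already ranked and
    worded for the target user; direction is "+" (supports the offer) or
    "-" (weighs against it). The collapsed view shows only the top factor;
    the expanded view lists them all.
    """
    lines = [f"Suggested offer: {recommendation}"]
    shown = factors if show_details else factors[:1]
    for label, direction in shown:
        effect = "supports this offer" if direction == "+" else "weighs against this offer"
        lines.append(f"  - {label} ({effect})")
    if not show_details and len(factors) > 1:
        lines.append(f"  [Show more reasons ({len(factors) - 1})]")
    return "\n".join(lines)


factors = [("Customer for over two years", "+"), ("Three support calls this month", "-")]
print(render_panel("Premium plan", factors))
print(render_panel("Premium plan", factors, show_details=True))
```

Separating the explanation content (`factors`) from its rendering makes it easy to try alternative layouts in user tests without touching the explanation method itself.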

In the fourth step, the result of the previous step (the mock-up or prototype of the explanation tool) is evaluated. Various evaluation methods exist, the most fundamental being user testing, most often performed in a laboratory setting. Testing involves exposing potential users to a system so that it can be evaluated against defined criteria, such as usability or usefulness. Depending on the context of use, explainability may be evaluated against criteria such as:

- The suitability of the explanations for their intended use (the purpose of the explainability): for example, to what extent do the explanations allow the recipient to achieve their goals (such as understanding why a system diagnosed a particular disease, or why it suggests making a particular sales offer to a customer)?

- The tailoring of explainability to recipients: for example, to what extent are the explanations (in particular their content) tailored to recipients, such as to their specific expertise?

- The intelligibility of the explanations provided: for example, to what extent can they be understood and interpreted by the target recipients? What is the “cognitive cost” needed to understand them?

- Trust in the system: for example, to what extent does the recipient consider the explanations provided to be reliable or accurate, and what impact do they have on the user's trust in the system?

- Understanding how the system works: for example, to what extent do the explanations promote a more comprehensive understanding of the system? Is such an understanding useful or even necessary?

User tests can be carried out using specific questionnaires. One example is the System Causability Scale [3]. In this approach, “causability” refers to the extent to which an explanation of an AI system's output achieves a specified level of causal understanding for a user, with effectiveness, efficiency and satisfaction in a specified context of use. Broader usability scales can also be used [4].
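Questionnaire scores are simple to compute once responses are collected. The sketch below assumes the commonly described scoring of the System Causability Scale (ten items on a 1 to 5 Likert scale, total divided by 50) and, as an example of a broader usability scale, the System Usability Scale (SUS); whether SUS is the scale cited as [4] is an assumption. Check both against their original publications before relying on these functions.

```python
def _check(ratings):
    if len(ratings) != 10 or any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("expected ten ratings on a 1-5 scale")


def scs_score(ratings):
    """System Causability Scale: ten items rated 1-5, summed and divided by
    the maximum possible total of 50 (result between 0.2 and 1.0)."""
    _check(ratings)
    return sum(ratings) / 50


def sus_score(ratings):
    """System Usability Scale: odd-numbered items are positively worded and
    contribute (rating - 1); even-numbered items are negatively worded and
    contribute (5 - rating). The total (0-40) is scaled to 0-100."""
    _check(ratings)
    total = sum(r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(ratings))
    return total * 2.5


# One participant's (made-up) answers, in questionnaire order.
print(scs_score([4, 5, 4, 3, 4, 4, 5, 4, 3, 4]))  # 0.8
print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))  # 85.0
```

In practice these per-participant scores would be aggregated across participants and interpreted alongside qualitative observations from the same sessions.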

While user tests are essential to the evaluation, tests performed outside real-life use (for example, in a laboratory) are not sufficient on their own. It is also important to carry out real-world evaluations over long periods. For example, it may be valuable to study how providing explanations affects users' trust in the system over time.
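For a longitudinal study, one simple summary is the trend in trust ratings across repeated evaluation sessions. The sketch below assumes trust is measured by a rating collected from users at each session (the 1 to 7 scale and the data are made up) and fits a least-squares slope to the session means.

```python
from statistics import mean


def trust_trend(sessions):
    """Least-squares slope of the mean trust rating across successive
    evaluation sessions (change in mean rating per session).

    `sessions` holds one list of ratings per session, in chronological order.
    A positive slope suggests trust is rising; it does not show whether that
    trust is warranted by the system's actual reliability.
    """
    if len(sessions) < 2:
        raise ValueError("need at least two sessions to estimate a trend")
    ys = [mean(ratings) for ratings in sessions]
    xs = list(range(len(ys)))
    x_bar, y_bar = mean(xs), mean(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


# Made-up ratings on a 1-7 scale from the same users at months 1, 2 and 3.
print(trust_trend([[3, 4, 4], [4, 4, 5], [5, 5, 6]]))
```

A rising trend is not automatically a good outcome: the aim is trust calibrated to how reliable the system actually is, so such a metric is best read alongside measures of when users follow or override the AIS.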

Involving the target recipients of the explanations at each stage of the user-centred design process is also key. This involvement can take different forms, from meeting potential recipients to understand what they do and jointly define what they need from explanations, to showing them interim results (mock-ups or prototypes) or enlisting them for user tests. It is also important to recognise that explainability is only one aspect of interacting with an AIS: it only makes sense for an AIS that is (potentially) useful for the intended activities and that functions reliably.

Finally, from a technical standpoint, caution should be exercised with regard to the explanations that can be obtained; they could be misleading or incomplete. It is therefore important to ensure that the explanations are reliable and to clearly define their limitations in terms of explaining how an AIS functions, particularly for black box models. Choosing the right type of explainability technique is therefore crucial.
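One way to probe the limits of an explanation is to compare what it predicts with what the model actually does. The sketch below uses a made-up nonlinear function as a stand-in for a black box, builds a local linear explanation from finite-difference slopes (similar in spirit to gradient-based attributions, though not any specific library's method), and measures the gap between predicted and actual output changes.

```python
import math


def black_box(x1, x2):
    # Stand-in for an opaque model: a nonlinear interaction between inputs.
    return 1 / (1 + math.exp(-(3 * x1 * x2 - 1)))


def local_slopes(f, point, eps=1e-4):
    """Local linear explanation: per-variable slopes of f at `point`,
    estimated with central finite differences."""
    slopes = []
    for i in range(len(point)):
        up = list(point)
        down = list(point)
        up[i] += eps
        down[i] -= eps
        slopes.append((f(*up) - f(*down)) / (2 * eps))
    return slopes


def fidelity_error(f, point, delta):
    """Gap between the output change the explanation predicts for a move
    `delta` and the change the model actually produces."""
    slopes = local_slopes(f, point)
    predicted = sum(s * d for s, d in zip(slopes, delta))
    moved = [p + d for p, d in zip(point, delta)]
    actual = f(*moved) - f(*point)
    return abs(actual - predicted)


point = (0.5, 0.5)
print(fidelity_error(black_box, point, (0.01, 0.0)))  # small change: tiny error
print(fidelity_error(black_box, point, (1.0, 1.0)))   # large change: about 0.18
```

The same explanation is faithful for small changes and misleading for large ones, which is the kind of limitation that should be stated explicitly to recipients.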

Conclusion

User-centred or, more broadly, human-centred design is an approach that has become highly important for research on explainability and artificial intelligence at large.

The main challenges in explainability are to ensure that AIS:

- are intelligible and are tailored to users, their goals and their activities,

- are as usable as possible and do not cause harm, whether by disrupting those activities or by affecting users or anyone indirectly impacted by the use of these systems.

An AI model is a program that has been trained on a dataset to perform tasks, such as categorising images or sounds, providing estimates or producing content. The model is the program produced after training. A model is deemed a black box model when its workings are opaque and difficult to understand.

Usability: the degree to which a system can be used by identified users to achieve defined goals with effectiveness, efficiency and satisfaction in a specified context of use.