Network APIs: The New Frontier of Digital Innovation

• Through APIs, networks now provide access to data that can enrich the customer experience.

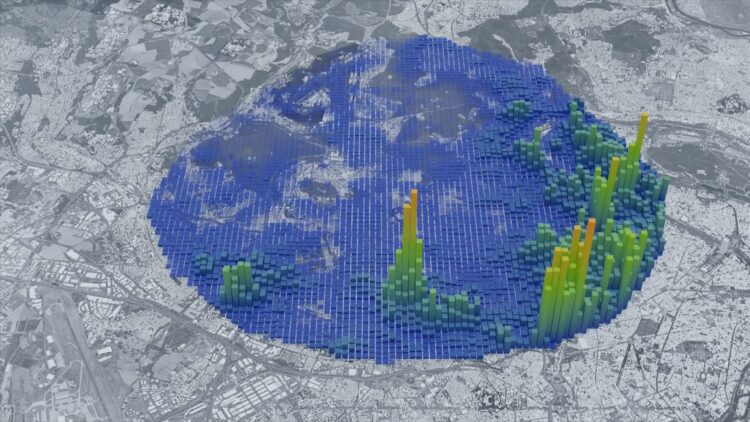

• For example, the Population Density Data API, launched by Orange LiveNet, builds on the FluxVision service and helps optimize the planning of services of global and public interest (urban planning, transportation, retail).

• Together with the APIs already available at https://developer.orange.com and others coming soon, this API allows third-party players to offer new services.

Read the article

A demonstration at MWC of secure, seamless mobile use of generative AI, with video-based compliance analysis

Read the article