A lexicon of artificial intelligence: understanding different AIs and their uses

• AI first learned to reason with symbolic (human-readable) rules. In the form of statistical and connectionist models, it has since acquired the capacity to recognize patterns in data and, via deep learning, to develop remarkable skills.

• We are now seeing the emergence of complex AIs, notably generative, multimodal, agentic and adaptive systems, which combine modes of perception and operate with increasing autonomy to accomplish a growing range of varied, contextualised tasks. All of these innovations are bringing us closer to the as-yet theoretical goal of a general AI, which would be able to reason and reproduce human cognition.

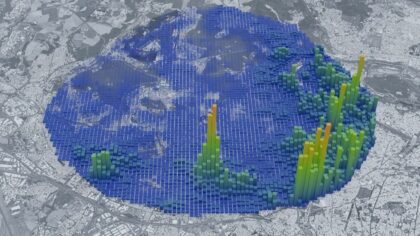

• This lexicon lists, in order of appearance, the different types of artificial intelligence and modes of interaction with AI that are now being integrated into the architecture of complex systems. Orange uses all of these variants and interfaces in several hundred telecom operator use cases to improve network quality, enhance customer experience and boost operational efficiency.

