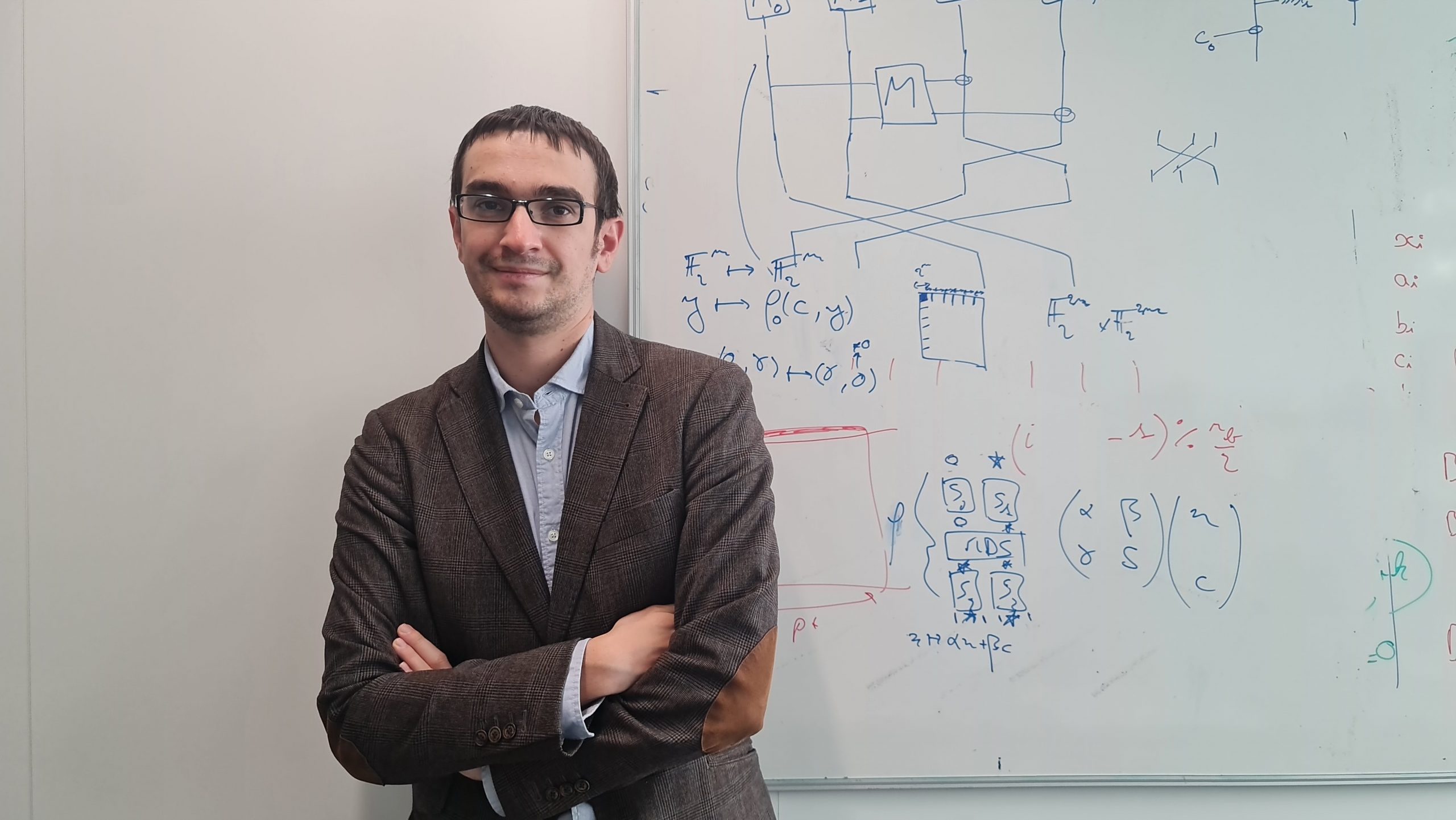

In 2016, researchers from the Weizmann Institute of Science in Rehovot, Israel, managed to take control of Philips smart bulbs and demonstrated the potentially catastrophic consequences of such a hack. They describe a chain reaction among devices connected to a ZigBee network once their number reaches a critical mass in a city such as Paris: an attack that starts with a single lightbulb and spreads throughout the city within minutes, making it possible to turn all the city lights on or off, or to exploit them to launch a massive distributed denial-of-service (DDoS) attack. “We cannot afford to have connected objects, no matter how trivial they are, that are not secure,” states Léo Perrin, a research fellow in symmetric cryptography within the COSMIQ team at Inria (the French National Institute for Research in Digital Science and Technology), and co-author of several lightweight cryptography algorithms. This field of research is committed to building low-cost cryptographic systems that are optimized, in particular, for the small devices of the Internet of Things (IoT). He explains.

What issues does the generalization of connected objects raise in terms of cybersecurity?

It raises the twofold issue of privacy and security. Firstly, a connected object collects personal data that can be sent to the manufacturer’s servers. This can, by design, infringe on personal privacy. Secondly, even if the object has been designed to protect confidentiality, the data are still collected and stored. They flow through an internal network, within a house for example, where various objects can communicate with each other. These objects must be secure so that they cannot be hacked and the data cannot leak.


The need is not immediately obvious when thinking of a connected hairbrush, but securing these types of devices is crucial because they can become gateways into the network to which they are connected and be used to carry out more elaborate attacks, as demonstrated by the Israeli research team.

Why are traditional cryptographic algorithms unsuitable for the small devices of the IoT? What is meant by “platform constraint”?

Current cryptographic standards were written for computers. The algorithms are optimized for the resources available on a PC, or even a smartphone. Connected objects, however, have limited resources, energy and power in particular, and implementing traditional algorithms on them can hurt their performance and raise their production and running costs, because these algorithms require additional hardware resources and consume more electricity.

Performance constraints are essential in cryptography: it is not enough to build algorithms that secure communications, it is also necessary to ensure they are used in practice, and therefore to reduce their costs as much as possible. For an RFID tag, for example, this means reducing the cost of the electronic circuit by reducing the number of logic gates. For a microcontroller, it means reducing the number of instructions needed for an encryption or authentication operation.

But a microcontroller is different from a processor in a computer. Many operations that seem obvious (including operations that are essential in cryptography) are either not available or cost more on a microcontroller. Therefore, not only are the performance constraints stronger on Internet of Things devices, but the cost model for operations is itself different.

In addition, these objects are more likely to be subjected to physical attacks. To hack a badge equipped with an RFID chip, the attacker may attempt to read the cryptographic key directly from the electronic circuit or, for example, analyze the variations in power consumption. The latter is what is called a “side-channel attack”. Ideally, lightweight cryptography will aim to develop algorithms that are less vulnerable to this kind of attack or, at least, that enable more secure physical implementations.
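To make the idea of analyzing power consumption concrete, here is a minimal sketch of a correlation-style attack on a toy device. It assumes a deliberately simplified, noiseless leakage model (the power drawn is taken to be the Hamming weight of the plaintext byte XORed with the key byte). The function names and the leakage model are illustrative assumptions, not a description of any real chip or of the attack mentioned above.

```python
def hamming_weight(value: int) -> int:
    """Number of bits set to 1 in value."""
    return bin(value).count("1")


def simulate_power_trace(secret_key_byte: int) -> list[int]:
    """Hypothetical leakage: one noiseless 'power sample' per plaintext byte.

    Assumption: power is proportional to the Hamming weight of
    plaintext XOR key, a common textbook simplification.
    """
    return [hamming_weight(p ^ secret_key_byte) for p in range(256)]


def pearson(xs: list[int], ys: list[int]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def recover_key_byte(trace: list[int]) -> int:
    """Return the key guess whose predicted leakage best matches the trace."""
    def score(guess: int) -> float:
        predicted = [hamming_weight(p ^ guess) for p in range(256)]
        return pearson(predicted, trace)

    return max(range(256), key=score)


# The attacker only sees the trace, never the key itself.
trace = simulate_power_trace(0x3C)
recovered = recover_key_byte(trace)
```

In this toy setting the correct guess is the only one that correlates perfectly with the measurements. Real traces are noisy and real ciphers leak through non-linear intermediate values, so practical attacks need many more measurements, but the principle is the same.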

Precisely, how do you define lightweight cryptography?

This is one of the great difficulties of this field (including for NIST): cryptography constantly seeks “lightness”, that is to say providing maximum security for minimum cost. During my thesis, I proposed defining lightweight cryptography as cryptography that is explicitly dedicated to particular niches. For me, what ultimately defines it is the lightness of the platforms on which it must be implemented. For example, an RFID tag has “lighter” computing power. What’s more, lightweight cryptography allows compromises that would not be acceptable in “normal” cryptography. One can, for example, consider that attackers have access to less encrypted data (a quantity crucial to their attack) when they target an RFID tag rather than a PC, because the tag can encrypt much less text in a given time. Lightweight cryptography does not offer a lower level of security, but it takes these limits into account when building the algorithms. For this to be possible, I am convinced that we must concentrate on certain niches and develop specialized algorithms that can meet the specific constraints of these niches.
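The data-availability argument can be checked with a quick back-of-the-envelope calculation. The sketch below uses purely hypothetical figures (an attack needing 2^32 blocks of 8 bytes, a tag encrypting about 1 kB per second, a PC encrypting about 1 GB per second). None of these numbers come from the interview; they are only there to show the order of magnitude.

```python
def seconds_to_collect(log2_blocks: int, block_bytes: int,
                       bytes_per_second: float) -> float:
    """Time a device needs to encrypt 2**log2_blocks blocks under one key."""
    total_bytes = (2 ** log2_blocks) * block_bytes
    return total_bytes / bytes_per_second


# Hypothetical figures, chosen only for illustration.
DATA_NEEDED_LOG2 = 32            # attack assumed to need 2^32 ciphertext blocks
BLOCK_BYTES = 8                  # 64-bit blocks
TAG_RATE = 1_000.0               # assumed RFID tag throughput, bytes per second
PC_RATE = 1_000_000_000.0        # assumed PC throughput, bytes per second

tag_seconds = seconds_to_collect(DATA_NEEDED_LOG2, BLOCK_BYTES, TAG_RATE)
pc_seconds = seconds_to_collect(DATA_NEEDED_LOG2, BLOCK_BYTES, PC_RATE)

print(f"tag: {tag_seconds / 86_400:.0f} days, PC: {pc_seconds:.0f} seconds")
```

Under these assumptions the tag would need more than a year of continuous encryption to produce the data, while the PC would need about half a minute, which is why a design aimed at tags can reasonably assume the attacker sees far less ciphertext.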

What are they? Did you identify use cases during your thesis or your search within the COSMIQ team?

The idea behind this notion of niches is to say that it is not possible to have a single algorithm that is excellent everywhere. Yet that is what is being sought in lightweight cryptography, in order to lower the barriers to the adoption of encryption in constrained devices. I therefore think it is necessary to identify use cases where we cannot use AES for performance reasons and to build efficient algorithms for these cases, which implies having several. The exact specification of these niches has not yet been decided, but RFID tags and microcontrollers each represent one.

Could you describe the work carried out by the COSMIQ team in the field of lightweight cryptography?

COSMIQ’s research work mainly concerns the design and security analysis of cryptographic primitives, which are elementary mathematical objects, such as block ciphers, that carry out basic functions. For my part, I am working on symmetric primitives, but some colleagues are working on public-key cryptography, post-quantum in particular, and others on quantum cryptography. Lightweight cryptography has kept us very busy recently because we are contributing to NIST’s standardization work in this field. We have submitted several algorithms, including Sparkle, one of the competition’s ten finalists, and have performed a lot of cryptanalysis by breaking algorithms (see for example our attack on Gimli and the one on Spook, co-designed by a member of my team).

What approach was selected by NIST?

NIST “officially” launched a reflection process on the standardization of lightweight cryptographic algorithms in 2015 and attempted to obtain profiles (a description of the different niches) from industry. This did not work out: manufacturers were reluctant to share figures (relating to their products’ needs in terms of electricity consumption or the expected level of security, for example) for reasons of trade secrecy. NIST then initiated a standardization process in 2018, with a call for submissions including two specifications corresponding to two profiles: hardware platforms (electronic circuits such as RFID tags) and microcontroller-type software platforms. They received over fifty submissions. Today only ten candidates are left, including both generalist algorithms (Ascon, for example) and specialist algorithms (like Grain-128AEAD). Sparkle, the algorithm I co-designed, is based on another algorithm, SPARX, conceived during my thesis in Luxembourg. It is optimized for microcontrollers, meaning the operations it uses are ones that microcontrollers can perform very well.
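As an illustration of operations that microcontrollers handle well, Sparkle belongs to the add-rotate-XOR (ARX) family, which relies only on 32-bit modular additions, bit rotations and XORs. The sketch below is a generic toy ARX round, not Sparkle's actual round function: the rotation amounts (7 and 13), the constant and the function names are arbitrary choices made for this example.

```python
MASK32 = 0xFFFFFFFF  # keep every value within 32 bits, like a machine word


def rotr32(word: int, amount: int) -> int:
    """Rotate a 32-bit word to the right by `amount` bits."""
    amount %= 32
    return ((word >> amount) | (word << (32 - amount))) & MASK32


def arx_round(x: int, y: int, constant: int) -> tuple[int, int]:
    """One toy ARX step on a pair of 32-bit words (illustrative only)."""
    x = (x + rotr32(y, 7)) & MASK32   # Add (modulo 2^32)
    y ^= rotr32(x, 13)                # Rotate, then XOR
    x ^= constant                     # XOR a round constant
    return x, y


def arx_round_inverse(x: int, y: int, constant: int) -> tuple[int, int]:
    """Undo arx_round by reversing each step in the opposite order."""
    x ^= constant
    y ^= rotr32(x, 13)
    x = (x - rotr32(y, 7)) & MASK32
    return x, y


# Round-trip check with arbitrary example values.
state = (0x01234567, 0x89ABCDEF)
mixed = arx_round(*state, constant=0xB7E15162)
restored = arx_round_inverse(*mixed, constant=0xB7E15162)
```

Each step is invertible and needs no lookup table, which keeps the memory footprint small and avoids table accesses that can leak information through timing.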

The choice between a generalist algorithm and specialist algorithms is a very difficult one as both approaches have good arguments. The risk, in opting for a single algorithm, would be that of having a second AES, that is to say an algorithm that is good all round (sometimes even very good) but that will not be excellent in the most constrained cases. The final decision will be given by NIST at the end of the year.
