"Affective computing is a discipline that seeks to improve how we interact with digital tools. It specifically relies on recognising users’ emotions."

Introduction

Inspired by Isaac Asimov and Robert Silverberg’s novel “The Positronic Man,” the film “Bicentennial Man” tells the story of Andrew, a robot played by Robin Williams who is gifted with a unique mind. Over time, the robot develops more and more human abilities. The first transformation it wishes for is a more expressive face, so that it can communicate the emotions it feels to humans. This work of fiction illustrates the essential role of emotions in communication, whether for a biological or a mechanical being. In reality, even though we are a long way from turning our computers and smartphones, like Andrew, into machines that have emotions, we can at least ask how emotions could shape our interactions with these devices.

As a telecom operator, Orange aims to offer an optimal digital communication experience. However, while digital communication has many benefits, it has the disadvantage of cutting us off from some of the ways in which we interact, in particular the expression and perception of emotions. Yet emotions are essential for adapting to the person we are communicating with and adjusting our speech or message, whether in personal or professional communications or in customer service.

In recent years, research into the link between emotions and new technologies has grown considerably, to the point of becoming a fully fledged discipline: affective computing. Thanks to its position in digital communications, Orange is well placed to contribute to this field, where many unknowns remain. Today, artificial intelligence offers promising new ways to recognise our emotions. What are they? What challenges need to be addressed? And what are their limits?

I – Spotlight on the science of emotions.

What is an emotion?

The word emotion comes from the Latin “emovere,” where the prefix “e” means “out of” and “movere” means “to move.” This etymology highlights the strong link between emotions and the body, and their tendency to be externalised [1]: we blush when we are ashamed and our hands tremble when we are scared. Emotions are part of everyday life; we have all experienced them. However, they are tricky to define.

To date, there is no consensus on a definition of emotion. Like tastes and colours, which proverbially are not up for debate, emotions are by nature individual experiences that are difficult to generalise. Nevertheless, emotion is essential to understanding how humans work. Research on the subject has been prolific over the past two centuries, and researchers have put forward a large number of definitions and theories to explain emotions. The topic spans many disciplines, such as cognitive psychology, social psychology, ethology (the study of animal behaviour) and, more recently, neuroscience, each of which has contributed its own theories, models and empirical findings.

In such a complex landscape, the choice of a theoretical model is crucial for structuring work and knowledge. The multi-component approach is one of the most widely used by researchers. It rests on the following principle: emotion is an abstract concept whose existence can only be inferred from perceptible, concrete evidence [2]. What is this evidence?

This evidence falls into five categories, or facets, of emotion, which we will present using the following example: “A walker is strolling quietly through a forest and crosses paths with a bear.”

- The psycho-physiological response: these are bodily manifestations such as heart rate, sweating and the secretion of substances such as adrenaline, serotonin or dopamine. On crossing the bear’s path, the walker’s body reacts: they may start trembling or sweat much more than usual.

- Motor expression: this facet includes behavioural reactions such as facial expressions, changes in voice or gestures. It is easy to imagine the walker’s facial muscles tensing up to express fear.

- Preparation for action: this is the mobilisation of resources to take action that is appropriate for the situation. In the case of our walker, the situation will cause a desire to run away or hide, and the muscles of their body will prepare to carry out this action.

- Subjective feeling: this corresponds to becoming aware of the changes in your body and of the experience you are having. If the walker were asked how they felt, they would probably say that they were in a negative situation and felt bad.

- Cognitive assessment: this is about becoming aware of the current context and what it means. In our example of a stroll in the forest, the walker knows that a bear is a wild animal and therefore potentially dangerous.

Taken together, changes in these five facets make up what we call an emotion.

What are emotions for?

According to Darwin, the father of the theory of evolution, emotions have an adaptive function: they allow us to react to a situation. In other words, the purpose of an emotion is to prepare us to take the most appropriate action to achieve our goals or avoid danger. In our example, all the walker’s manifestations of fear serve to initiate running away or hiding: higher adrenaline levels, a quickened pulse and muscle contraction all contribute to the success of that action. Of course, these facets manifest themselves differently depending on each individual’s experience and on the context: where an unarmed walker sees a bear as a threat, a hunter will see an opportunity. Cognitive assessment, the last item on our list, is therefore an essential element in defining emotion, and it shows the strong link between emotion and cognition.

Facial expressions are particularly illuminating from an evolutionary perspective. They play an essential role in communication, informing others about an individual’s internal state and about potential threats. For instance, if you come across a person with a terrified face, your senses will switch on and you will start looking for the cause of that fear yourself. Thus, for humans and, by extension, for all social animals, emotions increase the chances of survival.

How can we discern one emotion from another?

Scientists have shown no shortage of imagination in proposing categories of emotions. Each of the facets above can be used to distinguish between emotions, and facial expressions offer a particularly interesting clue. According to the researcher Paul Ekman [3], who contributed to the science of emotions by studying faces, seven emotions can be distinguished from facial expressions: happiness, fear, anger, surprise, disgust, sadness and neutrality. Ekman found these expressions across all cultures, which suggests that they are innate in humans.

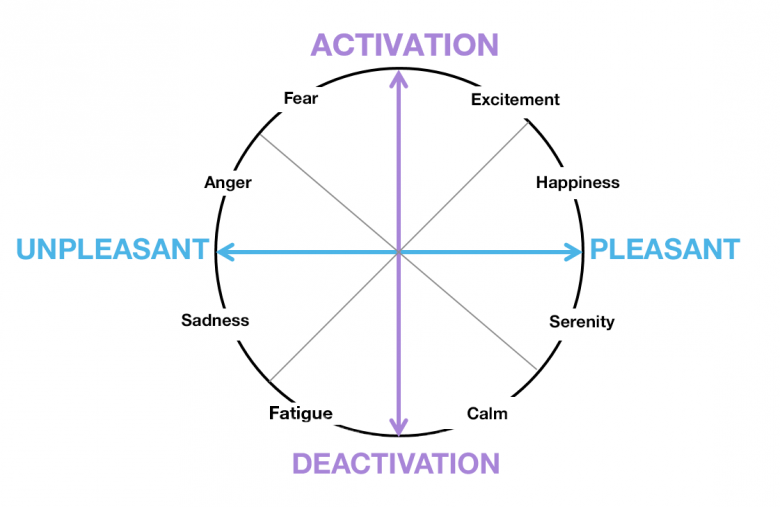

However, facial expressions are only partly driven by emotions. Some researchers therefore prefer to distinguish between emotions through the subjective feeling they produce, and many characterise emotions in a multi-dimensional space. Russell [4], for example, proposes two dimensions to represent a wide range of emotions:

- Valence, which corresponds to the positive or negative tone of the emotion experienced. For example, happiness has a positive valence and sadness a negative valence.

- Arousal, which corresponds to the level of energy the emotion mobilises in our body. Relaxation involves little energy, so it has low arousal. By contrast, euphoria has high arousal. Yet both emotions have a positive valence.

Figure 1 – Russell’s circumplex
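To make the two dimensions concrete, here is a minimal Python sketch that places a few emotions as points in the valence/arousal plane and reads off their quadrant. The coordinates in `EMOTIONS` and the helper names `quadrant` and `nearest_emotion` are hypothetical, chosen for illustration on a -1 to 1 scale; they are not values taken from Russell’s work.

```python
import math

# Hypothetical (valence, arousal) coordinates on a -1..1 scale, for illustration only.
EMOTIONS = {
    "euphoria": (0.8, 0.8),
    "relaxation": (0.7, -0.6),
    "sadness": (-0.7, -0.5),
    "fear": (-0.7, 0.7),
}


def quadrant(valence, arousal):
    """Name the circumplex quadrant that a (valence, arousal) point falls into."""
    v = "positive" if valence >= 0 else "negative"
    a = "high" if arousal >= 0 else "low"
    return f"{v} valence / {a} arousal"


def nearest_emotion(valence, arousal):
    """Return the labelled emotion closest to the point (Euclidean distance)."""
    return min(EMOTIONS, key=lambda name: math.dist(EMOTIONS[name], (valence, arousal)))


for name, (v, a) in EMOTIONS.items():
    print(f"{name}: {quadrant(v, a)}")
```

For example, `nearest_emotion(0.5, -0.4)` returns `"relaxation"`. Treating emotions as points in a continuous space, rather than as separate categories, is what lets dimensional models represent intermediate states such as mild contentment.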

Between Ekman’s faces and Russell’s dimensions, there is a wide variety of more or less complex models for representing emotions. As no model is perfect, one must be chosen and adapted to the context.

II – Affective computing, at the crossroads between humans and machines.

How can machines be given emotions?

Now that we have clarified the notion of emotion, the question arises of how emotions relate to new technologies. Science fiction has often imagined the future of machines and the human skills they might acquire. Like the Bicentennial Man, we picture robots with affective skills that can empathise with humans or express their own feelings. For now, however, it is hard to imagine how emotions could fit into our technological environment, let alone how users could benefit from them. How could a machine that is based on principles far removed from living biology, and that looks nothing like a human being, feel and express emotions?

According to Rosalind Picard [5], founder of the Affective Computing research group at MIT, emotions do have a place in interactions between machines and users. They are even one of the missing links for computing to become truly smart and adapt to humans. Emotions, and our understanding of them, help us to adapt. So why shouldn’t the same be true for machines?

Affective computing is a discipline that aims to integrate emotions into how we use new technologies. It involves studying and developing ways to recognise, interpret and model human emotions in our use of and interactions with technology. The approach is highly multidisciplinary, involving psychology, cognitive science, physiology and computer science.

This multidisciplinarity reflects the multi-dimensional nature of emotional processes. There are many ways of detecting emotions, and they have multiplied in recent years as the amount of available user data has grown. Current advances in artificial intelligence also make it possible to process large amounts of data automatically, which accelerates progress in this field. In his review of emotion detection methods, Poria [6] estimates that 90% of research on emotion detection focuses on three main axes:

- Visual, with the recognition of emotions through facial expressions and gestures.

- Audio, with the analysis of the intonation in a person’s voice.

- Text, with the analysis of the words used in a text to extract the emotions expressed.
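As an illustration of the text axis only, the simplest approach counts words from an emotion lexicon. The sketch below assumes a tiny hypothetical lexicon (`LEXICON`) and a hypothetical helper `text_emotion`; real systems rely on much larger resources or trained models. The second call shows why word matching alone is fragile: an ironic “Great” is read as happiness.

```python
import re
from collections import Counter

# Hypothetical toy lexicon mapping words to emotion categories.
LEXICON = {
    "happy": "happiness", "great": "happiness", "thanks": "happiness",
    "angry": "anger", "furious": "anger", "unacceptable": "anger",
    "afraid": "fear", "worried": "fear",
    "sad": "sadness", "disappointed": "sadness",
}


def text_emotion(text):
    """Return the emotion whose lexicon words occur most often, or "neutral"."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(LEXICON[w] for w in words if w in LEXICON)
    if not counts:
        return "neutral"
    return counts.most_common(1)[0][0]


print(text_emotion("This is unacceptable, I am furious!"))  # anger
print(text_emotion("Great, my phone broke again."))         # happiness (irony missed)
print(text_emotion("My order arrived on Tuesday."))         # neutral
```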

None of these approaches is perfect; ideally, these different sources of information should be combined to increase the chances of recognising the right emotion. Even so, detecting a person’s emotion with certainty remains a hurdle, because current techniques offer only a partial view of the various facets of an emotion. Just as it is hard to know exactly what our loved ones are feeling, machines can only infer an emotional state from outward signs. Modesty is therefore called for with regard to the ability to decode emotions automatically. The research prospects are vast, and numerous methodological and technical obstacles remain to be overcome. Of course, it is not possible to read users’ thoughts to extract their emotions, and would this even be desirable?
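One common way of combining sources, often called late fusion, is to let each modality produce its own probability distribution over emotions and then take a weighted average. This minimal sketch assumes that visual, audio and text models already output such distributions; the label set, modality names, weights and scores are all hypothetical.

```python
LABELS = ("happiness", "anger", "sadness", "neutral")


def late_fusion(predictions, weights):
    """Weighted average of per-modality probability distributions.

    predictions: {modality: {label: probability}}
    weights: {modality: non-negative weight}
    """
    total_weight = sum(weights[m] for m in predictions)
    fused = {label: 0.0 for label in LABELS}
    for modality, probs in predictions.items():
        for label in LABELS:
            fused[label] += weights[modality] * probs.get(label, 0.0) / total_weight
    return fused


# Hypothetical outputs of three unimodal models for the same moment.
predictions = {
    "visual": {"happiness": 0.6, "neutral": 0.4},
    "audio": {"anger": 0.5, "neutral": 0.5},
    "text": {"happiness": 0.7, "sadness": 0.3},
}
weights = {"visual": 1.0, "audio": 1.0, "text": 1.0}

fused = late_fusion(predictions, weights)
print(max(fused, key=fused.get))  # happiness
```

Here the visual and text scores outweigh the audio model’s hint of anger. The weights could reflect how reliable each modality is in a given context, for example lowering the weight of text when irony is likely.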

What are the implications for Orange?

In the Orange ecosystem, a world of communications driven by digital technology, integrating affective computing is a key issue, and the Covid-19 crisis has only made it more pressing. Communicating by telephone, chat or email cuts us off from a large part of how we communicate and express our emotions. As remote working becomes more common and personal and business relationships are increasingly conducted at a distance, making ourselves understood has become a major issue that requires extra effort. When communicating remotely, maintaining a good level of mutual understanding means compensating for the loss of the direct information that is usually available in face-to-face communication. This adaptation entails additional mental load and fatigue, which can lead to frustration between the people involved. Emotions play a central role in adapting to others, and they are expressed largely through non-verbal information. We therefore need to reintegrate sensitivity and empathy into our modes of communication in order to reduce the digital distance between humans (which is partly what emojis allow).

Customer service is a particularly interesting area for this line of research, as communication there increasingly takes place through asynchronous digital tools such as chat or email. Customers have very high expectations: they want the most satisfying experience possible and to feel reassured and understood by advisers. Incorporating emotions into advisers’ tools therefore seems a promising way to help them meet these expectations. In automated after-sales service, the same question applies to chatbots, which could be better adapted to their interactions with customers. Finally, the automatic processing of large volumes of customer messages would benefit from an interpretation of their emotions, to better understand customers’ needs.

In this context, in the field of voice emotion detection, the Orange group has been working with the startup Empath [7], whose technology distinguishes four emotions (joy, calm, anger and sorrow) from vocal attributes.

III – Towards new horizons with human-machine interactions.

Smartphones, the new promised land.

Although most of the approaches explored by researchers focus on visual, audio or text signals, developing other ways to collect emotional information is a promising prospect. Capturing images or voice during customer/adviser interactions raises ethical and acceptability issues. Moreover, relying on text alone carries a high risk of error, as it is difficult to distinguish a literal expression from a figurative one (irony, humour and so on) without any context.

At Orange Labs, we are conducting studies to infer emotions from user behaviour using smartphone data, which offers interesting prospects for data collection. Not only are smartphones very widely used, they also have a wide range of sensors that make it possible to record user reactions. When a user interacts with a chat application, for instance, their typing speed on the keyboard can be recorded. Smartphones also contain inertial sensors, which capture how the device is moved. These sensors are used in fitness apps to determine which physical activity the user is doing: walking, running, cycling, etc. Since motor expression is one of the five facets of emotion, we hypothesise that emotions can be detected through the movements resulting from interactions with a smartphone.
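The two kinds of signals mentioned above can be turned into simple numerical features. The sketch below assumes keystroke timestamps in milliseconds and accelerometer samples as (x, y, z) triples in m/s²; the function names and the choice of features are illustrative assumptions, not the features used in the Orange studies.

```python
import math
import statistics


def typing_features(key_times_ms):
    """Typing speed and rhythm from keystroke timestamps in milliseconds.

    Needs at least three timestamps so that the interval standard deviation is defined.
    """
    intervals = [b - a for a, b in zip(key_times_ms, key_times_ms[1:])]
    duration_s = (key_times_ms[-1] - key_times_ms[0]) / 1000
    return {
        "keys_per_second": len(intervals) / duration_s,
        "mean_interval_ms": statistics.mean(intervals),
        "interval_stdev_ms": statistics.stdev(intervals),
    }


def motion_intensity(accel_xyz):
    """Mean magnitude of accelerometer samples (m/s^2, gravity included)."""
    return statistics.mean(math.sqrt(x * x + y * y + z * z) for x, y, z in accel_xyz)


print(typing_features([0, 200, 400, 700, 900]))
print(motion_intensity([(0.0, 0.0, 9.81), (3.0, 4.0, 0.0)]))
```

The standard deviation of inter-key intervals captures hesitations and bursts, which a single average speed would hide.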

Studying emotions in the lab.

As the approach is new, the first step is to create a dataset of smartphone interactions (accelerometer, gyroscope, keystrokes) associated with emotions. This essential step has to be carried out in a laboratory, with an experimental protocol based on emotion induction. Inducing emotions in the lab is a particularly complex task, requiring videos, images or games calibrated to cause measurable positive, neutral or negative reactions in participants.

The experiments we have set up combine three of the five facets cited at the beginning of this article: motor expression, subjective feeling and psycho-physiological data. Motor expression is the variable we are trying to measure from users’ interactions with smartphones. Subjective feeling and psycho-physiological data, in turn, make it possible to check or adjust the emotions generated by the experimental protocol. Indeed, reactions to a video of a cute kitten or of a skateboarding accident differ from one individual to another. It is therefore important to assess users’ feelings both through appropriate questionnaires and by measuring their physiological responses with specific tools.
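The check described above can be sketched as follows: each trial records the emotion the protocol intended to induce and the participant’s questionnaire answer, and trials where the two disagree are set aside for review. The 1 to 9 valence rating, the thresholds and the names `Trial`, `reported_category` and `check_induction` are all hypothetical; physiological measures could be compared in the same way.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    participant: str
    stimulus: str
    induced: str      # category the protocol intended: "positive", "neutral" or "negative"
    self_report: int  # hypothetical 1-9 valence rating from the questionnaire


def reported_category(rating):
    """Map a 1-9 valence rating to a coarse category (thresholds are assumptions)."""
    if rating <= 3:
        return "negative"
    if rating >= 7:
        return "positive"
    return "neutral"


def check_induction(trials):
    """Split trials into those whose self-report matches the intended emotion and the rest."""
    confirmed, mismatched = [], []
    for trial in trials:
        if reported_category(trial.self_report) == trial.induced:
            confirmed.append(trial)
        else:
            mismatched.append(trial)
    return confirmed, mismatched


trials = [
    Trial("P1", "kitten video", "positive", 8),
    Trial("P2", "kitten video", "positive", 5),
    Trial("P1", "skateboard fall video", "negative", 2),
]
confirmed, mismatched = check_induction(trials)
print(len(confirmed), [t.participant for t in mismatched])  # 2 ['P2']
```

Mismatched trials need not be discarded: relabelling them with the reported emotion is another option, since the self-report may be closer to what the participant actually felt.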

To create this dataset, we developed a dedicated Android app with three functions: provoking emotions, asking questions about the emotions felt and recording reactions with sensors (the inertial sensors mentioned above and keystrokes). The app takes the form of a chatbot that acts as the experimenter and interacts with users by suggesting simple, engaging tasks. At the same time, we record physiological reactions using dedicated hardware.
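Because the app both triggers the stimuli and records the sensors, each sensor sample can be labelled with the emotion-induction episode during which it was recorded. A minimal sketch, assuming the app logs each episode as a (start, end, label) tuple in seconds and that episodes do not overlap; `label_samples` is a hypothetical helper.

```python
from bisect import bisect_right


def label_samples(sample_times, episodes):
    """Label each sensor timestamp with the induction episode it falls in.

    episodes: list of (start, end, label) tuples, sorted by start and non-overlapping;
    each episode covers start <= t < end. Samples outside every episode get None.
    """
    starts = [start for start, _, _ in episodes]
    labels = []
    for t in sample_times:
        i = bisect_right(starts, t) - 1  # last episode starting at or before t
        if i >= 0 and t < episodes[i][1]:
            labels.append(episodes[i][2])
        else:
            labels.append(None)
    return labels


# Hypothetical log: a neutral video, a pause for the questionnaire, then a positive video.
episodes = [(0.0, 10.0, "neutral"), (15.0, 30.0, "positive")]
print(label_samples([1.0, 12.0, 15.0, 29.9, 30.0], episodes))
# ['neutral', None, 'positive', 'positive', None]
```

Samples recorded during questionnaires or pauses are left unlabelled so that they do not contaminate the training data.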

Artificial intelligence aids research.

The protocol we established allowed us to collect data of different kinds from which to infer emotions. How can such diverse data be analysed coherently? As this field of research is new, there are no established methods for processing such data. However, as discussed earlier, the data collected are similar to those used by fitness apps to recognise physical activity, so we drew inspiration from work in that field. There, artificial intelligence methods such as machine learning have already proven their worth, because they allow complex data to be analysed automatically. Given suitably preprocessed data, machine learning models can learn what distinguishes walking from running, for example. We apply the same method, using emotions rather than physical activities as labels.
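The activity-recognition recipe described above can be sketched in three steps: cut the sensor signal into overlapping windows, summarise each window with statistics, then train a classifier on labelled windows. This sketch uses a nearest-centroid classifier as a deliberately simple stand-in for the machine learning models mentioned, with synthetic accelerometer-like data and hypothetical labels `"calm"` and `"agitated"`.

```python
import math
import statistics
from collections import defaultdict


def windows(signal, size, step):
    """Split a 1-D signal into overlapping fixed-size windows."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


def features(window):
    """Summary statistics of the kind often used in activity recognition."""
    return (statistics.mean(window), statistics.pstdev(window), max(window) - min(window))


class NearestCentroid:
    """Minimal classifier: assign each feature vector to the closest class mean."""

    def fit(self, X, y):
        groups = defaultdict(list)
        for x, label in zip(X, y):
            groups[label].append(x)
        self.centroids = {
            label: tuple(statistics.mean(col) for col in zip(*rows))
            for label, rows in groups.items()
        }
        return self

    def predict(self, X):
        return [min(self.centroids, key=lambda c: math.dist(self.centroids[c], x)) for x in X]


def synthetic(amplitude, n):
    """Synthetic accelerometer-like trace: gravity plus a regular oscillation."""
    return [9.81 + amplitude * ((i % 4) - 1.5) for i in range(n)]


X, y = [], []
for label, amplitude in (("calm", 0.1), ("agitated", 2.0)):
    for w in windows(synthetic(amplitude, 40), size=8, step=4):
        X.append(features(w))
        y.append(label)

model = NearestCentroid().fit(X, y)
test_windows = windows(synthetic(1.8, 16), 8, 4) + windows(synthetic(0.2, 16), 8, 4)
print(model.predict([features(w) for w in test_windows]))
```

With real data, the features would come from several sensor axes and the classifier would be a stronger model, but the pipeline of windowing, feature extraction and supervised training stays the same.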

The next question is how reliable the models are. To find out, they must be validated on separate subsets of the data collected from the same sample, the objective being to determine how well the models classify data they were not trained on. The results of a first experiment were presented at the International Conference on Human Interaction and Emerging Technologies (IHIET 2020) [8]. This first experiment revealed different trends in the signals depending on the emotion induced. Nevertheless, new methods of inducing emotion still need to be explored in order to strengthen these effects.
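One way to validate models on data they have not seen is to hold out one participant at a time, train on the others and measure accuracy on the held-out person. This sketch assumes samples stored as (participant, feature, label) tuples with a single scalar feature; `leave_one_participant_out`, `majority_baseline` and `threshold_model` are hypothetical names, and comparing against a majority-class baseline is a common sanity check, not necessarily the procedure used in [8].

```python
import statistics
from collections import Counter


def leave_one_participant_out(samples, train_and_predict):
    """Accuracy on each participant when the model is trained on all the others.

    samples: list of (participant, feature, label) tuples.
    train_and_predict(train, test_features) -> list of predicted labels.
    """
    participants = sorted({p for p, _, _ in samples})
    scores = {}
    for held_out in participants:
        train = [(x, y) for p, x, y in samples if p != held_out]
        test = [(x, y) for p, x, y in samples if p == held_out]
        predictions = train_and_predict(train, [x for x, _ in test])
        correct = sum(pred == y for pred, (_, y) in zip(predictions, test))
        scores[held_out] = correct / len(test)
    return scores


def majority_baseline(train, test_features):
    """Reference model: always predict the most frequent training label."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return [label] * len(test_features)


def threshold_model(train, test_features):
    """Toy two-class model: split at the midpoint between the two class means."""
    means = {label: statistics.mean(x for x, y in train if y == label)
             for label in {y for _, y in train}}
    low, high = sorted(means, key=means.get)
    cut = (means[low] + means[high]) / 2
    return [high if x > cut else low for x in test_features]


# Hypothetical data: one movement feature per trial, two trials per participant.
samples = [
    ("P1", 0.2, "calm"), ("P1", 2.1, "agitated"),
    ("P2", 0.3, "calm"), ("P2", 1.9, "agitated"),
    ("P3", 0.1, "calm"), ("P3", 2.4, "agitated"),
]
print(leave_one_participant_out(samples, majority_baseline))  # {'P1': 0.5, 'P2': 0.5, 'P3': 0.5}
print(leave_one_participant_out(samples, threshold_model))    # {'P1': 1.0, 'P2': 1.0, 'P3': 1.0}
```

Splitting by participant, rather than by random samples, prevents a model from scoring well simply because it has already seen the same person’s way of holding the phone.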

Conclusion: the three major challenges of affective computing.

The automatic detection of emotions is a three-fold challenge for scientific research. First, it is a theoretical challenge, with significant reflection needed to determine the most appropriate emotional models, depending on the context. Second, a methodological challenge must be met for the creation of experimental protocols that make it possible to collect data relevant enough to provide a solid basis, either in the laboratory or in real situations. Lastly, there remains a technical challenge posed by the emergence of artificial intelligence and the appropriate application of its models to data processing.

Several research projects at Orange are attempting to address these three challenges by exploring a variety of data sources: facial expressions, voice parameters, self-reports and smartphone interactions. Our work opens up important methodological perspectives for exploring this new area. New experiments are therefore under way, which will make it possible to validate innovative protocols for detecting emotions. It will then be important to increase their reliability and adapt them to customer relationship contexts in order to improve the quality of interactions.

Bibliography