
Algorithmic transparency is an essential component of ethical and responsible artificial intelligence. The concept emerged in response to the growing use of AI systems in sensitive areas and to their impact on individuals' lives and on society as a whole.

While AI can be a powerful tool for fighting inequality, there are many cases in which machine learning models and datasets reproduce, or even amplify, bias and discrimination. In the United States, Amazon's recruitment tool and the COMPAS criminal-justice software are well-known examples.

To pinpoint the origin of errors, correct them, and detect risks while an AI system is being developed, its algorithms must be monitored and tested throughout their entire life cycle through a systematic, documented process. In other words, they must be auditable.

The two parts of auditability

Complementary to the notion of explainability, auditability describes the ability to evaluate algorithms, models, and datasets, and to analyse the operation, results, and effects (including unexpected ones) of AI systems. This notion has two parts.

The technical part consists of measuring a system's performance against several criteria (reliability, accuracy of results, etc.). The ethical part consists of assessing its individual and collective impacts, and checking that it does not risk breaching principles such as privacy or equality.

For example, whether a machine learning algorithm is non-discriminatory can be tested by feeding it fictitious input data or user profiles.
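One common way to run such a test is a counterfactual probe: build fictitious profiles, change only one sensitive attribute, and check whether the output moves. The sketch below assumes a hypothetical `Profile` type and a deliberately biased `toy_model` standing in for the system under audit; neither comes from the article, and a real audit would call the actual model instead.

```python
from dataclasses import dataclass, replace
from typing import Callable, Sequence


@dataclass(frozen=True)
class Profile:
    # Hypothetical fictitious applicant profile; fields are illustrative only.
    years_experience: int
    degree: str
    gender: str


def toy_model(p: Profile) -> float:
    """Hypothetical stand-in for the audited system, with a bias injected on purpose."""
    score = 0.1 * p.years_experience + (0.3 if p.degree == "MSc" else 0.0)
    if p.gender == "female":
        score -= 0.2  # the bias the probe should detect
    return score


def counterfactual_gaps(
    model: Callable[[Profile], float],
    profiles: Sequence[Profile],
    attribute: str,
    values: Sequence[str],
) -> list[float]:
    """For each profile, vary only `attribute` and record the spread of model outputs."""
    gaps = []
    for p in profiles:
        outputs = [model(replace(p, **{attribute: v})) for v in values]
        gaps.append(max(outputs) - min(outputs))
    return gaps


# Six fictitious profiles that differ in experience and degree.
profiles = [Profile(y, d, "male") for y in (1, 5, 10) for d in ("BSc", "MSc")]
gaps = counterfactual_gaps(toy_model, profiles, "gender", ["male", "female"])
flagged = [g for g in gaps if g > 1e-9]  # tolerance absorbs float rounding
print(f"{len(flagged)}/{len(gaps)} profiles change outcome when only gender changes")
```

Any non-zero gap means the sensitive attribute alone changed the result, which is exactly the kind of finding the ethical part of the audit is looking for.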

External and internal audits

Although many studies stress the importance of external audits carried out by third parties after a model is deployed, the method proposed by researchers from Google AI and the Partnership on AI in the article "Closing the AI Accountability Gap" is notable because it focuses on internal auditing, starting before development and continuing throughout the design phase.

External audits are independent and mainly respond to the need for certified controls with probative value. However, they are intrinsically limited by a lack of access to internal processes and information (such as source code or training data), which are sometimes subject to commercial confidentiality.

In practice, companies such as Google, which have invested heavily in developing AI systems, are reluctant to disclose this information to external auditors. The protection of intellectual property and business confidentiality is therefore a major obstacle to transparency.

A predeployment internal audit makes it possible to intervene proactively rather than reactively, and to anticipate potential bugs or risks. It complements the external audit and improves transparency by producing, at each stage of product development, documents that external experts can consult.

The SMACTR method, a five-act play

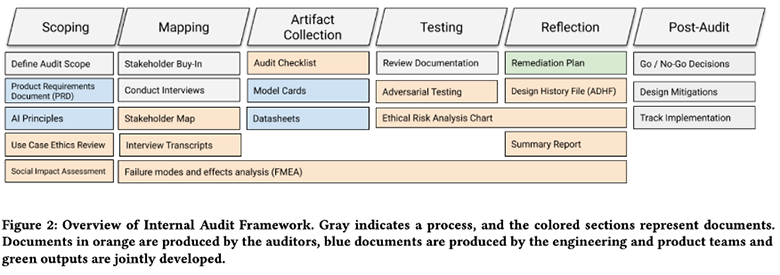

Inspired by practices from other industries, the audit framework proposed by Google AI and the Partnership on AI is split into five stages and relies on sets of documents, called "artifacts", produced by the auditors and the development teams. This is the SMACTR method: Scoping, Mapping, Artifact Collection, Testing, and Reflection.

The first stage, scoping, defines the audit's scope by examining the motivations and intended impact of the system, and by confirming the principles meant to guide its development. This is done through an ethical review, which should include a variety of viewpoints to limit "coding" biases as much as possible, and through a social impact assessment. This phase aims to answer questions such as "How could using the system change individuals' lives?" and "What are the potential social, economic, and cultural harms?".

The mapping stage consists of analysing information about the different stakeholders and about the product's development cycle. In particular, it relies on a map of the various collaborators, a review of the existing documentation, and the results of an ethnographic field study based on interviews with key people in the organisation. This study should give a better understanding of how certain decisions, such as the choice of dataset or model architecture, were made and how they will influence the system's behaviour.

During the third stage, artifact collection, the auditor draws up an inventory of all the documents that should have been produced during development and that are needed to start the audit. These include model cards and datasheets, two complementary standards intended to improve the auditability of algorithms. Model cards describe the performance characteristics of the model. Datasheets document, in particular, the data collection process and are intended to help dataset users make informed decisions.
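The inventory step amounts to comparing what the audit expects against what the teams actually handed over. The sketch below lists only artifacts named in the stages described so far; the snake_case identifiers, the `EXPECTED_ARTIFACTS` layout, and the `missing_artifacts` helper are hypothetical choices, not part of the SMACTR paper.

```python
# Hypothetical checklist: artifacts named in the scoping, mapping and
# collection stages, grouped by stage. Identifiers are illustrative.
EXPECTED_ARTIFACTS: dict[str, list[str]] = {
    "scoping": ["ethical_review", "social_impact_assessment"],
    "mapping": ["stakeholder_map", "ethnographic_field_study"],
    "artifact_collection": ["model_card", "datasheet"],
}


def missing_artifacts(collected: set[str]) -> dict[str, list[str]]:
    """Return, per stage, the expected artifacts that were not collected.
    Stages with nothing missing are omitted."""
    report = {}
    for stage, names in EXPECTED_ARTIFACTS.items():
        missing = [name for name in names if name not in collected]
        if missing:
            report[stage] = missing
    return report


collected = {"ethical_review", "stakeholder_map", "model_card"}
for stage, names in missing_artifacts(collected).items():
    print(f"{stage}: missing {', '.join(names)}")
```

A gap in this report is itself an audit finding: the auditor either obtains the missing document or records that it was never produced.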

Then, during the testing stage, the auditors interact with the system to assess whether it complies with the organisation's ethical values. Drawing on adversarial examples (where the system is "tricked" with fake input data so that its responses can be observed), they can for instance submit fake user profiles, in particular profiles representing different groups, to check whether the system produces biased results. This stage also produces an ethical risk analysis chart, which combines the likelihood of a failure (estimated from how often failures occurred during testing) with its severity (assessed during the previous stages) to determine how important each risk is.
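The chart's logic can be sketched as a classic likelihood-times-severity matrix. Everything concrete here is an assumption for illustration: the 1-to-3 scales, the failure-rate thresholds in `likelihood_level`, and the example risks and counts are invented, not taken from the article or the paper.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    failures: int   # failures observed during testing
    trials: int     # test cases run for this risk
    severity: int   # 1 (minor) to 3 (severe), assessed in earlier stages


def likelihood_level(rate: float) -> int:
    """Bin an observed failure rate into 1 (rare), 2 (occasional) or 3 (frequent).
    The thresholds are illustrative assumptions."""
    if rate < 0.01:
        return 1
    if rate < 0.10:
        return 2
    return 3


def risk_priority(r: Risk) -> int:
    """Combine likelihood and severity into a score from 1 to 9."""
    if r.trials <= 0:
        raise ValueError(f"no test cases recorded for {r.name!r}")
    return likelihood_level(r.failures / r.trials) * r.severity


# Hypothetical test results, for illustration only.
risks = [
    Risk("biased results on fake user profiles", failures=12, trials=100, severity=3),
    Risk("unstable output on misspelled input", failures=3, trials=100, severity=1),
    Risk("personal data exposed in explanations", failures=0, trials=200, severity=3),
]
for r in sorted(risks, key=risk_priority, reverse=True):
    print(f"{risk_priority(r)}  {r.name}")
```

Multiplying the two levels keeps the ranking simple; a rare but severe failure can still outrank a frequent but minor one, which is the trade-off the chart is meant to make visible.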

Finally, the reflection stage consists of comparing the results obtained with the pre-defined ethical expectations and presenting a risk analysis that describes the principles that could be threatened once the system is deployed. The auditors then propose a mitigation plan aimed at bringing risk down to a pre-defined acceptable threshold. For example, if auditors discover unequal classifier performance across subgroups, what level of parity must be reached?
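A parity check of this kind can be sketched as computing accuracy per subgroup and comparing the largest gap with a threshold agreed before the audit. The threshold value `MAX_GAP`, the choice of accuracy as the metric, and the records below are hypothetical toy values, not figures from the article.

```python
from collections import defaultdict
from typing import Iterable


def subgroup_accuracy(records: Iterable[tuple[str, int, int]]) -> dict[str, float]:
    """records: (group, true_label, predicted_label). Returns accuracy per group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


def parity_gap(acc: dict[str, float]) -> float:
    """Largest difference in accuracy between any two subgroups."""
    return max(acc.values()) - min(acc.values())


MAX_GAP = 0.05  # hypothetical acceptable threshold fixed before the audit

# Toy predictions for two subgroups, invented for illustration.
records = [("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
           ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 0)]
acc = subgroup_accuracy(records)
gap = parity_gap(acc)
print(acc, f"gap={gap:.2f}", "OK" if gap <= MAX_GAP else "needs mitigation")
```

Accuracy is only one possible parity metric; an audit could equally compare false-positive or false-negative rates, and choosing which one matters is part of defining the acceptable threshold.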

The “audit-washing” pitfall

As the Google AI and Partnership on AI researchers point out in their article, internal auditing has limits, mainly linked to the difficulty internal auditors face in remaining independent and objective while carrying out their mission. Just as AI systems are not independent from their developers, "the audit is never isolated from the practices and people conducting the audit".

To prevent internal auditing from becoming a mere marketing tool that gives a misleading picture of corporate responsibility, auditors must be aware of their own biases and opinions.

Broadly speaking, the audit process must be slow, meticulous, and methodical, in stark contrast to the typical development speed of AI technologies. It can even lead to the development of the audited system being discontinued when the risks outweigh the benefits. It is nonetheless a necessary process, both to ensure that algorithms are reliable, trustworthy, and fair, and to make them socially acceptable.

Closing the AI Accountability Gap: Defining an End-to-End Framework for Internal Algorithmic Auditing: https://arxiv.org/pdf/2001.00973.pdf

Founded in 2016 by Amazon, Facebook, Google, DeepMind, Microsoft, and IBM (joined by Apple in 2017 and Baidu in 2018), the Partnership on AI is an international group of experts from academia, civil society, and industry. It was set up to develop best practices for AI technologies, to advance public understanding of the field, and to serve as a discussion platform about AI and its impacts on people and society.